AI Practice: TensorFlow Notes (MOOC), Lecture 4: Extending the Network Boilerplate

[TOC]

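Recap of the tf.keras boilerplate for building a neural network, the six-step method. Step 1) import: import the required libraries and packages.

Goals of this lecture: ① Build your own dataset to solve problems in your own domain.

Code review from the previous lecture: p14_mnist_sequential.py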

```python
import tensorflow as tf

mnist = tf.keras.datasets.mnist
(x_train, y_train), (x_test, y_test) = mnist.load_data()
x_train, x_test = x_train / 255.0, x_test / 255.0  # normalize pixels to [0, 1]

model = tf.keras.models.Sequential([
    tf.keras.layers.Flatten(),
    tf.keras.layers.Dense(128, activation='relu'),
    tf.keras.layers.Dense(10, activation='softmax')
])

model.compile(optimizer='adam',
              loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=False),
              metrics=['sparse_categorical_accuracy'])

model.fit(x_train, y_train, batch_size=32, epochs=5,
          validation_data=(x_test, y_test), validation_freq=1)
model.summary()
```
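As a rough cross-check of what `model.summary()` reports for this network, the parameter counts can be worked out by hand. The sketch below assumes 28x28 inputs and the two Dense layers shown above; the helper name `dense_params` is made up for illustration.

```python
def dense_params(n_in: int, n_out: int) -> int:
    """Weights (n_in * n_out) plus one bias per output unit."""
    return n_in * n_out + n_out


flat = 28 * 28                      # Flatten: 28x28 image -> 784 features
hidden = dense_params(flat, 128)    # Dense(128, relu)
output = dense_params(128, 10)      # Dense(10, softmax)
total = hidden + output

print(flat, hidden, output, total)  # 784 100480 1290 101770
```

Flatten itself has no trainable parameters, so only the two Dense layers contribute to the total.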

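1-Custom dataset: solving problems in your own domain

Training set images: ...\class4\MNIST_FC\mnist_image_label\mnist_train_jpg_60000

Training set labels: ...\class4\MNIST_FC\mnist_image_label\mnist_train_jpg_60000.txt

Test set images: ...\class4\MNIST_FC\mnist_image_label\mnist_test_jpg_10000

Test set labels: ...\class4\MNIST_FC\mnist_image_label\mnist_test_jpg_10000.txt

The training set has 60,000 images and the test set has 10,000. They are grayscale images with white digits on a black background. Each image has 28 rows and 28 columns of pixels, and each pixel is an integer between 0 and 255, with 0 for pure black and 255 for pure white.

In the previous lecture, importing the MNIST dataset showed the data structure: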

```python
mnist = tf.keras.datasets.mnist
(x_train, y_train), (x_test, y_test) = mnist.load_data()
```

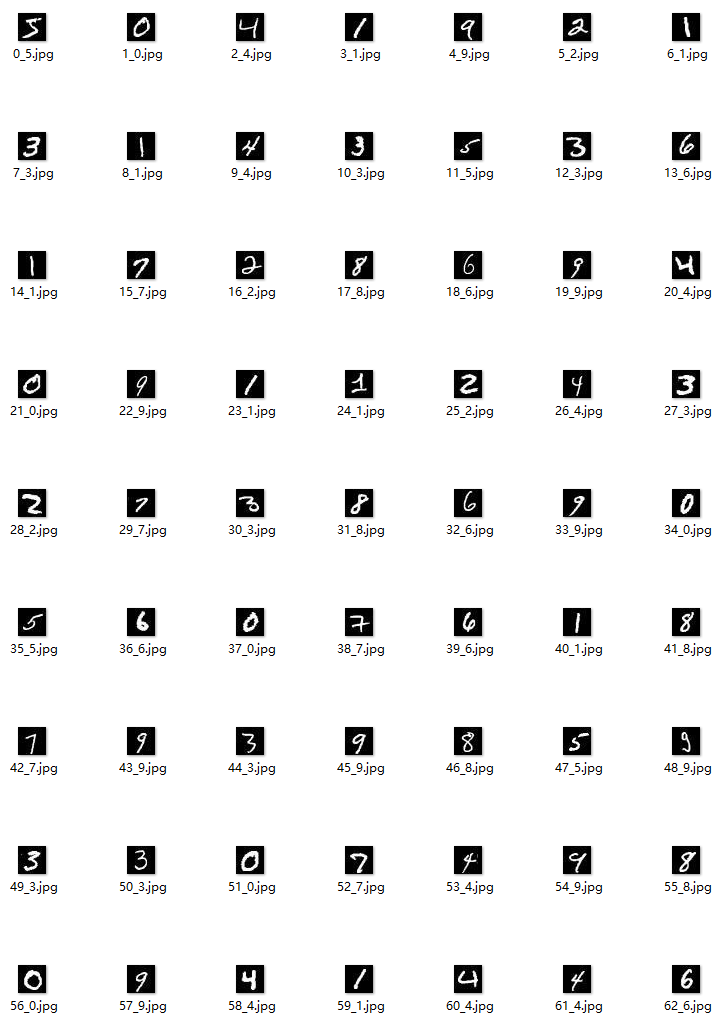

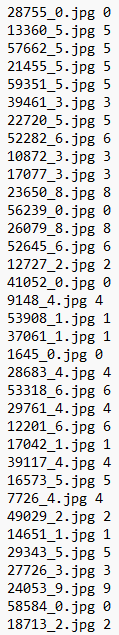

def generateds(image path, label file) defines the dataset. The label file mnist_train_jpg_xxxxx.txt looks like this:

| value[0] | value[1] |
| --- | --- |
| 0_5.jpg | 5 |
| 1_0.jpg | 0 |
| 2_4.jpg | 4 |
| 3_1.jpg | 1 |
| 4_9.jpg | 9 |

```python
from PIL import Image
import numpy as np


def generateds(path, txt):
    with open(txt, 'r') as f:           # open the label file read-only
        contents = f.readlines()        # read every line
    x, y_ = [], []
    for content in contents:
        value = content.split()         # value[0]: file name, value[1]: label
        img_path = path + value[0]      # full path of the image
        img = Image.open(img_path)
        img = np.array(img.convert('L'))  # 8-bit grayscale as a NumPy array
        img = img / 255.                # normalize to [0, 1]
        x.append(img)
        y_.append(value[1])
        print('loading : ' + content)

    x = np.array(x)
    y_ = np.array(y_)
    y_ = y_.astype(np.int64)            # labels as 64-bit integers
    return x, y_
```
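The label-parsing step inside generateds can be tried on its own without any images. The following is a minimal sketch using only the standard library; the helper name `read_label_file`, the temporary file, and the `images` directory name are assumptions made for illustration, and unlike the original it skips blank lines.

```python
import os
import tempfile


def read_label_file(image_dir, label_file):
    """Return (image_paths, labels) parsed from 'file_name label' lines."""
    paths, labels = [], []
    with open(label_file, "r") as f:
        for line in f:
            value = line.split()          # value[0]: file name, value[1]: label
            if len(value) < 2:            # skip blank or malformed lines
                continue
            paths.append(os.path.join(image_dir, value[0]))
            labels.append(int(value[1]))
    return paths, labels


with tempfile.TemporaryDirectory() as tmp:
    label_file = os.path.join(tmp, "labels.txt")
    with open(label_file, "w") as f:
        f.write("0_5.jpg 5\n1_0.jpg 0\n\n2_4.jpg 4\n")
    paths, labels = read_label_file("images", label_file)

print(labels)                                   # [5, 0, 4]
print([os.path.basename(p) for p in paths])     # ['0_5.jpg', '1_0.jpg', '2_4.jpg']
```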

Complete code for generating the dataset yourself: P8_fashion_train_ex1.py

```python
import tensorflow as tf
from PIL import Image
import numpy as np
import os

train_path = './fashion_image_label/fashion_train_jpg_60000/'
train_txt = './fashion_image_label/fashion_train_jpg_60000.txt'
x_train_savepath = './fashion_image_label/fashion_x_train.npy'
y_train_savepath = './fashion_image_label/fashion_y_train.npy'

test_path = './fashion_image_label/fashion_test_jpg_10000/'
test_txt = './fashion_image_label/fashion_test_jpg_10000.txt'
x_test_savepath = './fashion_image_label/fashion_x_test.npy'
y_test_savepath = './fashion_image_label/fashion_y_test.npy'


def generateds(path, txt):
    with open(txt, 'r') as f:           # open the label file read-only
        contents = f.readlines()        # read every line
    x, y_ = [], []
    for content in contents:
        value = content.split()         # value[0]: file name, value[1]: label
        img_path = path + value[0]
        img = Image.open(img_path)
        img = np.array(img.convert('L'))  # 8-bit grayscale as a NumPy array
        img = img / 255.                # normalize to [0, 1]
        x.append(img)
        y_.append(value[1])
        print('loading : ' + content)

    x = np.array(x)
    y_ = np.array(y_)
    y_ = y_.astype(np.int64)
    return x, y_


if os.path.exists(x_train_savepath) and os.path.exists(y_train_savepath) and os.path.exists(
        x_test_savepath) and os.path.exists(y_test_savepath):
    # Cached .npy files exist: load them and restore the 28x28 image shape
    print('-------------Load Datasets-----------------')
    x_train_save = np.load(x_train_savepath)
    y_train = np.load(y_train_savepath)
    x_test_save = np.load(x_test_savepath)
    y_test = np.load(y_test_savepath)
    x_train = np.reshape(x_train_save, (len(x_train_save), 28, 28))
    x_test = np.reshape(x_test_save, (len(x_test_save), 28, 28))
else:
    # No cache yet: build the arrays from the images and label files
    print('-------------Generate Datasets-----------------')
    x_train, y_train = generateds(train_path, train_txt)
    x_test, y_test = generateds(test_path, test_txt)

    print('-------------Save Datasets-----------------')
    x_train_save = np.reshape(x_train, (len(x_train), -1))  # flatten each image
    x_test_save = np.reshape(x_test, (len(x_test), -1))
    np.save(x_train_savepath, x_train_save)
    np.save(y_train_savepath, y_train)
    np.save(x_test_savepath, x_test_save)
    np.save(y_test_savepath, y_test)

model = tf.keras.models.Sequential([
    tf.keras.layers.Flatten(),
    tf.keras.layers.Dense(128, activation='relu'),
    tf.keras.layers.Dense(10, activation='softmax')
])

model.compile(optimizer='adam',
              loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=False),
              metrics=['sparse_categorical_accuracy'])

model.fit(x_train, y_train, batch_size=32, epochs=5,
          validation_data=(x_test, y_test), validation_freq=1)
model.summary()
```

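On the first run, the generateds() function builds the dataset, which is then saved in .npy format. Once the dataset has been generated, the program starts training, and recognition accuracy improves as the number of epochs increases.

After the first run has completed, later runs load the saved dataset directly and go straight to training.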
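The generate-once, load-afterwards behavior can be sketched in isolation. This is a simplified illustration, not the course code: `load_or_build` and `fake_images` are hypothetical names, the data is random, and a temporary directory stands in for the dataset folder.

```python
import os
import tempfile

import numpy as np


def load_or_build(cache_path, build_fn, image_shape=(28, 28)):
    """Load a cached array if present; otherwise build it and cache it flattened."""
    if os.path.exists(cache_path):
        flat = np.load(cache_path)
        return np.reshape(flat, (len(flat),) + image_shape), "loaded"
    data = build_fn()
    np.save(cache_path, np.reshape(data, (len(data), -1)))  # one row per image
    return data, "generated"


def fake_images():
    # Hypothetical stand-in for generateds(): four random 28x28 "images"
    return np.random.default_rng(0).random((4, 28, 28))


with tempfile.TemporaryDirectory() as tmp:
    cache = os.path.join(tmp, "x_train.npy")
    first, how_first = load_or_build(cache, fake_images)     # builds and saves
    second, how_second = load_or_build(cache, fake_images)   # reads the cache

print(how_first, how_second, second.shape)
```

Flattening before saving and reshaping after loading mirrors what the script above does; the round trip leaves the pixel values unchanged.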

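2-Data augmentation: expanding the dataset

Augmenting an image means deforming it, which helps the model cope with distortions caused by, for example, different shooting angles.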

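TensorFlow 2 data augmentation function:

```python
# Schematic: augmentation_options stands for the keyword arguments you choose
image_gen_train = tf.keras.preprocessing.image.ImageDataGenerator(augmentation_options)
image_gen_train.fit(x_train)
```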

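Common augmentation options:

rescale = scaling factor; all data is multiplied by the provided value

rotation_range = range, in degrees, for random rotations

width_shift_range = amount of random horizontal shift

height_shift_range = amount of random vertical shift

horizontal_flip = whether to randomly flip images horizontally

zoom_range = range for random zoom, [1-n, 1+n]

Example: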
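To make these options more concrete, the sketch below converts a set of settings into the intervals the random transforms would be drawn from. It reflects my reading of the Keras documentation (shift values below 1 are treated as a fraction of the image size, and a float zoom_range n gives [1-n, 1+n]); the helper `augmentation_ranges` is hypothetical and does not call Keras.

```python
def augmentation_ranges(rotation_range, width_shift_range, height_shift_range,
                        zoom_range, width=28, height=28):
    """Translate generator settings into concrete sampling intervals."""
    def shift_pixels(value, size):
        # Values below 1 are read as a fraction of the image size
        return value * size if value < 1 else value

    w = shift_pixels(width_shift_range, width)
    h = shift_pixels(height_shift_range, height)
    return {
        "rotation_deg": (-rotation_range, rotation_range),
        "width_shift_px": (-w, w),
        "height_shift_px": (-h, h),
        "zoom": (1 - zoom_range, 1 + zoom_range),
    }


ranges = augmentation_ranges(45, 0.15, 0.15, 0.5)
for name, interval in ranges.items():
    print(name, interval)
```

For a 28x28 image, a shift range of 0.15 therefore corresponds to roughly 4 pixels in either direction.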

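```python
from tensorflow.keras.preprocessing.image import ImageDataGenerator

image_gen_train = ImageDataGenerator(
    rescale=1. / 255,          # scale pixel values to the 0-1 range
    rotation_range=45,         # random rotation of up to 45 degrees
    width_shift_range=.15,     # random horizontal shift
    height_shift_range=.15,    # random vertical shift
    horizontal_flip=True,      # random horizontal flip
    zoom_range=0.5             # random zoom in [0.5, 1.5]
)
image_gen_train.fit(x_train)
```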

Because image_gen_train.fit() expects four-dimensional input (samples, height, width, channels), x_train must be reshaped first:

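```python
# Add a channel dimension: (N, 28, 28) -> (N, 28, 28, 1)
x_train = x_train.reshape(x_train.shape[0], 28, 28, 1)

# Before: train directly on the arrays
# model.fit(x_train, y_train, batch_size=32, ...)

# After: train on batches produced by the generator
model.fit(image_gen_train.flow(x_train, y_train, batch_size=32), ...)
```

1. model.fit(x_train, y_train, batch_size=32, ...) becomes model.fit(image_gen_train.flow(x_train, y_train, batch_size=32), ...);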
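The reshape only adds a trailing channel axis and leaves the pixel values untouched. A small NumPy sketch, using a zero-filled array as a hypothetical stand-in for x_train:

```python
import numpy as np

x = np.zeros((5, 28, 28), dtype=np.float32)   # stand-in for x_train (5 samples)
x4d = x.reshape(x.shape[0], 28, 28, 1)        # add a single grayscale channel

print(x.shape, "->", x4d.shape)               # (5, 28, 28) -> (5, 28, 28, 1)
print(np.array_equal(x4d[..., 0], x))         # True: same pixel values
```

Writing `x[..., np.newaxis]` would give the same shape.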

Data augmentation code: p13_fashion_train_ex2.py

```python
import tensorflow as tf
from tensorflow.keras.preprocessing.image import ImageDataGenerator

fashion = tf.keras.datasets.fashion_mnist
(x_train, y_train), (x_test, y_test) = fashion.load_data()
x_train, x_test = x_train / 255.0, x_test / 255.0
x_train = x_train.reshape(x_train.shape[0], 28, 28, 1)  # add a channel dimension

image_gen_train = ImageDataGenerator(
    rescale=1. / 1.,           # data is already in 0-1, so no further scaling
    rotation_range=45,         # random rotation of up to 45 degrees
    width_shift_range=.15,     # random horizontal shift
    height_shift_range=.15,    # random vertical shift
    horizontal_flip=True,      # random horizontal flip
    zoom_range=0.5             # random zoom in [0.5, 1.5]
)
image_gen_train.fit(x_train)

model = tf.keras.models.Sequential([
    tf.keras.layers.Flatten(),
    tf.keras.layers.Dense(128, activation='relu'),
    tf.keras.layers.Dense(10, activation='softmax')
])

model.compile(optimizer='adam',
              loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=False),
              metrics=['sparse_categorical_accuracy'])

model.fit(image_gen_train.flow(x_train, y_train, batch_size=32), epochs=5,
          validation_data=(x_test, y_test), validation_freq=1)
model.summary()
```

Data augmentation can improve a model's generalization when data is scarce, and the benefit shows when the model is applied in practice.

On the standard MNIST dataset, accuracy alone does not reveal this effect.

3-Resumable training: saving and loading the model

Loading the model: load_weights(path and file name)

```python
import os

checkpoint_save_path = "./checkpoint/mnist.ckpt"
if os.path.exists(checkpoint_save_path + '.index'):
    print('-------------Load the model-----------------')
    model.load_weights(checkpoint_save_path)
```
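The check looks for a `.index` file rather than the `.ckpt` path itself because, as far as I understand the TensorFlow checkpoint format used here, saving under a prefix such as mnist.ckpt writes companion files (an index file plus data shards) and no file named exactly mnist.ckpt. A minimal sketch of that check, with a hypothetical helper `checkpoint_exists` and an empty placeholder file standing in for a real checkpoint:

```python
import os
import tempfile


def checkpoint_exists(prefix):
    """True if an index file for this checkpoint prefix is on disk."""
    return os.path.exists(prefix + ".index")


with tempfile.TemporaryDirectory() as tmp:
    prefix = os.path.join(tmp, "mnist.ckpt")
    before = checkpoint_exists(prefix)
    # Simulate what a finished save leaves behind (empty placeholder file)
    open(prefix + ".index", "w").close()
    after = checkpoint_exists(prefix)

print(before, after)   # False True
```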

Saving the model: use the callback provided by TensorFlow to save the parameters and the network directly.

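```python
# Schematic: path_and_file_name is a placeholder; choose True or False for the flags
cp_callback = tf.keras.callbacks.ModelCheckpoint(
    filepath=path_and_file_name,
    save_weights_only=True/False,   # keep only the weights, or the whole model
    monitor='val_loss',             # quantity to watch
    save_best_only=True/False)      # keep only the best result so far
history = model.fit(x_train, y_train, batch_size=32, epochs=5,
                    validation_data=(x_test, y_test), validation_freq=1,
                    callbacks=[cp_callback])
```

Note: monitor combined with save_best_only saves the best model, for example the model with the lowest training loss, the lowest test loss, the highest training accuracy, or the highest test accuracy.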
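The idea behind monitor plus save_best_only can be illustrated without Keras: a save happens only in epochs where the monitored value beats the best value seen so far. This is a simplified sketch of that rule, not Keras' actual implementation; the function name and the per-epoch numbers are made up for illustration.

```python
def epochs_that_save(values, mode="min"):
    """Return indices of epochs where the monitored value improves on the best so far."""
    best = None
    saved = []
    for epoch, value in enumerate(values):
        improved = (best is None
                    or (mode == "min" and value < best)
                    or (mode == "max" and value > best))
        if improved:
            best = value
            saved.append(epoch)
    return saved


val_loss = [0.52, 0.44, 0.47, 0.41, 0.43]   # made-up numbers for illustration
val_acc = [0.81, 0.84, 0.83, 0.86, 0.85]    # made-up numbers for illustration

print(epochs_that_save(val_loss, mode="min"))   # [0, 1, 3]
print(epochs_that_save(val_acc, mode="max"))    # [0, 1, 3]
```

A loss is minimized and an accuracy is maximized, which is why the comparison direction depends on the monitored quantity.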

```python
cp_callback = tf.keras.callbacks.ModelCheckpoint(filepath=checkpoint_save_path,
                                                 save_weights_only=True,
                                                 save_best_only=True)
history = model.fit(x_train, y_train, batch_size=32, epochs=5,
                    validation_data=(x_test, y_test), validation_freq=1,
                    callbacks=[cp_callback])
```

Resumable training code: p16_fashion_train_ex3.py

```python
import tensorflow as tf
import os

fashion = tf.keras.datasets.fashion_mnist
(x_train, y_train), (x_test, y_test) = fashion.load_data()
x_train, x_test = x_train / 255.0, x_test / 255.0

model = tf.keras.models.Sequential([
    tf.keras.layers.Flatten(),
    tf.keras.layers.Dense(128, activation='relu'),
    tf.keras.layers.Dense(10, activation='softmax')
])

model.compile(optimizer='adam',
              loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=False),
              metrics=['sparse_categorical_accuracy'])

# Resume from an earlier run if a checkpoint is present
checkpoint_save_path = "./checkpoint/fashion.ckpt"
if os.path.exists(checkpoint_save_path + '.index'):
    print('-------------load the model-----------------')
    model.load_weights(checkpoint_save_path)

cp_callback = tf.keras.callbacks.ModelCheckpoint(filepath=checkpoint_save_path,
                                                 save_weights_only=True,
                                                 save_best_only=True)

history = model.fit(x_train, y_train, batch_size=32, epochs=5,
                    validation_data=(x_test, y_test), validation_freq=1,
                    callbacks=[cp_callback])
model.summary()
```

The first run creates the checkpoint folder; later runs continue training from the previous result.

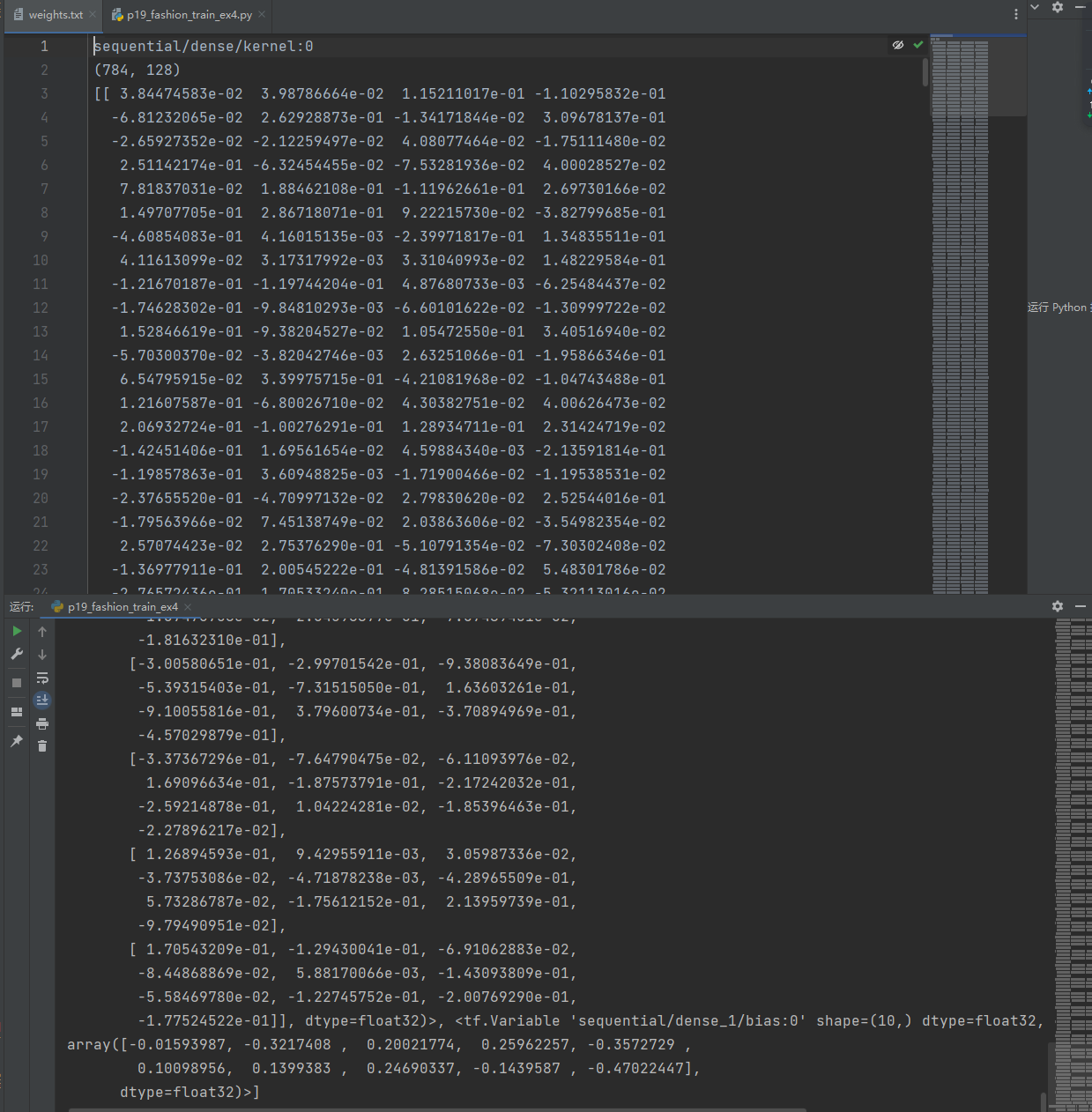

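4-Parameter extraction: writing parameters to a text file

Return the trainable parameters of the model: model.trainable_variables

If you print them directly, much of the data is replaced by ellipses.

Set the print format: np.set_printoptions(threshold=number of elements above which output is abbreviated)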

```python
np.set_printoptions(threshold=np.inf)
```

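```python
# np.set_printoptions(
#     precision=number of decimal places to keep (rounded),
#     threshold=arrays with at most this many elements are printed in full;
#               larger arrays are summarized, showing the first and last few
#               elements (edgeitems, 3 by default) with an ellipsis in between)

>>> np.set_printoptions(precision=5)
>>> print(np.array([1.123456789]))
[1.12346]
>>> np.set_printoptions(threshold=5)
>>> print(np.arange(10))
[0 1 2 ... 7 8 9]
```

Note: to print every array element, use threshold=np.inf as above. The original course note suggests threshold=np.nan, which recent NumPy versions reject. It also lists precision=np.inf for printing all decimal places; recent NumPy versions expect an integer precision, so that setting may not work there.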
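The effect of these options can be checked side by side with the `np.printoptions` context manager, which applies a setting temporarily instead of globally. A small sketch, assuming a NumPy version that provides `np.printoptions`:

```python
import numpy as np

values = np.arange(10)

with np.printoptions(threshold=5):        # 10 elements > 5: summarized
    summarized = str(values)
with np.printoptions(threshold=np.inf):   # never summarize
    full = str(values)
with np.printoptions(precision=5):        # keep 5 decimal places
    rounded = str(np.array([1.123456789]))

print(summarized)   # [0 1 2 ... 7 8 9]
print(full)         # [0 1 2 3 4 5 6 7 8 9]
print(rounded)      # [1.12346]
```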

```python
print(model.trainable_variables)
with open('./weights.txt', 'w') as file:
    for v in model.trainable_variables:
        file.write(str(v.name) + '\n')     # variable name
        file.write(str(v.shape) + '\n')    # variable shape
        file.write(str(v.numpy()) + '\n')  # variable values
```

Adding parameter extraction on top of resumable training:

p19_fashion_train_ex4.py

```python
import tensorflow as tf
import os
import numpy as np

np.set_printoptions(threshold=np.inf)  # print arrays in full

fashion = tf.keras.datasets.fashion_mnist
(x_train, y_train), (x_test, y_test) = fashion.load_data()
x_train, x_test = x_train / 255.0, x_test / 255.0

model = tf.keras.models.Sequential([
    tf.keras.layers.Flatten(),
    tf.keras.layers.Dense(128, activation='relu'),
    tf.keras.layers.Dense(10, activation='softmax')
])

model.compile(optimizer='adam',
              loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=False),
              metrics=['sparse_categorical_accuracy'])

checkpoint_save_path = "./checkpoint/fashion.ckpt"
if os.path.exists(checkpoint_save_path + '.index'):
    print('-------------load the model-----------------')
    model.load_weights(checkpoint_save_path)

cp_callback = tf.keras.callbacks.ModelCheckpoint(filepath=checkpoint_save_path,
                                                 save_weights_only=True,
                                                 save_best_only=True)

history = model.fit(x_train, y_train, batch_size=32, epochs=5,
                    validation_data=(x_test, y_test), validation_freq=1,
                    callbacks=[cp_callback])
model.summary()

# Write every trainable variable to a text file
print(model.trainable_variables)
with open('./weights.txt', 'w') as file:
    for v in model.trainable_variables:
        file.write(str(v.name) + '\n')
        file.write(str(v.shape) + '\n')
        file.write(str(v.numpy()) + '\n')
```

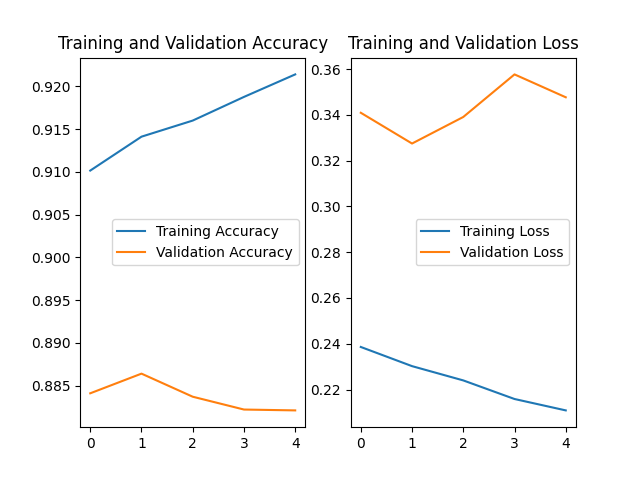

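5-acc/loss visualization: checking training results

```python
# Schematic: the names and ... below are placeholders to fill in
history = model.fit(training_data, training_labels,
                    batch_size=..., epochs=...,
                    validation_split=...,   # fraction of the training data held out for testing
                    validation_data=...,    # test set (use this or validation_split)
                    validation_freq=...)    # how often to run the test
```

While model.fit runs the training process, it also records:

loss: loss on the training set

val_loss: loss on the test set

sparse_categorical_accuracy: accuracy on the training set

val_sparse_categorical_accuracy: accuracy on the test set

These can be extracted with history.history: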

```python
acc = history.history['sparse_categorical_accuracy']
val_acc = history.history['val_sparse_categorical_accuracy']
loss = history.history['loss']
val_loss = history.history['val_loss']
```

Plotting code:
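Since history.history is a plain dictionary mapping each recorded name to a list with one value per epoch, it can be inspected with ordinary Python before any plotting. The sketch below uses a hand-written dictionary with made-up values in place of a real training run; `best_epoch` and `train_val_gap` are hypothetical helpers.

```python
history_dict = {   # made-up values, shaped like history.history after 3 epochs
    "loss": [0.50, 0.38, 0.33],
    "val_loss": [0.43, 0.40, 0.41],
    "sparse_categorical_accuracy": [0.82, 0.86, 0.88],
    "val_sparse_categorical_accuracy": [0.85, 0.86, 0.85],
}


def best_epoch(history, key="val_loss"):
    """Return the 1-based epoch with the smallest value for `key`."""
    values = history[key]
    return min(range(len(values)), key=values.__getitem__) + 1


def train_val_gap(history, metric="sparse_categorical_accuracy"):
    """Per-epoch difference between the training and validation value of a metric."""
    return [round(t - v, 4)
            for t, v in zip(history[metric], history["val_" + metric])]


print(best_epoch(history_dict))       # 2
print(train_val_gap(history_dict))    # [-0.03, 0.0, 0.03]
```

A gap that keeps growing across epochs is one common hint that the model is starting to overfit.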

```python
from matplotlib import pyplot as plt

acc = history.history['sparse_categorical_accuracy']
val_acc = history.history['val_sparse_categorical_accuracy']
loss = history.history['loss']
val_loss = history.history['val_loss']

# Left panel: accuracy curves
plt.subplot(1, 2, 1)
plt.plot(acc, label='Training Accuracy')
plt.plot(val_acc, label='Validation Accuracy')
plt.title('Training and Validation Accuracy')
plt.legend()

# Right panel: loss curves
plt.subplot(1, 2, 2)
plt.plot(loss, label='Training Loss')
plt.plot(val_loss, label='Validation Loss')
plt.title('Training and Validation Loss')
plt.legend()
plt.show()
```

acc/loss visualization adds the plt plotting module and the plotting code on top of Part 4:

p23_fashion_train_ex5.py

```python
import tensorflow as tf
import os
import numpy as np
from matplotlib import pyplot as plt

np.set_printoptions(threshold=np.inf)  # print arrays in full

fashion = tf.keras.datasets.fashion_mnist
(x_train, y_train), (x_test, y_test) = fashion.load_data()
x_train, x_test = x_train / 255.0, x_test / 255.0

model = tf.keras.models.Sequential([
    tf.keras.layers.Flatten(),
    tf.keras.layers.Dense(128, activation='relu'),
    tf.keras.layers.Dense(10, activation='softmax')
])

model.compile(optimizer='adam',
              loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=False),
              metrics=['sparse_categorical_accuracy'])

checkpoint_save_path = "./checkpoint/fashion.ckpt"
if os.path.exists(checkpoint_save_path + '.index'):
    print('-------------load the model-----------------')
    model.load_weights(checkpoint_save_path)

cp_callback = tf.keras.callbacks.ModelCheckpoint(filepath=checkpoint_save_path,
                                                 save_weights_only=True,
                                                 save_best_only=True)

history = model.fit(x_train, y_train, batch_size=32, epochs=5,
                    validation_data=(x_test, y_test), validation_freq=1,
                    callbacks=[cp_callback])
model.summary()

# Write every trainable variable to a text file
print(model.trainable_variables)
with open('./weights.txt', 'w') as file:
    for v in model.trainable_variables:
        file.write(str(v.name) + '\n')
        file.write(str(v.shape) + '\n')
        file.write(str(v.numpy()) + '\n')

# Plot the acc and loss curves for the training and validation sets
acc = history.history['sparse_categorical_accuracy']
val_acc = history.history['val_sparse_categorical_accuracy']
loss = history.history['loss']
val_loss = history.history['val_loss']

plt.subplot(1, 2, 1)
plt.plot(acc, label='Training Accuracy')
plt.plot(val_acc, label='Validation Accuracy')
plt.title('Training and Validation Accuracy')
plt.legend()

plt.subplot(1, 2, 2)
plt.plot(loss, label='Training Loss')
plt.plot(val_loss, label='Validation Loss')
plt.title('Training and Validation Loss')
plt.legend()
plt.show()
```

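6-Application: recognizing objects from images

Input: an image of a handwritten digit:

Output: the recognition result:

Run the application with forward propagation:

```python
predict(input_features, batch_size=integer)
```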

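Note: predict parameters in detail. x: input data, a NumPy array (or a list of NumPy arrays if the model has multiple inputs); batch_size: an integer; because of how GPUs work, values such as 8, 16, 32, 64, ... are preferred, and the default is 32 if not specified; verbose: logging mode, 0 or 1; steps: total number of steps (batches of samples) before the prediction round is declared finished, default None; returns: a NumPy array (or list of arrays) of predictions.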

```python
# 1 - Rebuild the model (same architecture as used for training)
model = tf.keras.models.Sequential([
    tf.keras.layers.Flatten(),
    tf.keras.layers.Dense(128, activation='relu'),
    tf.keras.layers.Dense(10, activation='softmax')])

# 2 - Load the parameters
model.load_weights(model_save_path)

# 3 - Predict
result = model.predict(x_predict)
```

Example code:
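The result returned by predict holds one row of class probabilities per input image, so turning it into a label is an argmax followed by a lookup. A minimal sketch in plain Python: the probability row is made up for illustration, `top_class` is a hypothetical helper, and the class names are those used in the example application in this section.

```python
class_names = ['T-shirt/top', 'Trouser', 'Pullover', 'Dress', 'Coat',
               'Sandal', 'Shirt', 'Sneaker', 'Bag', 'Ankle boot']


def top_class(probabilities, names):
    """Return (name, probability) for the highest-scoring class."""
    index = max(range(len(probabilities)), key=probabilities.__getitem__)
    return names[index], probabilities[index]


# Made-up softmax output for a single image (values sum to 1)
row = [0.01, 0.02, 0.05, 0.02, 0.10, 0.01, 0.70, 0.03, 0.04, 0.02]
name, prob = top_class(row, class_names)
print(name, prob)   # Shirt 0.7
```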

p27_fashion_app.py

```python
from PIL import Image
import numpy as np
import tensorflow as tf

# Named class_names rather than type to avoid shadowing the built-in
class_names = ['T-shirt/top', 'Trouser', 'Pullover', 'Dress', 'Coat',
               'Sandal', 'Shirt', 'Sneaker', 'Bag', 'Ankle boot']

model_save_path = './checkpoint/fashion.ckpt'

# Rebuild the same network that was trained, then load its weights
model = tf.keras.models.Sequential([
    tf.keras.layers.Flatten(),
    tf.keras.layers.Dense(128, activation='relu'),
    tf.keras.layers.Dense(10, activation='softmax')
])

model.load_weights(model_save_path)

preNum = int(input("input the number of test pictures:"))
for i in range(preNum):
    image_path = input("the path of test picture:")

    img = Image.open(image_path)
    # LANCZOS is the filter formerly exposed as Image.ANTIALIAS
    img = img.resize((28, 28), Image.LANCZOS)
    img_arr = np.array(img.convert('L'))  # 8-bit grayscale
    img_arr = 255 - img_arr               # invert colors to match the training data

    img_arr = img_arr / 255.0             # normalize to [0, 1]
    x_predict = img_arr[tf.newaxis, ...]  # add a batch dimension: (1, 28, 28)

    result = model.predict(x_predict)
    pred = tf.argmax(result, axis=1)      # index of the most probable class
    print('\n')
    print(class_names[int(pred[0])])
```