人人共享的机器学习

原文链接:Machine Learning for Everyone

[TOC]

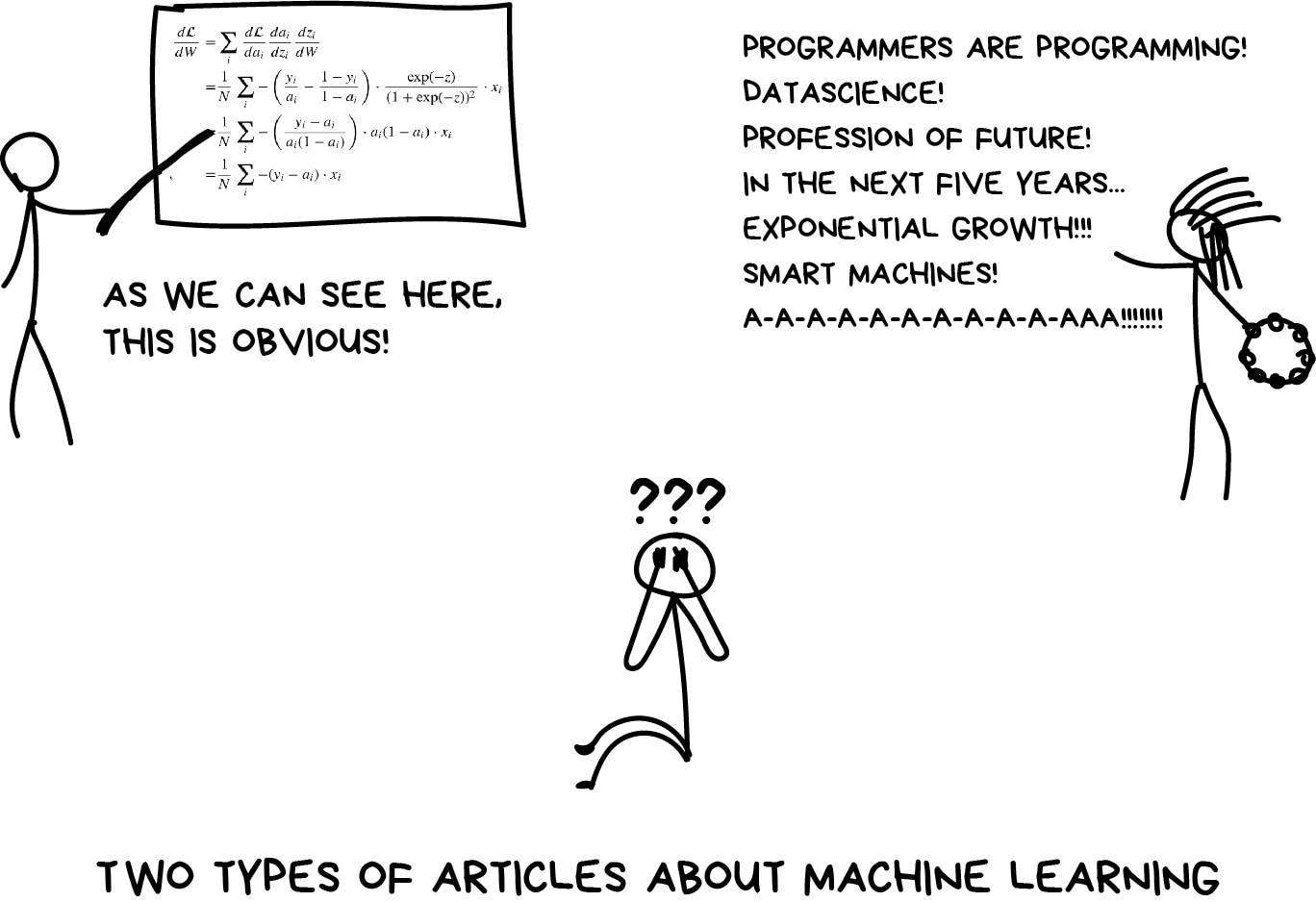

Machine Learning is like sex in high school. Everyone is talking about it, a few know what to do, and only your teacher is doing it. If you ever tried to read articles about machine learning on the Internet, most likely you stumbled upon two types of them: thick academic trilogies filled with theorems (I couldn’t even get through half of one) or fishy fairytales about artificial intelligence, data-science magic, and jobs of the future.

机器学习就像高中时的性爱。每个人都在谈论它,少数人知道该做什么,只有你的老师在做。如果你试图在互联网上阅读关于机器学习的文章,很可能你偶然发现了两种类型的文章:充满定理的厚厚的学术三部曲(我甚至读不完一半),或者关于人工智能、数据科学魔法和未来工作的可疑童话。

I decided to write a post I’ve been wishing existed for a long time. A simple introduction for those who always wanted to understand machine learning. Only real-world problems, practical solutions, simple language, and no high-level theorems. One post for everyone, whether you are a programmer or a manager.

我决定写一篇我一直希望存在已久的帖子。对于那些一直想了解机器学习的人来说,这是一个简单的介绍。只有现实世界的问题,实用的解决方案,简单的语言,没有高级定理。一个,为每个人。无论你是程序员还是经理。

Why do we want machines to learn? 我们为什么要让机器学习?

This is Billy. Billy wants to buy a car. He tries to calculate how much he needs to save monthly for that. He went over dozens of ads on the internet and learned that new cars are around $20,000, used year-old ones are $19,000, 2-year old are $18,000 and so on.

这是比利。比利想买一辆车。他试着计算每月需要存多少钱。他浏览了互联网上的几十个广告,了解到新车价格约为2万美元,二手车价格为1.9万美元,2年车价格为1.8万美元,以此类推。

Billy, our brilliant analyst, starts seeing a pattern: the car price depends on its age and drops $1,000 every year, but won’t get lower than $10,000.

Billy,我们出色的分析师,开始看到一个模式:所以,汽车价格取决于它的年龄,每年下降1000美元,但不会低于10000美元。
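Billy's rule of thumb is simple enough to write down as a function. The sketch below is only an illustration: the name `billy_price` and the constants are taken from the numbers in the story, not from any real pricing data.

```python
def billy_price(age_years: int) -> int:
    """Billy's hand-made rule: start at $20,000, lose $1,000 per year, never drop below $10,000."""
    new_price = 20_000      # price of a brand-new car, from the ads Billy read
    yearly_drop = 1_000     # price drop Billy noticed for every year of age
    floor = 10_000          # Billy's observed lower bound
    return max(floor, new_price - yearly_drop * age_years)


for age in (0, 1, 2, 15):
    print(age, billy_price(age))
```

A 15-year-old car hits the floor and is priced at $10,000, which is exactly the kind of hand-written rule a regression model would later learn from data instead.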

In machine learning terms, Billy invented regression – he predicted a value (price) based on known historical data. People do it all the time, when trying to estimate a reasonable cost for a used iPhone on eBay or figure out how many ribs to buy for a BBQ party. 200 grams per person? 500?

在机器学习方面,Billy发明了回归——他根据已知的历史数据预测价值(价格)。人们总是这样做,试图在易趣上估计一部二手iPhone的合理成本,或者计算出烧烤派对要买多少排骨。每人200克?500?

Yeah, it would be nice to have a simple formula for every problem in the world. Especially, for a BBQ party. Unfortunately, it’s impossible.

是的,如果能为世界上的每一个问题都有一个简单的公式,那就太好了。尤其是烧烤派对。不幸的是,这是不可能的。

Let’s get back to cars. The problem is, they have different manufacturing dates, dozens of options, technical condition, seasonal demand spikes, and god only knows how many more hidden factors. An average Billy can’t keep all that data in his head while calculating the price. Neither can I.

让我们回到汽车上。问题是,它们有不同的生产日期、几十种选择、技术条件、季节性需求激增,天知道还有多少隐藏因素。一个普通的比利在计算价格时无法将所有这些数据都记在脑子里。我也是。

People are dumb and lazy – we need robots to do the maths for them. So, let’s go the computational way here. Let’s provide the machine some data and ask it to find all hidden patterns related to price.

人们又笨又懒——我们需要机器人为他们计算。所以,让我们从计算的角度来看。让我们向机器提供一些数据,并要求它找到所有与价格相关的隐藏模式。

Aaaand it works. The most exciting thing is that the machine copes with this task much better than a real person does when carefully analyzing all the dependencies in their mind.

Aaaan而且有效。最令人兴奋的是,当仔细分析他们脑海中的所有依赖关系时,机器比真人更好地处理这项任务。

That was the birth of machine learning.

这就是机器学习的诞生。

Three components of machine learning 机器学习的三个组成部分

Without all the AI-bullshit, the only goal of machine learning is to predict results based on incoming data. That’s it. All ML tasks can be represented this way, or it’s not an ML problem from the beginning.

如果没有人工智能的废话,机器学习的唯一目标就是根据输入的数据预测结果。就是这样。所有ML任务都可以用这种方式表示,或者从一开始就不是ML问题。

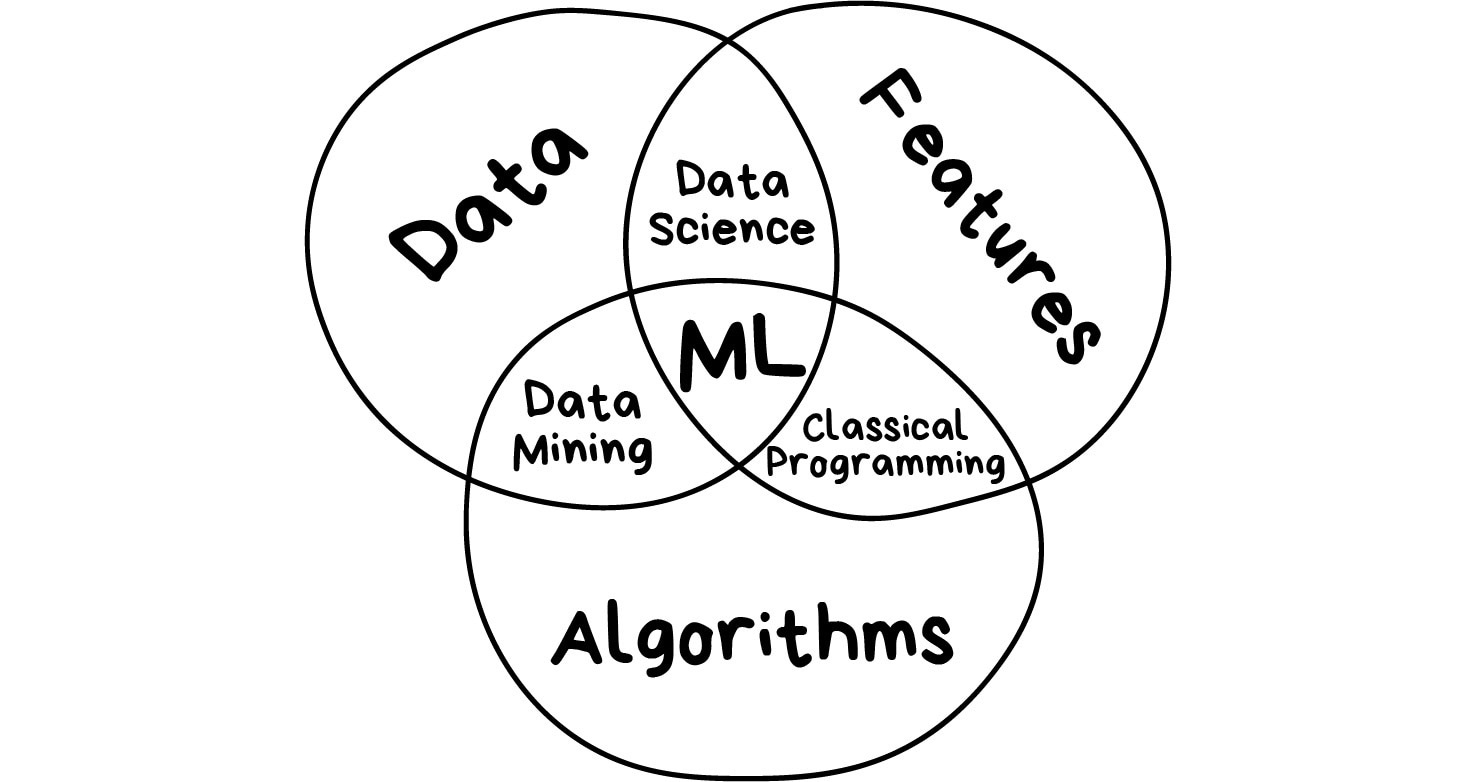

The greater variety in the samples you have, the easier it is to find relevant patterns and predict the result. Therefore, we need three components to teach the machine:

样本的种类越多,就越容易找到相关的模式并预测结果。因此,我们需要三个组件来教机器:

Data. Want to detect spam? Get samples of spam messages. Want to forecast stocks? Find the price history. Want to find out user preferences? Parse their activities on Facebook (no, Mark, stop collecting it, enough!). The more diverse the data, the better the result. Tens of thousands of rows is the bare minimum for the desperate ones.

数据想要检测垃圾邮件吗?获取垃圾邮件的示例。想预测股票吗?查找价格历史记录。想了解用户偏好吗?分析他们在Facebook上的活动(不,马克,停止收集,够了!)。数据越多样化,结果越好。对于绝望的人来说,数以万计的争吵是最低限度的。

There are two main ways to get the data — manual and automatic. Manually collected data contains far fewer errors but takes more time to collect — that makes it more expensive in general.

获取数据主要有两种方式——手动和自动。手动收集的数据包含的错误要少得多,但需要更多的时间来收集,这通常会使数据的成本更高。

The automatic approach is cheaper: you gather everything you can find and hope for the best.

自动方法更便宜——你正在收集你能找到的一切,并希望一切都好。

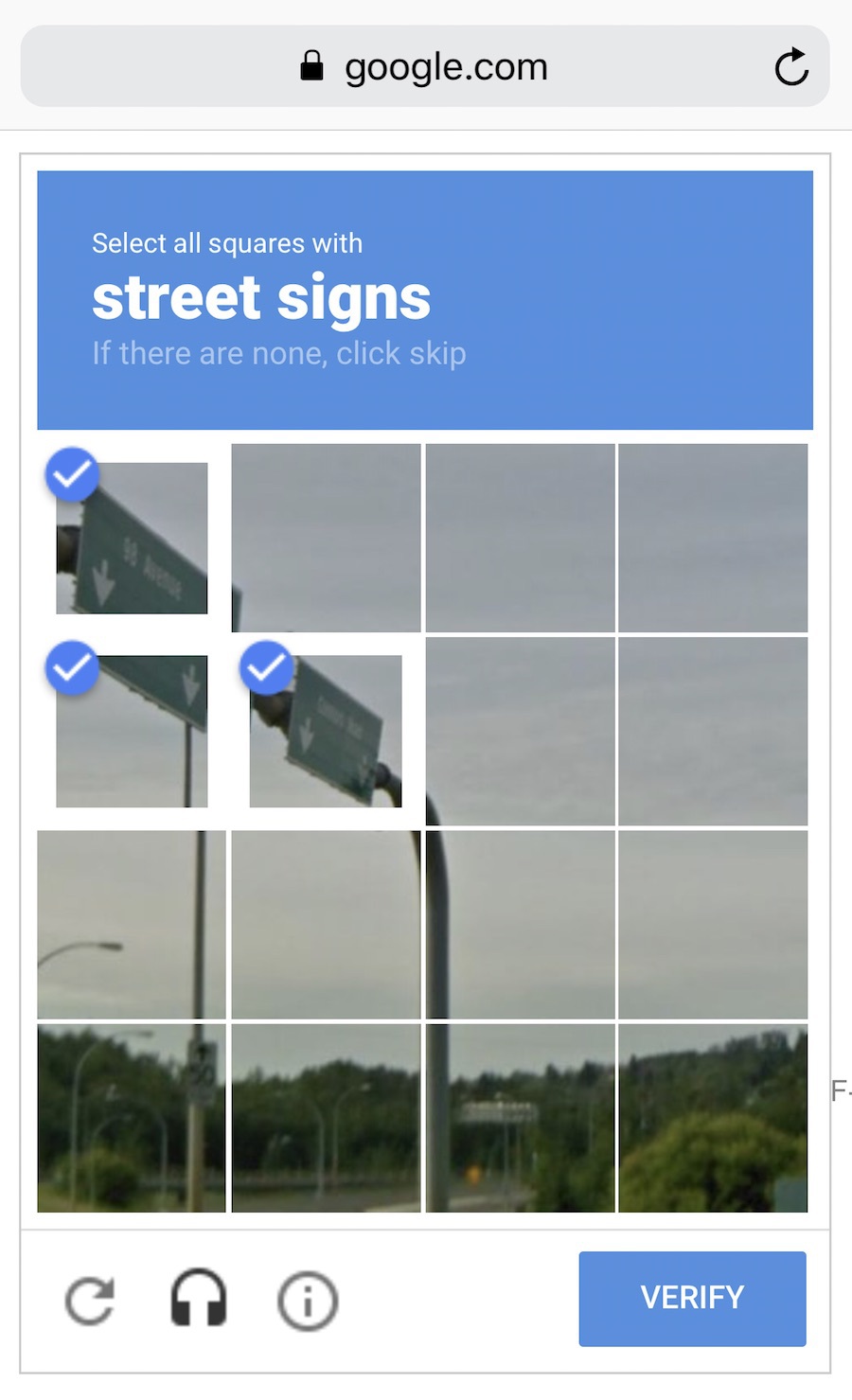

Some smart asses like Google use their own customers to label data for them for free. Remember ReCaptcha which forces you to “Select all street signs”? That’s exactly what they’re doing. Free labour! Nice. In their place, I’d start to show captcha more and more. Oh, wait…

一些像谷歌这样的聪明人利用自己的客户免费为他们标记数据。还记得ReCaptcha强制你“选择所有路标”吗?这正是他们正在做的。免费劳动力!美好的在他们的位置上,我会越来越多地向captcha展示。哦,等等。。。

It’s extremely tough to assemble a good collection of data (usually called a dataset). Datasets are so important that companies may even reveal their algorithms, but rarely their datasets.

收集一个好的数据集(通常称为数据集)是非常困难的。它们是如此重要,以至于公司甚至可以公布他们的算法,但很少公布数据集。

Features. Also known as parameters or variables. Those could be car mileage, user’s gender, stock price, word frequency in the text. In other words, these are the factors for a machine to look at.

特征也称为参数或变量。这些可能是汽车里程、用户性别、股价、文本中的词频。换句话说,这些都是机器需要考虑的因素。

When data is stored in tables it’s simple: features are column names. But what are they if you have 100 GB of cat pics? We cannot consider each pixel as a feature. That’s why selecting the right features usually takes way longer than all the other ML parts. That’s also the main source of errors. Meatbags are always subjective. They choose only features they like or find “more important”. Please, avoid being human.

当数据存储在表中时,这很简单——特性就是列名。但是,如果你有100 Gb的猫照片,它们是什么?我们不能将每个像素视为一个特征。这就是为什么选择正确的特性通常比所有其他ML部分花费更长的时间。这也是错误的主要来源。肉包总是主观的。他们只选择自己喜欢或觉得“更重要”的功能。请不要做人。

Algorithms. The most obvious part. Any problem can be solved differently. The method you choose affects the precision, performance, and size of the final model. There is one important nuance though: if the data is crappy, even the best algorithm won’t help. Sometimes it’s referred to as “garbage in – garbage out”. So don’t pay too much attention to the percentage of accuracy, try to acquire more data first.

算法最明显的部分。任何问题都可以用不同的方式解决。选择的方法会影响最终模型的精度、性能和大小。不过,有一个重要的细微差别:如果数据很糟糕,即使是最好的算法也无济于事。有时它被称为“垃圾进-垃圾出”。因此,不要过于关注准确率,尽量先获取更多数据。

Learning vs Intelligence 学习与智力

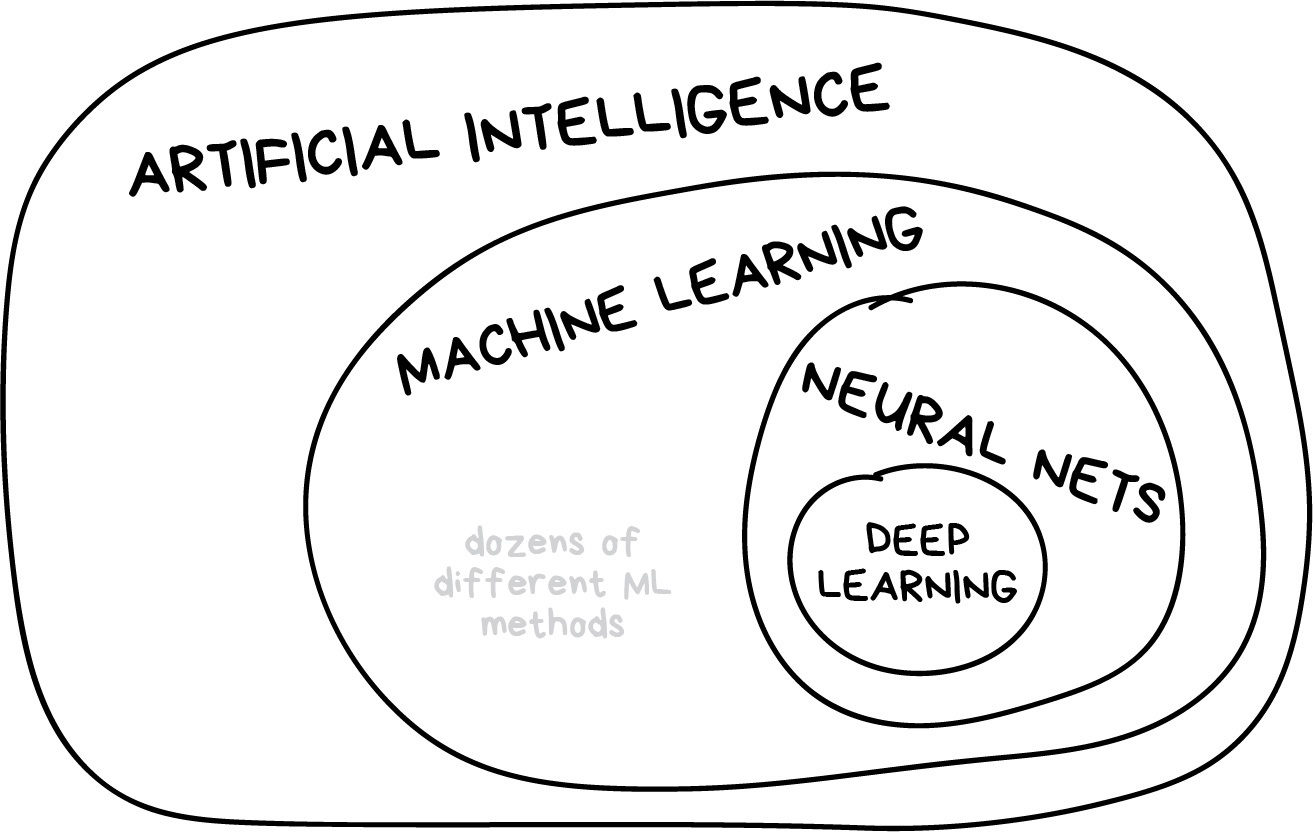

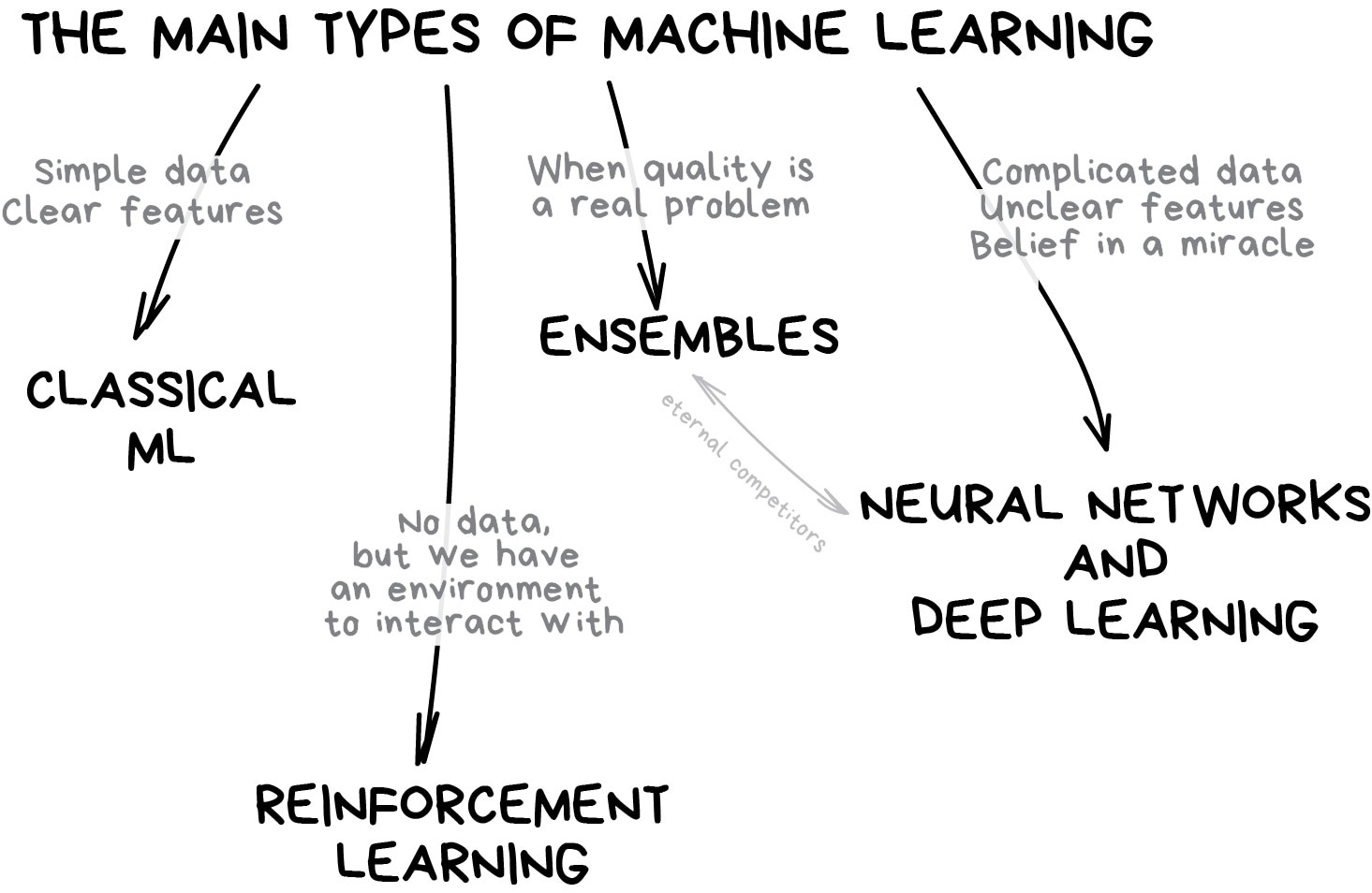

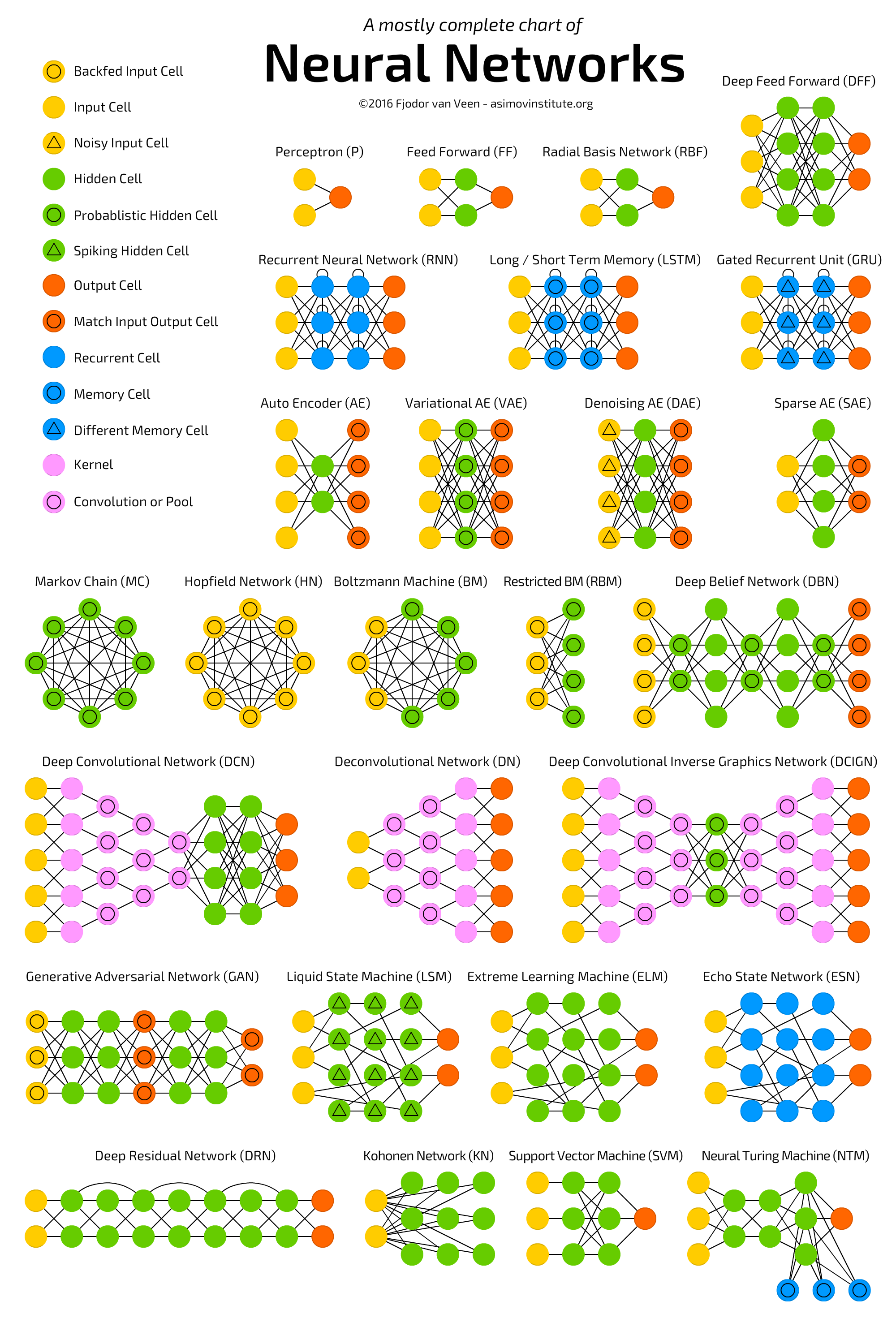

Once I saw an article titled “Will neural networks replace machine learning?” on some hipster media website. These media guys always call any shitty linear regression at least artificial intelligence, almost SkyNet. Here is a simple picture to show who is who.

有一次,我在一些时髦的媒体网站上看到一篇题为“神经网络会取代机器学习吗?”的文章。这些媒体人总是把任何糟糕的线性回归称为人工智能,几乎是天网。这里有一张简单的图片来展示谁是谁。

Artificial intelligence is the name of a whole knowledge field, similar to biology or chemistry.

人工智能是整个知识领域的名称,类似于生物学或化学。

Machine Learning is a part of artificial intelligence. An important part, but not the only one.

机器学习是人工智能的一部分。一个重要的部分,但不是唯一的。

Neural Networks are one type of machine learning. A popular one, but there are other good guys in the class.

神经网络是机器学习类型之一。很受欢迎,但班上还有其他好人。

Deep Learning is a modern method of building, training, and using neural networks. Basically, it’s a new architecture. Nowadays in practice, no one separates deep learning from the “ordinary networks”. We even use the same libraries for them. To not look like a dumbass, it’s better to just name the type of network and avoid buzzwords.

深度学习是一种构建、训练和使用神经网络的现代方法。基本上,这是一个新的体系结构。如今,在实践中,没有人将深度学习与“普通网络”分开。我们甚至为它们使用相同的库。为了不让自己看起来像个傻瓜,最好只是说出网络的类型,避免使用流行语。

The general rule is to compare things on the same level. That’s why the phrase “will neural nets replace machine learning” sounds like “will the wheels replace cars”. Dear media, it’s compromising your reputation a lot.

一般的规则是在同一水平上进行比较。这就是为什么“神经网络会取代机器学习吗”这句话听起来像“车轮会取代汽车吗”。亲爱的媒体,这会大大损害你的声誉。

| Machine can | Machine cannot |
|---|---|
| Forecast | Create something new |
| Memorize | Get smart really fast |
| Reproduce | Go beyond their task |
| Choose best item | Kill all humans |

| 机器可以 | 机器不能 |
|---|---|
| 预测 | 创造新的东西 |
| 记忆 | 快速变得聪明 |
| 复制 | 超越他们的任务 |
| 选择最好的物品 | 杀死所有人类 |

The map of the machine learning world 机器学习世界地图

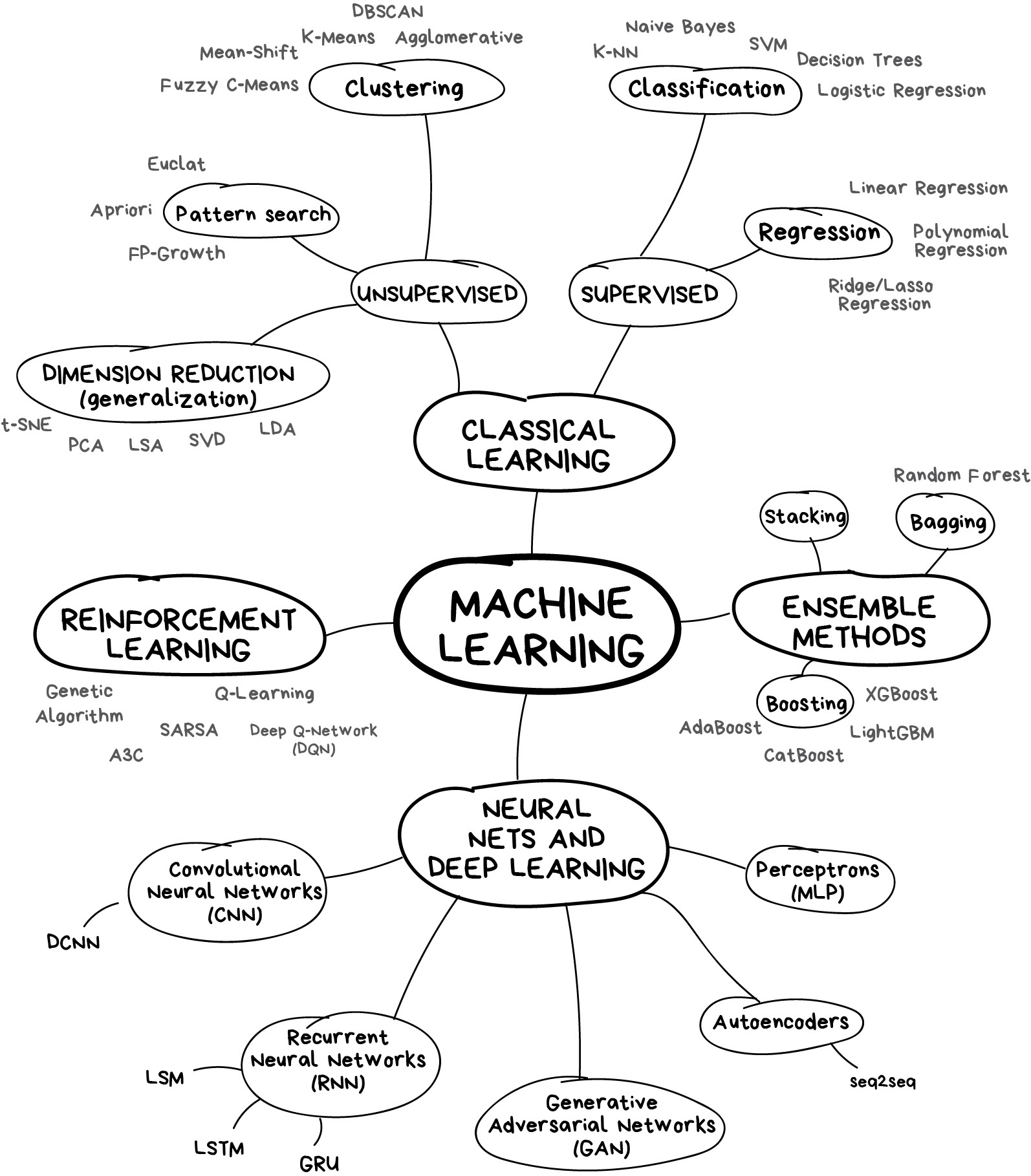

If you are too lazy for long reads, take a look at the picture above to get some understanding.

如果你太懒了,不适合长时间阅读,看看下面的图片,了解一下。

It’s always important to remember that there is never a sole way to solve a problem in the machine learning world. There are always several algorithms that fit, and you have to choose which one fits better. Everything can be solved with a neural network, of course, but who will pay for all these GeForces?

永远重要的是要记住——在机器学习的世界里,解决问题从来没有唯一的方法。总有几种算法适合,你必须选择哪一种更适合。当然,一切都可以用神经网络解决,但谁来为所有这些GeForces买单?

Let’s start with a basic overview. Nowadays there are four main directions in machine learning.

让我们从一个基本概述开始。如今,机器学习有四个主要方向。

Part 1. Classical Machine Learning 第1部分。经典机器学习

The first methods came from pure statistics in the ‘50s. They solved formal math tasks — searching for patterns in numbers, evaluating the proximity of data points, and calculating vectors’ directions.

第一种方法来自50年代的纯统计学。他们解决了正式的数学任务——搜索数字中的模式,评估数据点的接近度,以及计算矢量的方向。

Nowadays, half of the Internet runs on these algorithms. When you see a list of articles to “read next” or your bank blocks your card at a random gas station in the middle of nowhere, most likely it’s the work of one of those little guys.

如今,一半的互联网都在研究这些算法。当你看到一张“下一步要读”的文章清单,或者你的银行在一个不知名的加油站挡住了你的卡,很可能是其中一个小家伙的工作。

Big tech companies are huge fans of neural networks. Obviously. For them, an extra 2% of accuracy means an additional 2 billion in revenue. But when you are small, it doesn’t make sense. I heard stories of teams spending a year on a new recommendation algorithm for their e-commerce website, before discovering that 99% of traffic came from search engines. Their algorithms were useless. Most users didn’t even open the main page.

大型科技公司是神经网络的超级粉丝。明显地对他们来说,2%的准确率意味着额外的20亿收入。但当你很小的时候,这就没有意义了。我听说这些团队花了一年时间为他们的电子商务网站开发一种新的推荐算法,然后发现99%的流量来自搜索引擎。他们的算法毫无用处。大多数用户甚至没有打开主页。

Despite the popularity, classical approaches are so natural that you could easily explain them to a toddler. They are like basic arithmetic — we use it every day, without even thinking.

尽管很受欢迎,但经典的方法是如此自然,你可以很容易地向蹒跚学步的孩子解释它们。它们就像基本的算术——我们每天都在使用它,甚至不用思考。

1.1 Supervised Learning 1.1监督学习

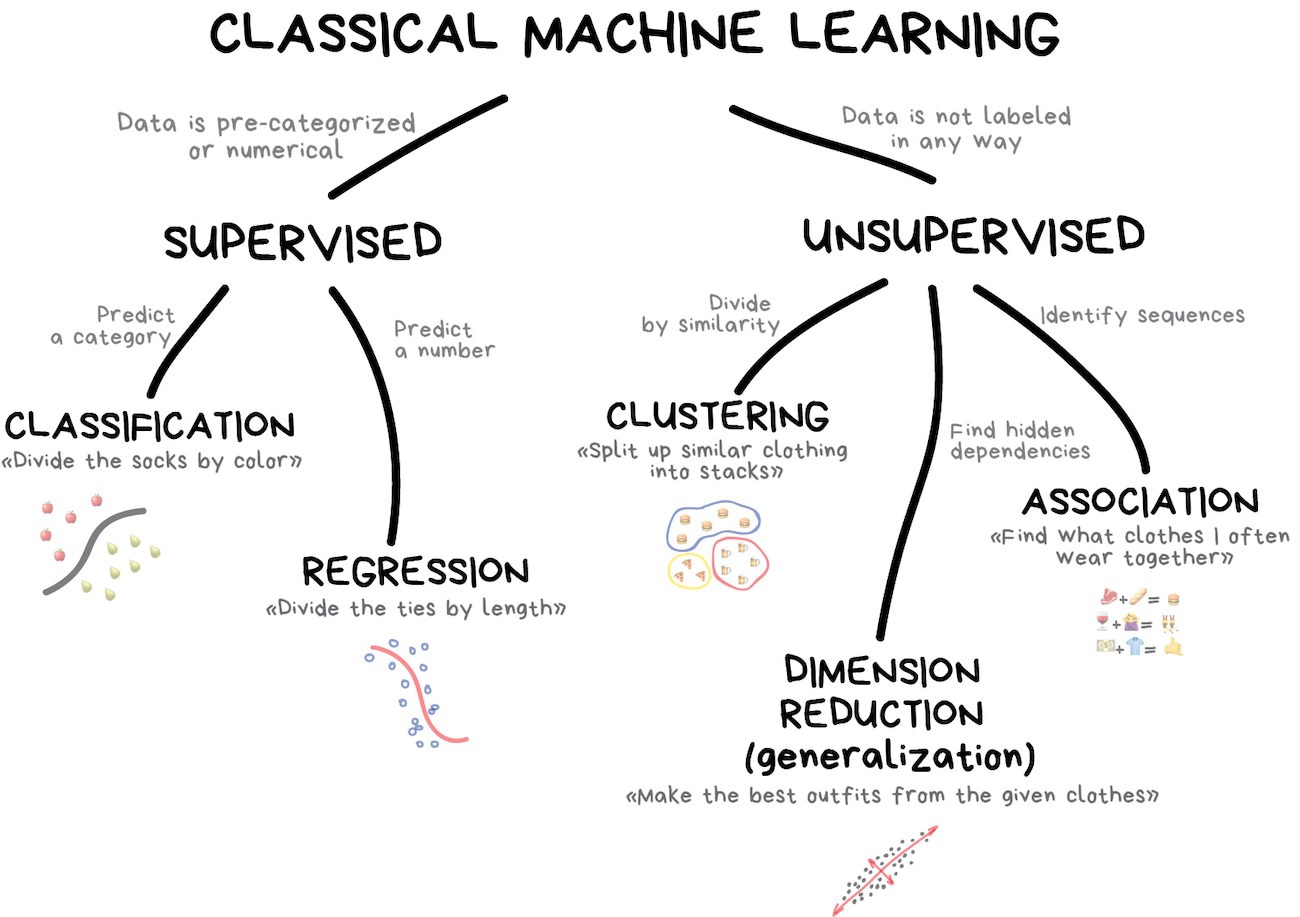

Classical machine learning is often divided into two categories – Supervised and Unsupervised Learning.

经典的机器学习通常分为两类——有监督学习和无监督学习。

In the first case, the machine has a “supervisor” or a “teacher” who gives the machine all the answers, like whether it’s a cat in the picture or a dog. The teacher has already divided (labeled) the data into cats and dogs, and the machine is using these examples to learn. One by one. Dog by cat.

在第一种情况下,机器有一个“主管”或“老师”,他给机器所有的答案,比如照片中的猫还是狗。老师已经将数据分为猫和狗,机器正在使用这些例子进行学习。一个接一个。一只狗一只猫。

Unsupervised learning means the machine is left on its own with a pile of animal photos and a task to find out who’s who. Data is not labeled, there’s no teacher, the machine is trying to find any patterns on its own. We’ll talk about these methods below.

无监督学习意味着机器只剩下一堆动物照片和一项找出谁是谁的任务。数据没有标签,没有老师,机器正试图自己找到任何模式。我们将在下面讨论这些方法。

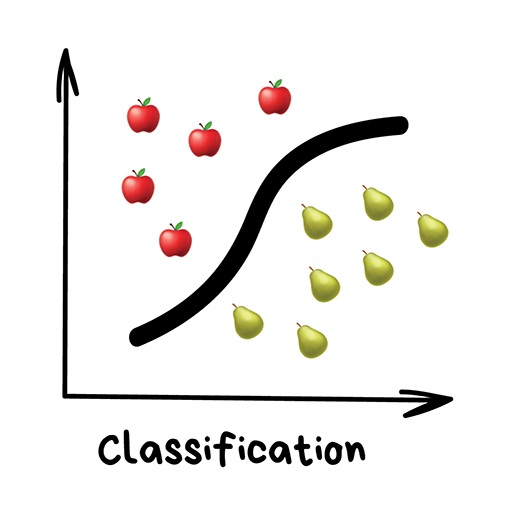

Clearly, the machine will learn faster with a teacher, so it’s more commonly used in real-life tasks. There are two types of such tasks: classification – an object’s category prediction, and regression – prediction of a specific point on a numeric axis.

很明显,这台机器在老师的指导下会学得更快,所以它更常用于现实生活中的任务。这类任务有两种类型:分类——对象的类别预测,回归——数字轴上特定点的预测。

Classification 分类

“Splits objects based on one of the attributes known beforehand. Separate socks by color, documents by language, music by genre”

根据事先已知的一个属性拆分对象。根据颜色、根据语言和音乐类型划分袜子

Today used for:

- Spam filtering

- Language detection

- Search for similar documents

- Sentiment analysis

- Recognition of handwritten characters and numbers

- Fraud detection

今天用于:

- 垃圾邮件过滤

- 语言检测

- 搜索类似文档

- 情绪分析

- 识别手写字符和数字

- 欺诈检测

Popular algorithms: Naive Bayes, Decision Tree, Logistic Regression, K-Nearest Neighbours, Support Vector Machine

流行算法:朴素贝叶斯、决策树、逻辑回归、K近邻、支持向量机

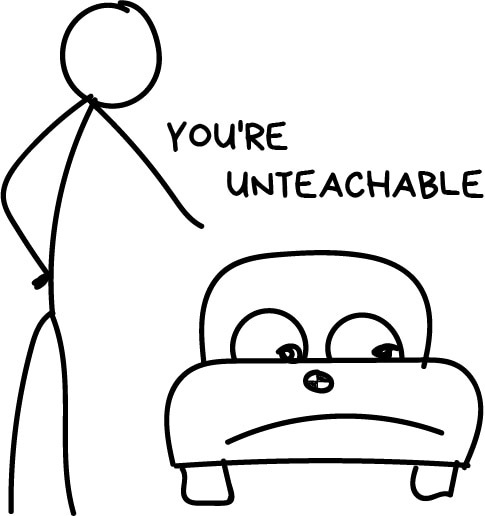

Machine learning is about classifying things, mostly. The machine here is like a baby learning to sort toys: here’s a robot, here’s a car, here’s a robo-car… Oh, wait. Error! Error!

机器学习主要是对事物进行分类。这里的机器就像一个婴儿在学习分类玩具:这是一个机器人,这是一辆汽车,这是机器人汽车。。。哦,等等。错误错误

In classification, you always need a teacher. The data should be labeled with features so the machine could assign the classes based on them. Everything could be classified — users based on interests (as algorithmic feeds do), articles based on language and topic (that’s important for search engines), music based on genre (Spotify playlists), and even your emails.

在分类方面,你总是需要一位老师。数据应该标有特征,这样机器就可以根据这些特征分配类。一切都可以分类——用户基于兴趣(就像算法提要一样),文章基于语言和主题(这对搜索引擎很重要),音乐基于流派(Spotify播放列表),甚至你的电子邮件。

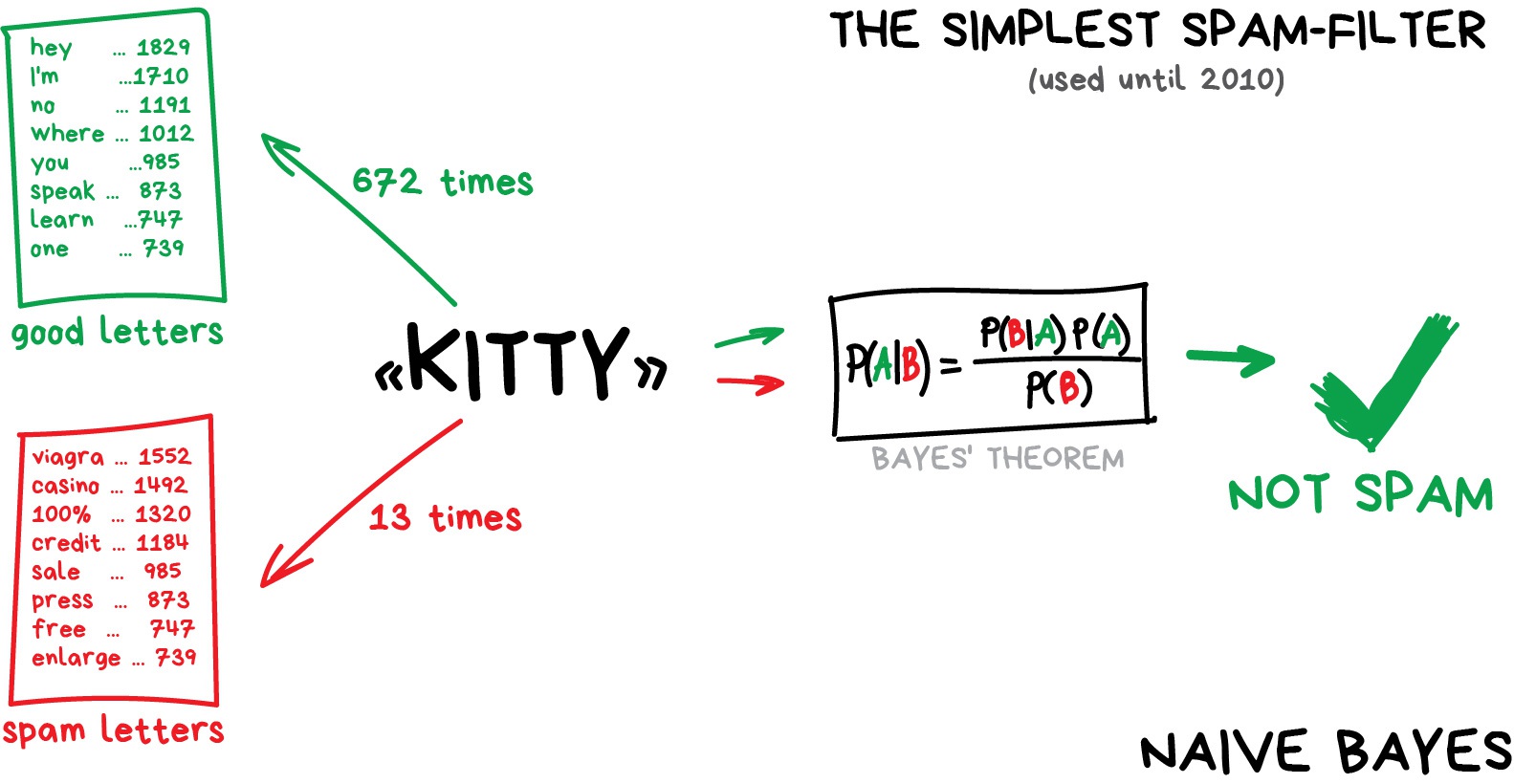

In spam filtering the Naive Bayes algorithm was widely used. The machine counts the number of “viagra” mentions in spam and normal mail, then it multiplies both probabilities using the Bayes equation, sums the results and yay, we have Machine Learning.

Naive Bayes算法在垃圾邮件过滤中得到了广泛的应用。该机器计算垃圾邮件和普通邮件中提到“伟哥”的次数,然后使用贝叶斯方程乘以这两种概率,对结果求和,是的,我们有机器学习。
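To make the counting idea concrete, here is a minimal sketch of a Naive Bayes spam check. It makes several assumptions beyond the text: the six training messages are made up, add-one smoothing is used so unseen words do not zero out a score, and probabilities are combined as sums of logarithms (equivalent to multiplying them, but numerically safer).

```python
import math
from collections import Counter

# Tiny made-up training set (hypothetical messages, for illustration only).
spam = ["buy viagra now", "cheap viagra offer", "win money now"]
ham = ["meeting at noon", "lunch offer tomorrow", "project meeting now"]


def train(messages):
    """Count how often each word appears in one class of messages."""
    counts = Counter(word for msg in messages for word in msg.split())
    return counts, sum(counts.values())


spam_counts, spam_total = train(spam)
ham_counts, ham_total = train(ham)
vocabulary = set(spam_counts) | set(ham_counts)


def log_score(message, counts, total, prior):
    """log P(class) plus the sum of log P(word | class), with add-one smoothing."""
    score = math.log(prior)
    for word in message.split():
        score += math.log((counts[word] + 1) / (total + len(vocabulary)))
    return score


def is_spam(message):
    """Pick whichever class gives the message the higher score."""
    p_spam = len(spam) / (len(spam) + len(ham))
    spam_score = log_score(message, spam_counts, spam_total, p_spam)
    ham_score = log_score(message, ham_counts, ham_total, 1 - p_spam)
    return spam_score > ham_score


print(is_spam("viagra offer"), is_spam("project meeting"))
```

On this toy data "viagra offer" scores higher as spam and "project meeting" scores higher as normal mail. A real filter would train on thousands of messages and handle punctuation, casing, and unknown words more carefully.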

Later, spammers learned how to deal with Bayesian filters by adding lots of “good” words at the end of the email. Ironically, the method was called Bayesian poisoning. Naive Bayes went down in history as the most elegant and first practically useful one, but now other algorithms are used for spam filtering.

后来,垃圾邮件发送者通过在电子邮件末尾添加大量“好”字,学会了如何处理贝叶斯过滤器。具有讽刺意味的是,这种方法被称为贝叶斯中毒。Naive Bayes作为最优雅、最早实用的算法而载入史册,但现在其他算法也被用于垃圾邮件过滤。

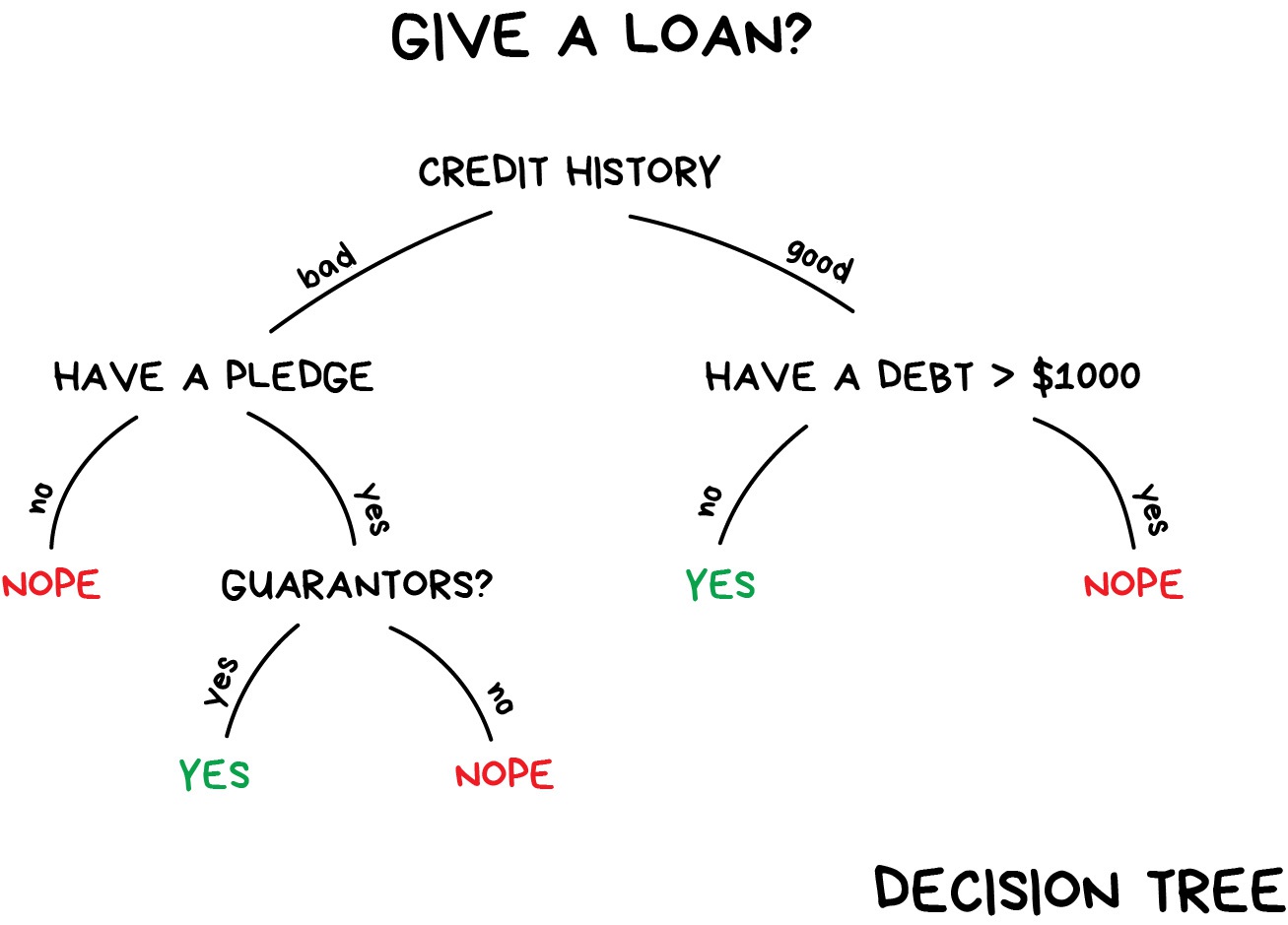

Here’s another practical example of classification. Let’s say you need some money on credit. How will the bank know if you’ll pay it back or not? There’s no way to know for sure. But the bank has lots of profiles of people who took money before. They have data about age, education, occupation and salary and – most importantly – the fact of paying the money back. Or not.

这是另一个实用的分类示例。比方说你需要一些贷款。银行怎么知道你是否会还钱?没有办法确定。但该银行有很多以前拿钱的人的档案。他们有关于年龄、教育、职业和工资的数据,最重要的是,还有还钱的事实。或者不。

Using this data, we can teach the machine to find the patterns and get the answer. There’s no issue with getting an answer. The issue is that the bank can’t blindly trust the machine’s answer. What if there’s a system failure, a hacker attack, or a quick fix from a drunk senior?

使用这些数据,我们可以教机器找到模式并得到答案。得到答案没有问题。问题是,银行不能盲目相信机器的答案。如果出现系统故障、黑客攻击或醉酒的学长快速修复,该怎么办。

To deal with it, we have Decision Trees. All the data is automatically divided into yes/no questions. They could sound a bit weird from a human perspective, e.g., whether the borrower earns more than $128.12? Though, the machine comes up with such questions to split the data best at each step.

为了解决这个问题,我们有决策树。所有数据自动划分为是/否问题。从人类的角度来看,这听起来可能有点奇怪,例如,债权人的收入是否超过128.12美元?不过,这台机器会提出这样的问题,以便在每一步都能最好地分割数据。
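Where do odd thresholds like $128.12 come from? One common way (used here as an assumption, since the text does not name a criterion) is to try a cut between every pair of neighbouring values and keep the one that leaves the purest groups, measured by Gini impurity. The incomes and labels below are hypothetical.

```python
def gini(labels):
    """Gini impurity of a list of 0/1 labels: 0 means the group is perfectly pure."""
    if not labels:
        return 0.0
    p = sum(labels) / len(labels)
    return 2 * p * (1 - p)


def best_threshold(values, labels):
    """Try a split between every pair of neighbouring values; keep the purest one."""
    pairs = sorted(zip(values, labels))
    best_impurity, best_cut = float("inf"), None
    for i in range(1, len(pairs)):
        if pairs[i - 1][0] == pairs[i][0]:
            continue  # no room for a cut between identical values
        cut = (pairs[i - 1][0] + pairs[i][0]) / 2
        left = [label for value, label in pairs if value <= cut]
        right = [label for value, label in pairs if value > cut]
        weighted = (len(left) * gini(left) + len(right) * gini(right)) / len(pairs)
        if weighted < best_impurity:
            best_impurity, best_cut = weighted, cut
    return best_cut


# Hypothetical incomes and whether the loan was repaid (1) or not (0).
incomes = [90.0, 110.5, 120.0, 136.24, 150.0, 200.0]
repaid = [0, 0, 0, 1, 1, 1]
print(best_threshold(incomes, repaid))
```

The cut lands halfway between 120.0 and 136.24, roughly 128.12: a number no human would pick, but the one that separates this toy data perfectly. A full tree repeats the same search inside each resulting group.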

That’s how a tree is made. The higher the branch — the broader the question. Any analyst can take it and explain afterward. He may not understand it, but explain easily! (typical analyst)

树就是这样造的。分支越高,问题就越广泛。任何分析师都可以接受并在事后解释。他可能听不懂,但很容易解释!(典型分析员)

Decision trees are widely used in high responsibility spheres: diagnostics, medicine, and finances.

决策树广泛应用于高责任领域:诊断、医学和金融。

The two most popular algorithms for forming the trees are CART and C4.5.

用于形成树的两种最流行的算法是CART和C4.5

Pure decision trees are rarely used today. However, they often set the basis for large systems, and their ensembles even work better than neural networks. We’ll talk about that later.

今天很少使用纯决策树。然而,它们通常为大型系统奠定基础,它们的集成甚至比神经网络工作得更好。我们稍后再谈。

When you google something, that’s precisely the bunch of dumb trees which are looking for a range of answers for you. Search engines love them because they’re fast.

当你在谷歌上搜索某个东西时,那正是一群愚蠢的树在为你寻找一系列答案。搜索引擎喜欢它们,因为它们很快。

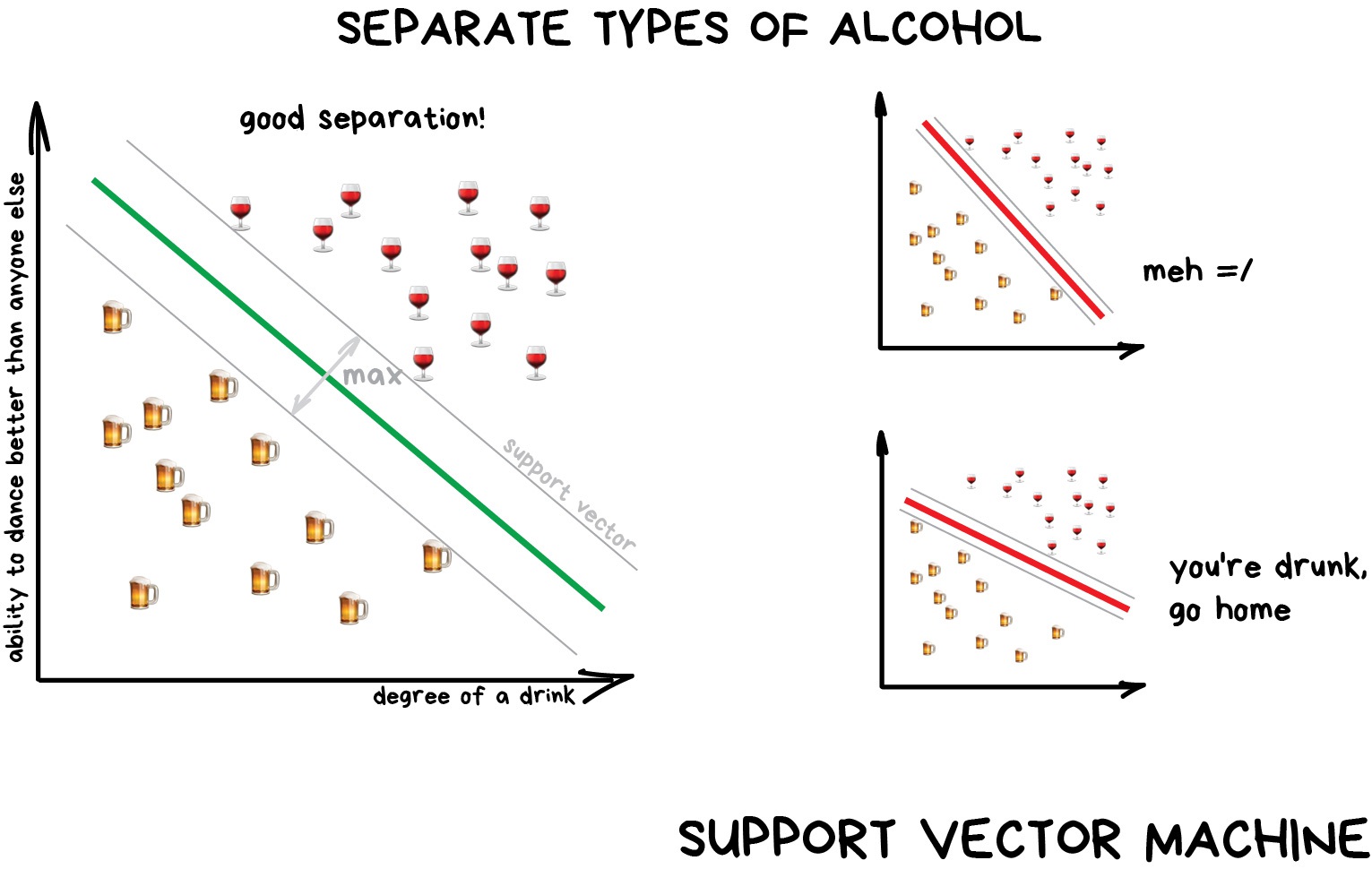

Support Vector Machines (SVM) is rightfully the most popular method of classical classification. It was used to classify everything in existence: plants by appearance in photos, documents by categories, etc.

支持向量机(SVM)是最流行的经典分类方法。它被用来对现存的一切进行分类:植物按照片中的外观分类,文件按类别分类等等。

The idea behind SVM is simple – it’s trying to draw two lines between your data points with the largest margin between them. Look at the picture:

SVM背后的想法很简单——它试图在数据点之间画两条线,并在它们之间留有最大的余量。请看图片:

There’s one very useful side of the classification — anomaly detection. When a feature does not fit any of the classes, we highlight it. Now that’s used in medicine — on MRIs, computers highlight all the suspicious areas or deviations of the test. Stock markets use it to detect abnormal behaviour of traders to find the insiders. When teaching the computer the right things, we automatically teach it what things are wrong.

分类有一个非常有用的方面——异常检测。当一个功能不适合任何类别时,我们会突出显示它。现在它被用于医学——在核磁共振成像上,计算机会突出显示测试的所有可疑区域或偏差。股票市场利用它来检测交易员的异常行为,以找到内部人士。当教计算机正确的东西时,我们会自动教它什么是错误的。

Today, neural networks are more frequently used for classification. Well, that’s what they were created for.

如今,神经网络更频繁地用于分类。好吧,这就是他们被创造的目的。

The rule of thumb is the more complex the data, the more complex the algorithm. For text, numbers, and tables, I’d choose the classical approach. The models are smaller there, they learn faster and work more clearly. For pictures, video and all other complicated big data things, I’d definitely look at neural networks.

经验法则是数据越复杂,算法就越复杂。对于文本、数字和表格,我会选择经典的方法。那里的模型更小,学习更快,工作更清晰。对于图片、视频和所有其他复杂的大数据,我肯定会研究神经网络。

Just five years ago you could find a face classifier built on SVM. Today it’s easier to choose from hundreds of pre-trained networks. Nothing has changed for spam filters, though. They are still written with SVM. And there’s no good reason to switch from it anywhere.

就在五年前,你还可以找到一个基于SVM的人脸分类器。如今,从数百个经过预训练的网络中进行选择变得更加容易。不过,垃圾邮件过滤器没有任何变化。它们仍然是用SVM编写的。而且没有充分的理由从任何地方切换。

Even my website has SVM-based spam detection in comments

甚至我的网站在评论中也有基于SVM的垃圾邮件检测

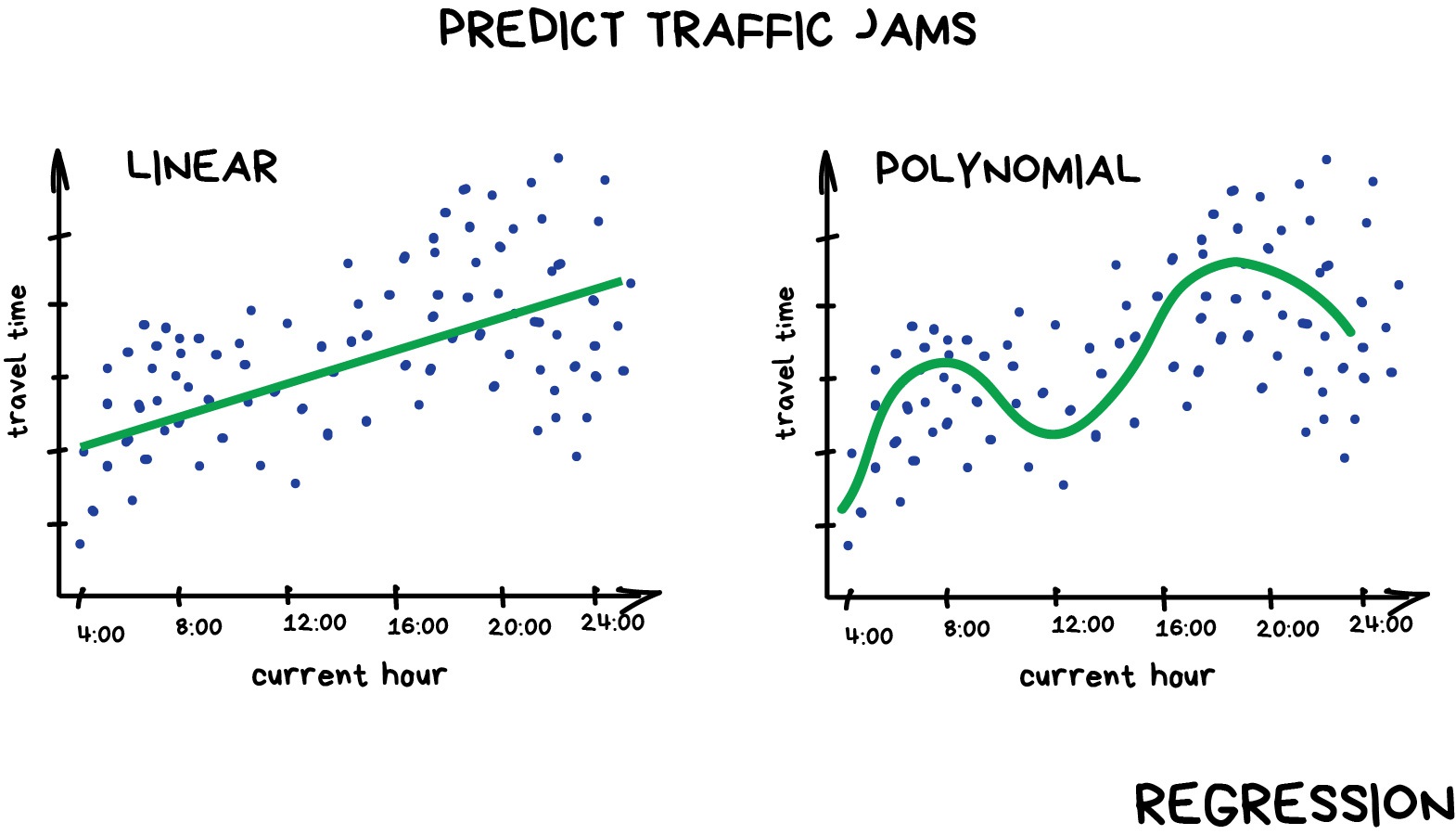

Regression 回归

“Draw a line through these dots. Yep, that’s the machine learning”

“在这些点之间划一条线。是的,这就是机器学习”

Today this is used for:

- Stock price forecasts 股票价格预测

- Demand and sales volume analysis 需求和销售量分析

- Medical diagnosis 医学诊断

- Any number-time correlations 任意数字时间相关性

Popular algorithms are Linear and Polynomial regressions.

常用的算法是线性回归和多项式回归。

Regression is basically classification where we forecast a number instead of a category. Examples are car price by its mileage, traffic by time of the day, demand volume by growth of the company etc. Regression is perfect when something depends on time.

回归基本上是分类,我们预测一个数字而不是类别。例如,按里程计算的汽车价格、按时间计算的交通量、按公司增长计算的需求量等。当某些事情取决于时间时,回归是完美的。

Everyone who works with finance and analysis loves regression. It’s even built into Excel. And it’s super smooth inside: the machine simply tries to draw a line that indicates average correlation. Though, unlike a person with a pen and a whiteboard, the machine does so with mathematical accuracy, calculating the average interval to every dot.

每个从事金融和分析工作的人都喜欢回归。它甚至内置在Excel中。它的内部非常平滑——机器只是试图画一条线来表示平均相关性。不过,和拿着笔和白板的人不同,这台机器能准确地计算出每个点的平均间隔。
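"Drawing the line with mathematical accuracy" is usually done with ordinary least squares, which picks the line minimizing the squared vertical distances to the dots. The sketch below assumes that method and reuses the car prices from Billy's story as toy data; `fit_line` is a name chosen for this illustration.

```python
def fit_line(xs, ys):
    """Ordinary least squares for y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    intercept = mean_y - slope * mean_x
    return slope, intercept


ages = [0, 1, 2, 3, 4]
prices = [20_000, 19_000, 18_000, 17_000, 16_000]
slope, intercept = fit_line(ages, prices)
print(slope, intercept)
```

On this perfectly linear data the fit recovers Billy's rule: a slope of -1000 per year and an intercept of 20000. With noisy real-world prices the same formula gives the best straight-line compromise.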

When the line is straight — it’s a linear regression, when it’s curved – polynomial. These are two major types of regression. The other ones are more exotic. Logistic regression is a black sheep in the flock. Don’t let it trick you, as it’s a classification method, not regression.

当直线是直线时——它是线性回归,当它是曲线时——多项式。这是两种主要的回归类型。其他的更具异国情调。逻辑回归是羊群中的害群之马。不要让它欺骗你,因为这是一种分类方法,而不是回归。

It’s okay to mix up regression and classification, though. Many classifiers turn into regression after some tuning. We can not only define the class of the object but also memorize how close it is to that class. Here comes a regression.

不过,搞砸回归和分类是可以的。许多分类器经过一些调整后会变成回归。我们不仅可以定义对象的类,还可以记住它的接近程度。

If you want to get deeper into this, check out this series: Machine Learning for Humans. I really love and recommend it!

如果你想更深入地了解这一点,请查看以下系列:面向人类的机器学习。我真的很喜欢并推荐它!

1.2 Unsupervised learning 1.2无监督学习

Unsupervised learning was invented a bit later, in the ‘90s. It is used less often, but sometimes we simply have no choice.

无监督是在90年代发明的。它的使用频率较低,但有时我们别无选择。

Labeled data is a luxury. But what if I want to create, let’s say, a bus classifier? Should I manually take photos of a million fucking buses on the streets and label each of them? No way, that will take a lifetime, and I still have so many games not played on my Steam account.

标记数据是一种奢侈。但是,如果我想创建一个总线分类器呢?我应该手动拍摄一百万辆他妈的公交车在街上的照片,并给每辆贴上标签吗?不可能,那将需要一辈子的时间,而且我的Steam帐户上还有很多游戏没有玩。

There’s a little hope for capitalism in this case. Thanks to social stratification, we have millions of cheap workers and services like Mechanical Turk who are ready to complete your task for $0.05. And that’s how things usually get done here.

在这种情况下,资本主义还有一点希望。由于社会分层,我们有数百万像机械土耳其人这样的廉价工人和服务,他们准备以0.05美元的价格完成您的任务。这里通常就是这样做的。

Or you can try to use unsupervised learning. I can’t remember any good practical application for it, though. It’s usually useful for exploratory data analysis but not as the main algorithm. A specially trained meatbag with an Oxford degree feeds the machine with a ton of garbage and watches it. Are there any clusters? No. Any visible relations? No. Well, continue then. You wanted to work in data science, right?

或者你可以尝试使用无监督学习。但我不记得它有什么好的实际应用。它通常用于探索性数据分析,但不是主要的算法。受过专门训练的牛津学位的肉包往机器里倒一吨垃圾,然后看着它。有集群吗?没有。有明显的关系吗?不,那就继续。你想从事数据科学工作,对吧?

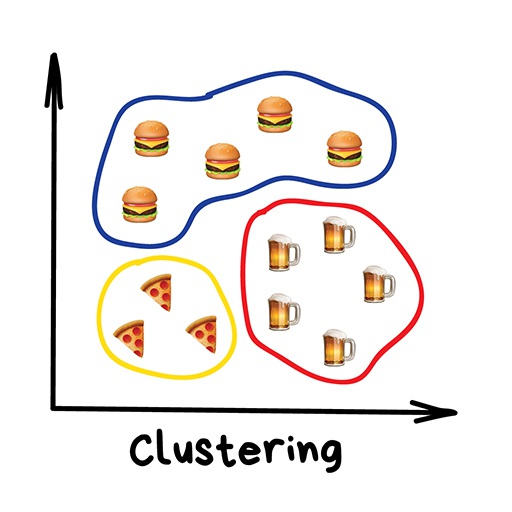

Clustering 聚类

“Divides objects based on unknown features. Machine chooses the best way”

“根据未知功能划分对象。机器选择最佳方式”

Nowadays used:

- For market segmentation (types of customers, loyalty) 针对市场细分(客户类型、忠诚度)

- To merge close points on a map 合并地图上的闭合点的步骤

- For image compression 用于图像压缩

- To analyze and label new data 分析和标记新数据

- To detect abnormal behavior 检测异常行为

Popular algorithms: K-means clustering, Mean-Shift, DBSCAN

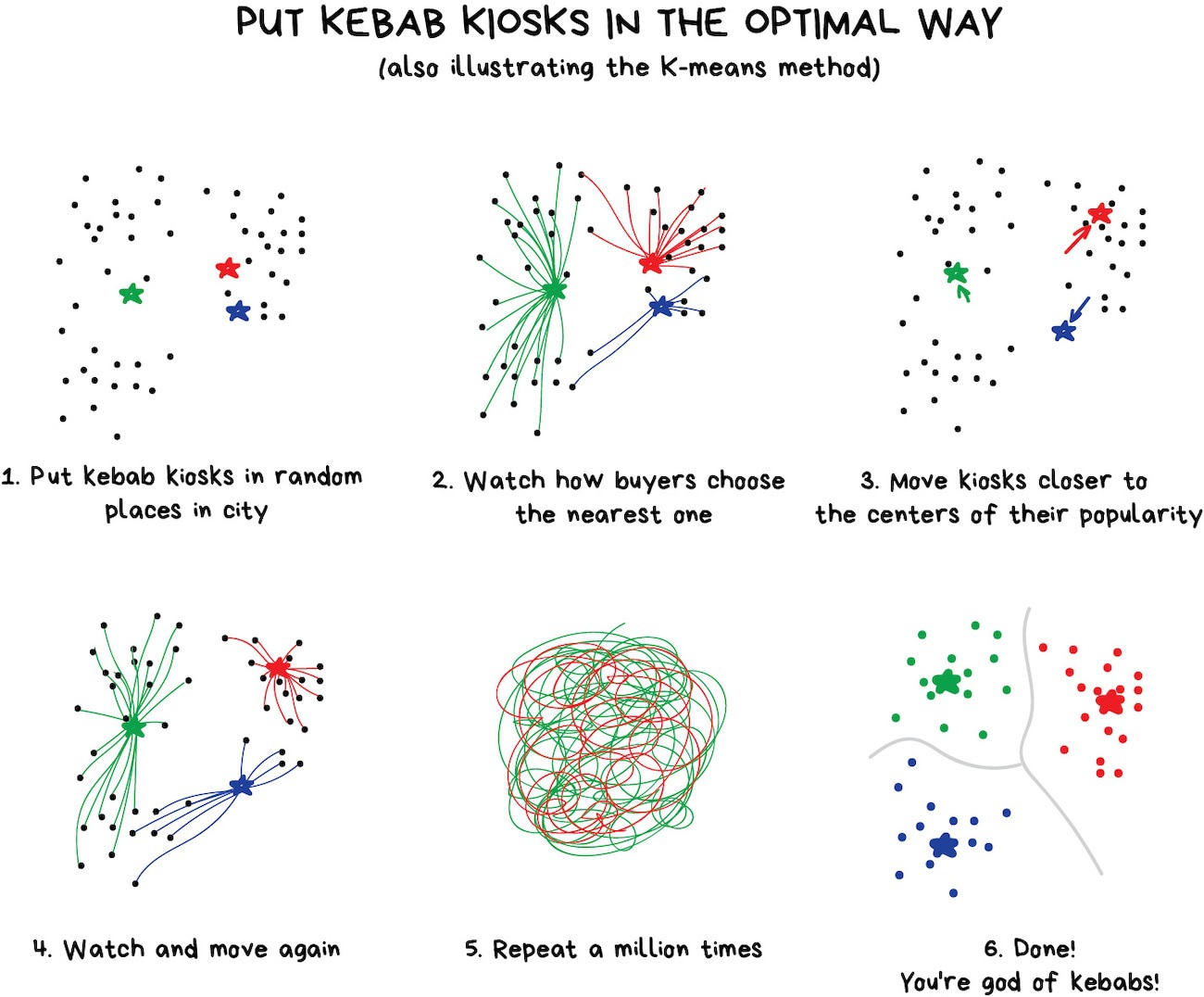

Clustering is a classification with no predefined classes. It’s like dividing socks by color when you don’t remember all the colors you have. A clustering algorithm tries to find objects that are similar (by some features) and merge them into a cluster. Those that have lots of similar features are joined in one class. With some algorithms, you can even specify the exact number of clusters you want.

聚类是一种没有预定义类的分类。这就像当你不记得所有的颜色时,按颜色划分袜子。聚类算法试图找到相似的(通过某些特征)对象并将它们合并到一个聚类中。那些有许多相似特征的人被加入到一个类中。使用某些算法,您甚至可以指定所需的簇的确切数量。

An excellent example of clustering — markers on web maps. When you’re looking for all vegan restaurants around, the clustering engine groups them to blobs with a number. Otherwise, your browser would freeze, trying to draw all three million vegan restaurants in that hipster downtown.

聚类的一个很好的例子——网络地图上的标记。当你在寻找周围所有的纯素食餐厅时,集群引擎会用一个数字将它们分组。否则,你的浏览器就会冻结,试图吸引市中心时髦人群中的300万家纯素食餐厅。

Apple Photos and Google Photos use more complex clustering. They’re looking for faces in photos to create albums of your friends. The app doesn’t know how many friends you have and how they look, but it’s trying to find the common facial features. Typical clustering.

苹果照片和谷歌照片使用更复杂的聚类。他们正在照片中查找人脸,以创建你朋友的相册。该应用程序不知道你有多少朋友,他们看起来怎么样,但它正在努力寻找常见的面部特征。典型的集群。

Another popular issue is image compression. When saving the image to PNG you can set the palette, let’s say, to 32 colors. It means clustering will find all the “reddish” pixels, calculate the “average red” and set it for all the red pixels. Fewer colors — lower file size — profit!

另一个流行的问题是图像压缩。将图像保存为PNG时,可以将调色板设置为32种颜色。这意味着聚类将找到所有“红色”像素,计算“平均红色”,并将其设置为所有红色像素。更少的颜色—更低的文件大小—利润!

However, you may have problems with cyan-like colors. Is it green or blue? Here comes the K-Means algorithm.

但是,您可能对青色等颜色有问题-比如颜色。它是绿色的还是蓝色的?K-Means算法来了。

It randomly sets 32 color dots in the palette. Now, those are centroids. The remaining points are marked as assigned to the nearest centroid. Thus, we get kind of galaxies around these 32 colors. Then we’re moving the centroid to the center of its galaxy and repeat that until centroids stop moving.

它在调色板中随机设置32个色点。现在,这些是质心。剩余的点被标记为已指定给最近的质心。因此,我们得到了围绕这32种颜色的星系。然后我们将质心移动到其星系的中心,并重复这一过程,直到质心停止移动。
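The loop described above (pick random centroids, assign points, move centroids, repeat) fits in a few lines. This is a minimal sketch with made-up pixel values and a palette of 2 colors instead of 32; the function names and the `seed` parameter are choices made for this illustration.

```python
import random


def nearest(point, centroids):
    """Index of the centroid closest to point (squared Euclidean distance)."""
    distances = [sum((a - b) ** 2 for a, b in zip(point, c)) for c in centroids]
    return distances.index(min(distances))


def kmeans(points, k, seed=0, max_iterations=100):
    """Return k centroids found by repeatedly assigning points and re-centering."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # random starting palette
    for _ in range(max_iterations):
        clusters = [[] for _ in range(k)]
        for point in points:  # every point joins its nearest centroid's "galaxy"
            clusters[nearest(point, centroids)].append(point)
        new_centroids = [
            tuple(sum(channel) / len(cluster) for channel in zip(*cluster))
            if cluster else centroids[i]  # keep an empty cluster's centroid in place
            for i, cluster in enumerate(clusters)
        ]
        if new_centroids == centroids:  # centroids stopped moving
            break
        centroids = new_centroids
    return centroids


# Hypothetical RGB pixels: three reddish and three bluish.
pixels = [(250, 10, 10), (240, 20, 15), (255, 0, 5),
          (10, 10, 250), (20, 15, 240), (0, 5, 255)]
palette = kmeans(pixels, k=2)
print(palette)
```

The two resulting centroids are the "average red" and the "average blue" of the toy image. Replacing each pixel with its nearest centroid is the palette compression described earlier.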

All done. Clusters defined, stable, and there are exactly 32 of them.

全部完成。集群是定义的,是稳定的,并且正好有32个集群。

Searching for the centroids is convenient. Though, in real life clusters are not always circles. Let’s imagine you’re a geologist. And you need to find some similar minerals on the map. In that case, the clusters can be weirdly shaped and even nested. Also, you don’t even know how many of them to expect. 10? 100?

搜索质心很方便。然而,在现实生活中,集群并不总是圆形的。让我们想象一下你是一名地质学家。你需要在地图上找到一些类似的矿物。在这种情况下,簇的形状可能很奇怪,甚至可以嵌套。此外,你甚至不知道他们中有多少值得期待。10?100?

K-means does not fit here, but DBSCAN can be helpful. Let’s say, our dots are people at the town square. Find any three people standing close to each other and ask them to hold hands. Then, tell them to start grabbing hands of those neighbors they can reach. And so on, and so on until no one else can take anyone’s hand. That’s our first cluster. Repeat the process until everyone is clustered. Done.

K-means不适合这里,但DBSCAN可能会有所帮助。比方说,我们的圆点是城镇广场上的人。找任意三个人站得很近,让他们手牵着手。然后,告诉他们开始与他们能接触到的邻居握手。等等,等等,直到没有人能握住任何人的手。这是我们的第一个集群。重复此过程,直到所有人都聚集在一起。完成。

A nice bonus: a person who has no one to hold hands with — is an anomaly.

一个很好的好处是:一个没有人可以牵手的人——是一种反常现象。
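The hand-holding procedure can be sketched as a simplified DBSCAN. The assumptions here: `eps` is how far a person can reach, `min_points` is the "any three people" rule (counting the person themselves), and the coordinates are invented for the example.

```python
def dbscan(points, eps, min_points):
    """Return one label per point: 0, 1, 2, ... for clusters, -1 for loners (noise)."""
    def neighbours(i):
        return [j for j, q in enumerate(points)
                if sum((a - b) ** 2 for a, b in zip(points[i], q)) <= eps ** 2]

    labels = [None] * len(points)  # None means "not visited yet"
    cluster = -1
    for i in range(len(points)):
        if labels[i] is not None:
            continue
        seeds = neighbours(i)
        if len(seeds) < min_points:  # nobody close enough to hold hands with
            labels[i] = -1
            continue
        cluster += 1
        labels[i] = cluster
        queue = [j for j in seeds if j != i]
        while queue:
            j = queue.pop()
            if labels[j] == -1:  # a former loner turns out to be on a cluster's edge
                labels[j] = cluster
            if labels[j] is not None:
                continue
            labels[j] = cluster
            more = neighbours(j)
            if len(more) >= min_points:  # j is in a dense spot: keep grabbing hands
                queue.extend(more)
    return labels


# Hypothetical positions on the town square: two groups and one loner.
people = [(0, 0), (0, 1), (1, 0), (10, 10), (10, 11), (11, 10), (50, 50)]
print(dbscan(people, eps=1.5, min_points=3))
```

The two groups get labels 0 and 1, and the person standing alone at (50, 50) gets -1, which is the anomaly mentioned above. Note that the number of clusters was never specified in advance.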

Interested in clustering? Check out this piece: The 5 Clustering Algorithms Data Scientists Need to Know

对集群感兴趣吗?看看这篇文章数据科学家需要知道的5种聚类算法

Just like classification, clustering could be used to detect anomalies. User behaves abnormally after signing up? Let the machine ban him temporarily and create a ticket for the support to check it. Maybe it’s a bot. We don’t even need to know what “normal behavior” is, we just upload all user actions to our model and let the machine decide if it’s a “typical” user or not.

就像分类一样,聚类也可以用来检测异常。用户注册后行为异常?让机器暂时禁止他,并创建一个票证供支持人员检查。也许这是一个机器人。我们甚至不需要知道什么是“正常行为”,我们只需将所有用户操作上传到我们的模型中,让机器决定它是否是“典型”用户。

This approach doesn’t work that well compared to the classification one, but it never hurts to try.

与分类方法相比,这种方法效果不太好,但尝试一下也不会有什么坏处。

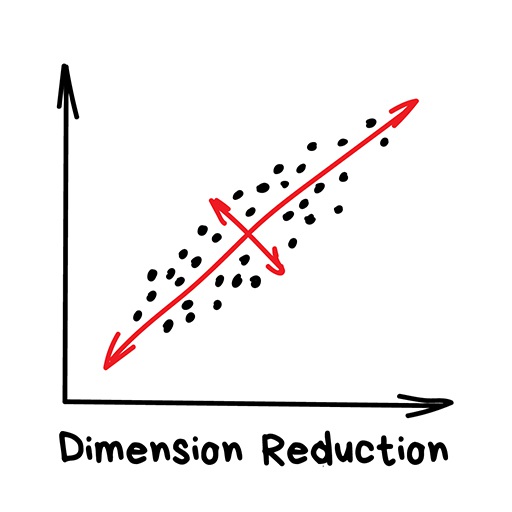

Dimensionality Reduction (Generalization) 降维(泛化)

“Assembles specific features into more high-level ones”

“将特定功能汇编成更高级的功能”

Nowadays used for:

- Recommender systems (★) 推荐系统

- Beautiful visualizations 美丽的可视化

- Topic modeling and similar document search 主题建模和类似文档搜索

- Fake image analysis 假图像分析

- Risk management 风险管理

Popular algorithms: Principal Component Analysis (PCA), Singular Value Decomposition (SVD), Latent Dirichlet allocation (LDA), Latent Semantic Analysis (LSA, pLSA, GLSA), t-SNE (for visualization)

流行算法:主成分分析(PCA)、奇异值分解(SVD)、潜在狄利克雷分配(LDA)、潜在语义分析(LSA、pLSA、GLSA)、t-SNE(用于可视化)

Previously these methods were used by hardcore data scientists, who had to find “something interesting” in huge piles of numbers. When Excel charts didn’t help, they forced machines to do the pattern-finding. That’s how they got Dimension Reduction or Feature Learning methods.

以前,这些方法是由核心数据科学家使用的,他们必须在大量数据中找到“有趣的东西”。当Excel图表没有帮助时,他们强迫机器进行模式查找。这就是他们获得降维或特征学习方法的原因。

Projecting 2D-data to a line (PCA)将2D数据投影到直线(PCA)

It is always more convenient for people to use abstractions, not a bunch of fragmented features. For example, we can merge all dogs with triangle ears, long noses, and big tails to a nice abstraction — “shepherd”. Yes, we’re losing some information about the specific shepherds, but the new abstraction is much more useful for naming and explaining purposes. As a bonus, such “abstracted” models learn faster, overfit less and use a lower number of features.

人们总是更方便地使用抽象,而不是一堆碎片化的特性。例如,我们可以将所有三角形耳朵、长鼻和大尾巴的狗合并为一个很好的抽象概念——“牧羊人”。是的,我们正在丢失一些关于特定牧羊人的信息,但新的抽象对于命名和解释目的更有用。额外的好处是,这种“抽象”模型学习速度更快,过拟合更少,使用的特征数量更少。
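Projecting 2D data onto a line, the simplest case of PCA, can be done without any library. The sketch below assumes the closed-form direction of the first principal component for a 2×2 covariance matrix; `pca_line` and the sample points are made up for this illustration.

```python
import math


def pca_line(points):
    """Return the main direction of 2D points and each point's 1D coordinate along it."""
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    # Entries of the 2x2 covariance matrix.
    cxx = sum((x - mean_x) ** 2 for x, _ in points) / n
    cyy = sum((y - mean_y) ** 2 for _, y in points) / n
    cxy = sum((x - mean_x) * (y - mean_y) for x, y in points) / n
    # Angle of the eigenvector with the largest eigenvalue (closed form for 2x2).
    angle = 0.5 * math.atan2(2 * cxy, cxx - cyy)
    direction = (math.cos(angle), math.sin(angle))
    projected = [(x - mean_x) * direction[0] + (y - mean_y) * direction[1]
                 for x, y in points]
    return direction, projected


points = [(1, 1), (2, 2), (3, 3), (4, 4)]
direction, projected = pca_line(points)
print(direction, projected)
```

Two features per point become one number per point, measured along the diagonal the data lies on. With real, noisy data some information is lost in the projection, which is the trade-off described above.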

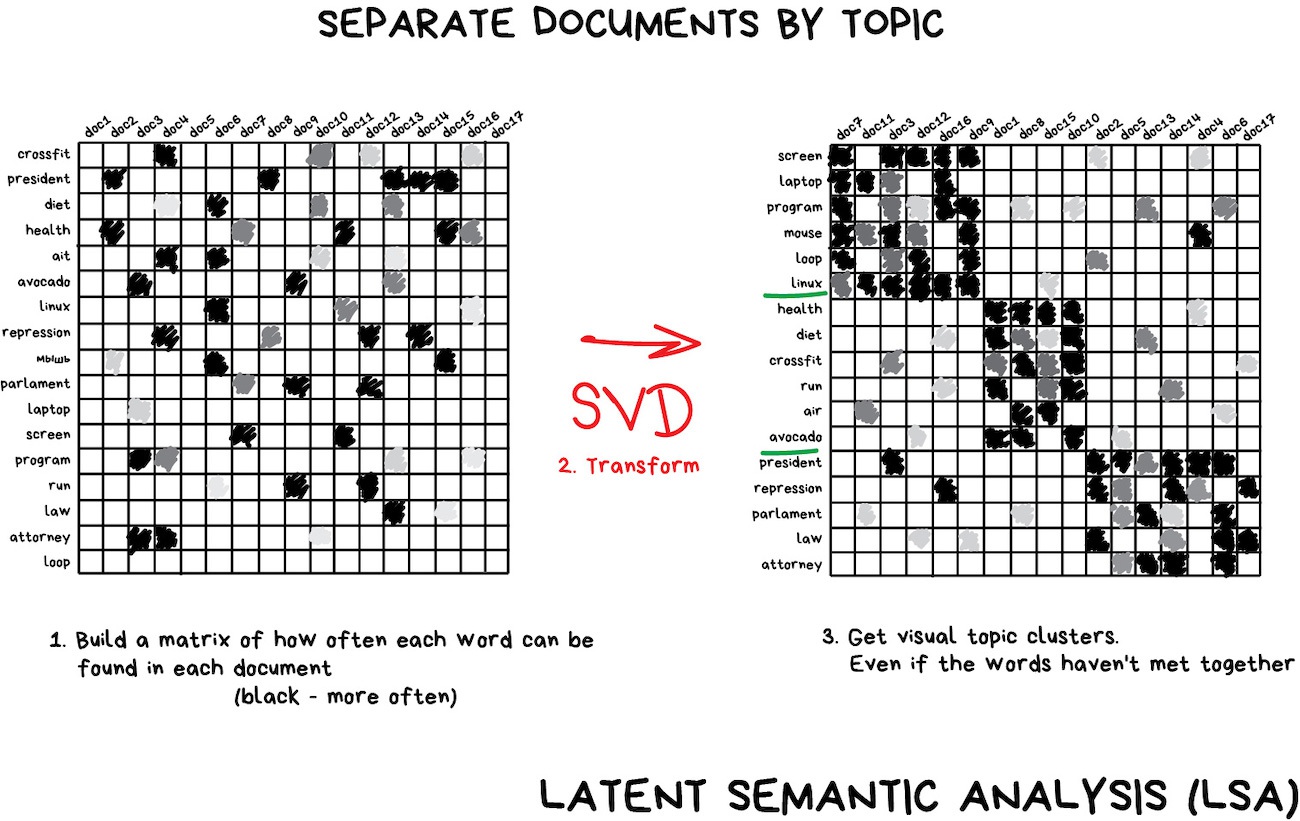

These algorithms became an amazing tool for Topic Modeling. We can abstract from specific words to their meanings. This is what Latent Semantic Analysis (LSA) does. It is based on how frequently you see a word within a given topic. For instance, there are more tech terms in tech articles, for sure. The names of politicians are mostly found in political news, etc.

这些算法成为主题建模的一个惊人工具。我们可以从特定的单词中抽象出它们的含义。这就是潜在语义分析(LSA)的作用。这取决于你在确切的主题上看到这个词的频率。比如,科技文章中肯定有更多的科技术语。政治家的名字大多出现在政治新闻等中。

Yes, we can just make clusters from all the words in the articles, but we will lose all the important connections (for example, the same meaning of battery and accumulator in different documents). LSA will handle it properly; that’s why it’s called “latent semantic”.

是的,我们可以根据文章中的所有单词进行聚类,但我们将失去所有重要的连接(例如,不同文档中电池和蓄电池的含义相同)。LSA会正确处理它,这就是为什么它被称为“潜在语义”。

So we need to connect the words and documents into one feature to keep these latent connections. It turns out that Singular Value Decomposition (SVD) nails this task, revealing useful topic clusters from seen-together words.

因此,我们需要将单词和文档连接到一个功能中,以保持这些潜在的连接——事实证明,奇异分解(SVD)固定了这项任务,从一起看到的单词中揭示了有用的主题集群。

Recommender Systems and Collaborative Filtering is another super-popular use of the dimensionality reduction method. Seems like if you use it to abstract user ratings, you get a great system to recommend movies, music, games and whatever you want.

推荐系统和协作过滤是降维方法的另一个非常流行的用途。似乎如果你用它来抽象用户评分,你会得到一个很好的系统来推荐电影、音乐、游戏和任何你想要的东西。

Here I can recommend my favorite book “Programming Collective Intelligence”. It was my bedside book while studying at university!

在这里我可以推荐我最喜欢的书“编程集体智能”。这是我在大学学习时的床边书!

It’s barely possible to fully understand this machine abstraction, but it’s possible to see some correlations on a closer look. Some of them correlate with user’s age — kids play Minecraft and watch cartoons more; others correlate with movie genre or user hobbies.

几乎不可能完全理解这种机器抽象,但可以仔细观察一些相关性。其中一些与用户的年龄有关——孩子们玩《我的世界》,看动画片的次数更多;其他则与电影类型或用户爱好相关。

Machines get these high-level concepts even without understanding them, based only on knowledge of user ratings. Nicely done, Mr.Computer. Now we can write a thesis on why bearded lumberjacks love My Little Pony.

机器甚至在不了解这些高级概念的情况下,仅基于用户评级的知识,就可以获得这些概念。干得好,电脑先生。现在我们可以写一篇关于为什么留胡子的伐木工喜欢我的小马的论文了。

Association rule learning 关联规则学习

“Look for patterns in the orders’ stream”

“在订单流中查找模式”

Nowadays used:

- To forecast sales and discounts 预测销售额和折扣

- To analyze goods bought together 分析一起购买的商品

- To place the products on the shelves 把产品放在货架上

- To analyze web surfing patterns 分析网络冲浪模式

Popular algorithms: Apriori, Eclat, FP-growth

流行算法:Apriori、Eclat、FP-growth

This includes all the methods to analyze shopping carts, automate marketing strategy, and other event-related tasks. When you have a sequence of something and want to find patterns in it — try these thingys.

这包括分析购物车、自动化营销策略和其他与事件相关的任务的所有方法。当你有一系列的东西并想在其中找到模式时,试试这些东西。

Say, a customer takes a six-pack of beers and goes to the checkout. Should we place peanuts on the way? How often do people buy them together? Yes, it probably works for beer and peanuts, but what other sequences can we predict? Can a small change in the arrangement of goods lead to a significant increase in profits?

比如说,一位顾客拿了六包啤酒去结账。我们应该在路上放花生吗?人们多久一起买一次?是的,它可能适用于啤酒和花生,但我们还能预测其他哪些序列?货物排列上的一个小变化能导致利润的显著增加吗?
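"How often do people buy them together?" is usually answered with two numbers, support and confidence. The sketch below shows only these metrics on five invented baskets; algorithms like Apriori exist to search for such rules efficiently across millions of orders, which this example does not attempt.

```python
# Hypothetical shopping baskets, one set of items per checkout.
baskets = [
    {"beer", "peanuts"},
    {"beer", "peanuts", "chips"},
    {"beer", "diapers"},
    {"milk", "bread"},
    {"beer", "peanuts", "milk"},
]


def count(items):
    """Number of baskets that contain every item in items."""
    return sum(items <= basket for basket in baskets)


def support(items):
    """Share of all baskets that contain every item in items."""
    return count(items) / len(baskets)


def confidence(if_items, then_items):
    """Of the baskets containing if_items, the share that also contain then_items."""
    return count(if_items | then_items) / count(if_items)


print(support({"beer", "peanuts"}))       # how common the pair is overall
print(confidence({"beer"}, {"peanuts"}))  # how often beer buyers also take peanuts
```

In this toy data the pair shows up in 3 of 5 baskets (support 0.6), and 3 of the 4 beer buyers also took peanuts (confidence 0.75), which would be an argument for putting peanuts on the way to the checkout.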

Same goes for e-commerce. The task is even more interesting there — what is the customer going to buy next time?

电子商务也是如此。这里的任务更有趣——顾客下次要买什么?

No idea why rule-learning seems to be the least elaborated upon category of machine learning. Classical methods are based on a head-on look through all the bought goods using trees or sets. Algorithms can only search for patterns, but cannot generalize or reproduce those on new examples.

不知道为什么规则学习似乎是机器学习中阐述最少的一类。传统的方法是基于使用树或集合对所有购买的商品进行正面查看。算法只能搜索模式,但不能在新的例子中推广或复制这些模式。

In the real world, every big retailer builds their own proprietary solution, so nooo revolutions here for you. The highest level of tech here is recommender systems. Though, I may not be aware of a breakthrough in the area. Let me know in the comments if you have something to share.

在现实世界中,每个大型零售商都会构建自己的专有解决方案,所以这里没有革命。这里的最高技术水平——推荐系统。不过,我可能不知道在这方面有什么突破。如果你有什么要分享的,请在评论中告诉我。

Part 2. Reinforcement Learning 第2部分。强化学习

“Throw a robot into a maze and let it find an exit”

“把一个机器人扔进迷宫,让它找到出口”

Nowadays used for:

- Self-driving cars 自动驾驶汽车

- Robot vacuums 机器人吸尘器

- Games 游戏

- Automating trading 自动化交易

- Enterprise resource management 企业资源管理

Popular algorithms: Q-Learning, SARSA, DQN, A3C, Genetic algorithm

热门算法:Q-Learning、SARSA、DQN、A3C、遗传算法

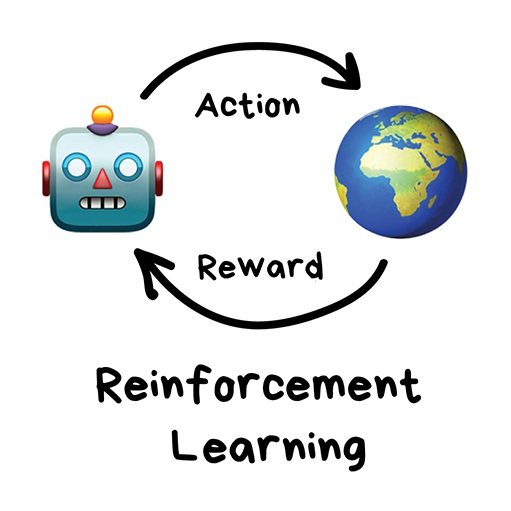

Finally, we get to something that looks like real artificial intelligence. In lots of articles reinforcement learning is placed somewhere in between supervised and unsupervised learning. They have nothing in common! Is this because of the name?

最后,我们看到了一些看起来像真正的人工智能的东西。在许多文章中,强化学习被置于有监督和无监督学习之间。他们没有共同点!这是因为这个名字吗?

Reinforcement learning is used in cases when your problem is not related to data at all, but you have an environment to live in. Like a video game world or a city for a self-driving car.

强化学习用于当你的问题与数据无关,但你有一个可以生活的环境时。比如电子游戏世界或自动驾驶汽车的城市。

Knowledge of all the road rules in the world will not teach the autopilot how to drive on the roads. Regardless of how much data we collect, we still can’t foresee all the possible situations. This is why its goal is to minimize error, not to predict all the moves.

了解世界上所有的道路规则并不能教会自动驾驶仪如何在道路上行驶。不管我们收集了多少数据,我们仍然无法预见所有可能的情况。这就是为什么它的目标是最大限度地减少误差,而不是预测所有的移动。

Surviving in an environment is a core idea of reinforcement learning. Throw a poor little robot into real life, punish it for errors and reward it for right deeds. The same way we teach our kids, right?

在环境中生存是强化学习的核心理念。把可怜的小机器人丢进现实生活中,惩罚它的错误,奖励它的正确行为。和我们教孩子的方式一样,对吧?

A more effective way here is to build a virtual city and let the self-driving car learn all its tricks there first. That’s exactly how we train auto-pilots right now. Create a virtual city based on a real map, populate it with pedestrians and let the car learn to kill as few people as possible. When the robot is reasonably confident in this artificial GTA, it’s freed to test in the real streets. Fun!

更有效的方法是建立一个虚拟城市,让自动驾驶汽车先在那里学习它的所有技巧。这正是我们现在训练自动驾驶的方式。在真实地图的基础上创建一个虚拟城市,挤满行人,让汽车学会尽可能少地杀人。当机器人对这种人造GTA相当有信心时,它就可以自由地在真实的街道上进行测试。有意思!

There may be two different approaches — Model-Based and Model-Free.

可能有两种不同的方法——基于模型和无模型。

Model-Based means that the car needs to memorize a map or its parts. That’s a pretty outdated approach since it’s impossible for the poor self-driving car to memorize the whole planet.

基于模型意味着汽车需要记住地图或其部件。这是一种相当过时的方法,因为糟糕的自动驾驶汽车不可能记住整个星球。

In Model-Free learning, the car doesn’t memorize every movement but tries to generalize situations and act rationally while obtaining a maximum reward.

在无模型学习中,汽车不会记住每一个动作,而是试图概括情况并理性行事,同时获得最大的奖励。

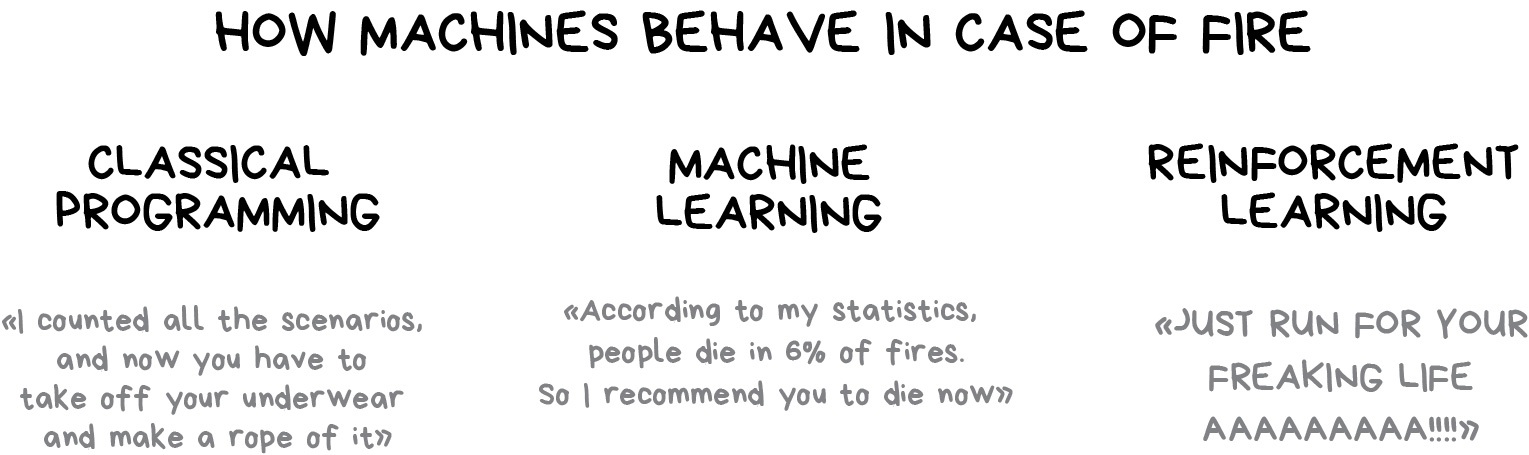

How do machines behave in case of fire?

Classical programming: I counted all the scenarios, and now you have to take off your underwear and make a rope out of it.

Machine learning: According to my statistics, people die in 6% of fires. So I recommend you die now.

Reinforcement learning: Just run for your freaking life, AAAA!!!

发生火灾时机器如何表现?

经典编程:我统计了所有的场景,现在你必须脱掉你的内衣,用它做一根绳子

机械学习:根据我的统计,6%的人死于火灾。所以我建议你现在就去死。

强化学习:为你该死的生活奔跑吧AAAA!!!

Remember the news about AI beating a top player at the game of Go? It happened even though, shortly before, it had been proved that the number of combinations in this game is greater than the number of atoms in the universe.

还记得人工智能在围棋比赛中击败顶尖棋手的新闻吗?尽管在此之前不久,已经证明了这个游戏中组合的数量大于宇宙中原子的数量。

This means the machine could not remember all the combinations and thereby win Go (as it did chess). At each turn, it simply chose the best move for each situation, and it did well enough to outplay a human meatbag.

这意味着机器无法记住所有组合,从而赢得围棋(就像下棋一样)。在每一个转弯处,它都会简单地为每种情况选择最好的动作,而且它做得足够好,胜过了人类的肉袋子。
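
A back-of-the-envelope check of why memorizing everything is hopeless. This is only a sketch: it counts every way to fill the board (each point empty, black or white), which is a loose upper bound rather than the number of legal positions, and the atom count is the commonly quoted rough estimate, taken here as an assumption.

```python
# Each of the 19 x 19 points is empty, black or white,
# so 3 ** 361 is a loose upper bound on board configurations.
board_points = 19 * 19
upper_bound = 3 ** board_points

# Commonly quoted rough estimate, used here as an assumption.
atoms_in_universe = 10 ** 80

print(len(str(upper_bound)))            # number of decimal digits in the bound
print(upper_bound > atoms_in_universe)
```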

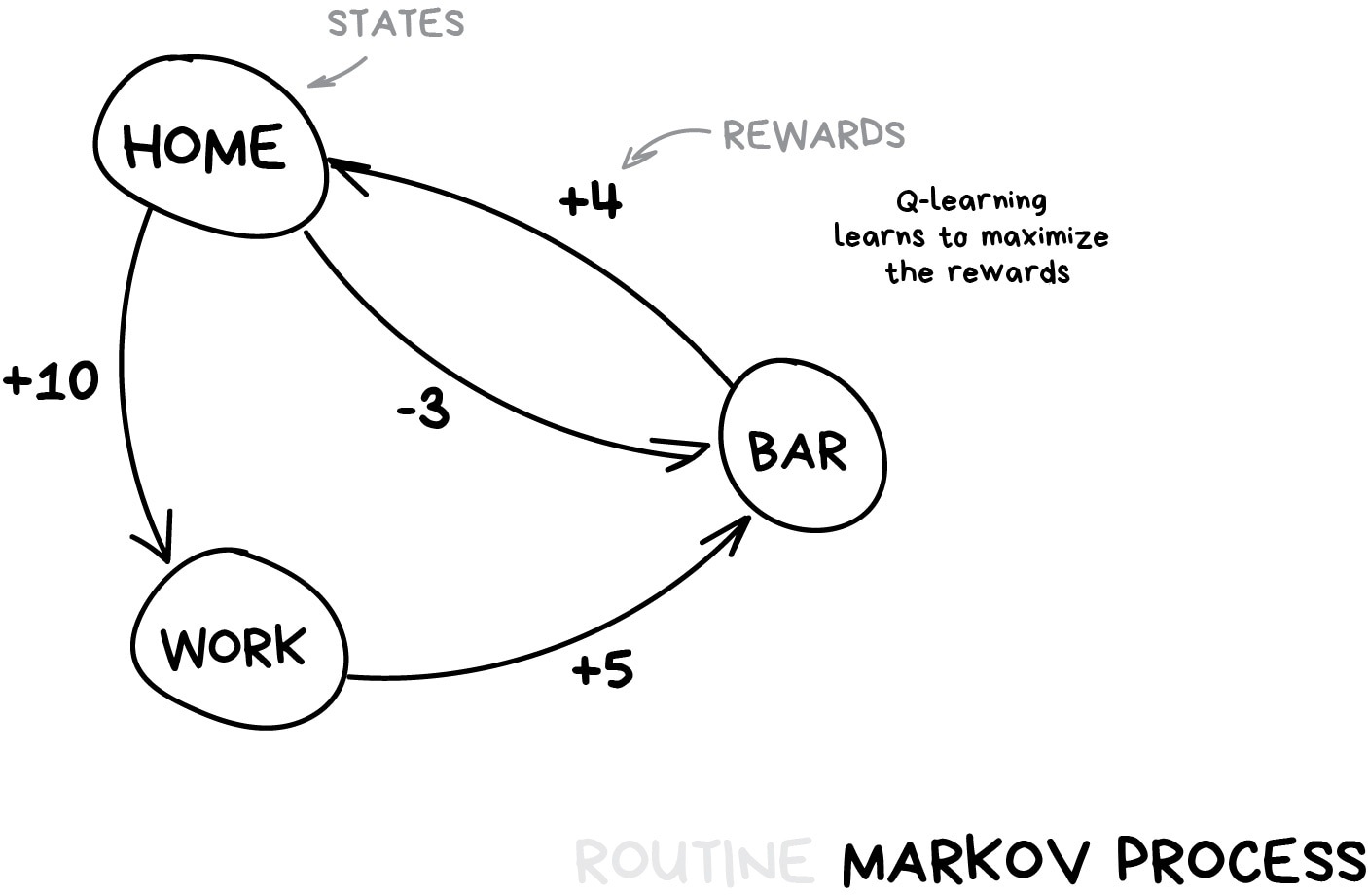

This approach is a core concept behind Q-learning and its derivatives (SARSA & DQN). The ‘Q’ in the name stands for “Quality”: a robot learns to perform the most “qualitative” action in each situation, and all the situations are memorized as a simple Markov process.

这种方法是Q学习及其衍生物(SARSA和DQN)背后的核心概念。”名称中的Q代表“质量”,因为机器人学会在每种情况下执行最“定性”的动作,所有情况都被记忆为一个简单的马尔可夫过程。
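
Here is roughly what that looks like in code: a minimal tabular Q-learning sketch, assuming a toy "maze" that is just a corridor of five cells with the exit on the right. The corridor, the reward of 1 at the exit and the hyperparameters are all made up for illustration.

```python
import random

N_STATES = 5          # corridor cells 0..4; the exit is cell 4
ACTIONS = (-1, +1)    # step left or step right
ALPHA, GAMMA, EPSILON = 0.5, 0.9, 0.2   # hypothetical hyperparameters

def step(state, action):
    """Move along the corridor; reward 1 only when the exit is reached."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    done = next_state == N_STATES - 1
    return next_state, (1.0 if done else 0.0), done

def train(episodes=500, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]   # q[state][action index]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Explore sometimes (and whenever both actions look equal), otherwise exploit.
            if rng.random() < EPSILON or q[state][0] == q[state][1]:
                a = rng.randrange(2)
            else:
                a = 0 if q[state][0] > q[state][1] else 1
            next_state, reward, done = step(state, ACTIONS[a])
            # Q-learning update: nudge the estimate toward
            # reward + discounted value of the best next action.
            target = reward + (0.0 if done else GAMMA * max(q[next_state]))
            q[state][a] += ALPHA * (target - q[state][a])
            state = next_state
    return q

q_table = train()
policy = ["left" if left > right else "right" for left, right in q_table[:-1]]
print(policy)
```

The table only works because there are five situations to remember. Swap the corridor for a city and the table no longer fits anywhere.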

Such a machine can test billions of situations in a virtual environment, remembering which solutions led to greater reward. But how can it distinguish previously seen situations from a completely new one? If a self-driving car is at a road crossing and the traffic light turns green — does it mean it can go now? What if there’s an ambulance rushing through a street nearby?

这样的机器可以在虚拟环境中测试数十亿种情况,记住哪些解决方案会带来更大的回报。但是,它如何区分以前看到的情况和全新的情况呢?如果一辆自动驾驶汽车在十字路口,红绿灯变绿,这是否意味着它现在可以行驶?如果有一辆救护车在附近的街道上飞驰怎么办?

The answer today is “no one knows”. There’s no easy answer. Researchers are constantly searching for it but meanwhile only finding workarounds. Some would hardcode all the situations manually, which lets them solve exceptional cases, like the trolley problem. Others would go deep and let neural networks do the job of figuring it out. This led us to the evolution of Q-learning called Deep Q-Network (DQN). But they are not a silver bullet either.

今天的答案是“没人知道”。没有简单的答案。研究人员一直在寻找它,但同时只找到变通办法。有些人会手动对所有情况进行硬编码,以解决特殊情况,如手推车问题。其他人会深入研究,让神经网络来解决这个问题。这导致了Q学习的发展,称为深度Q网络(DQN)。但它们也不是灵丹妙药。

Reinforcement learning looks like real artificial intelligence to an average person, because it makes you think: wow, this machine is making decisions in real-life situations! This topic is hyped right now, it’s advancing at an incredible pace and intersecting with neural networks to clean your floor more accurately. Amazing world of technologies!

强化学习对于一个普通人来说就像一个真正的人工智能。因为它让你觉得哇,这台机器是在现实生活中做出决定的!这个话题现在被炒得沸沸扬扬,它以令人难以置信的速度前进,并与神经网络交叉,更准确地清洁你的地板。技术的奇妙世界!

Off-topic. When I was a student, genetic algorithms (link has cool visualization) were really popular. This is about throwing a bunch of robots into a single environment and making them try to reach the goal until they die. Then we pick the best ones, cross them, mutate some genes and rerun the simulation. After a few billion years, we will get an intelligent creature. Probably. Evolution at its finest.

脱离主题。当我还是一个学生的时候,遗传算法(链接有很酷的可视化)真的很流行。这是关于把一群机器人扔到一个单一的环境中,让它们尝试达到目标,直到死亡。然后我们挑选最好的,将它们交叉,使一些基因发生突变,然后重新运行模拟。几百万年后,我们将得到一个聪明的生物。可能进化到了极致。
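
For the curious, the whole "pick the best, cross them, mutate, rerun" loop fits in a few lines. This is a toy sketch, not anyone's production method: the "robots" are bit-strings, the goal is simply to collect as many 1s as possible, and every constant below is a made-up assumption.

```python
import random

GENES = 20             # length of each bit-string "genome"
POPULATION = 30
GENERATIONS = 40
MUTATION_RATE = 0.02   # chance of flipping each gene

def fitness(genome):
    """Toy goal: as many 1s as possible."""
    return sum(genome)

def crossover(mum, dad, rng):
    """Child takes the head of one parent and the tail of the other."""
    cut = rng.randrange(1, GENES)
    return mum[:cut] + dad[cut:]

def mutate(genome, rng):
    return [1 - g if rng.random() < MUTATION_RATE else g for g in genome]

def evolve(seed=0):
    rng = random.Random(seed)
    population = [[rng.randint(0, 1) for _ in range(GENES)] for _ in range(POPULATION)]
    history = [max(map(fitness, population))]
    for _ in range(GENERATIONS):
        # Pick the best half; the rest "die".
        survivors = sorted(population, key=fitness, reverse=True)[: POPULATION // 2]
        children = [
            mutate(crossover(rng.choice(survivors), rng.choice(survivors), rng), rng)
            for _ in range(POPULATION - len(survivors))
        ]
        population = survivors + children   # survivors are kept unchanged
        history.append(max(map(fitness, population)))
    return history

history = evolve()
print(history[0], history[-1])   # best fitness at the start and at the end
```

Because the survivors are carried over untouched, the best score can never go down from one generation to the next.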

Genetic algorithms are considered as part of reinforcement learning and they have the most important feature proved by decade-long practice: no one gives a shit about them.

遗传算法被认为是强化学习的一部分,经过十年的实践证明,它们有一个最重要的特点:没有人在乎它们。

Humanity still couldn’t come up with a task where those would be more effective than other methods. But they are great for student experiments and let people get their university supervisors excited about “artificial intelligence” without too much labour. And youtube would love it as well.

人类仍然无法想出一个比其他方法更有效的任务。但它们非常适合学生实验,让人们不用太多劳动就能让大学主管对“人工智能”感到兴奋。youtube也会喜欢的。

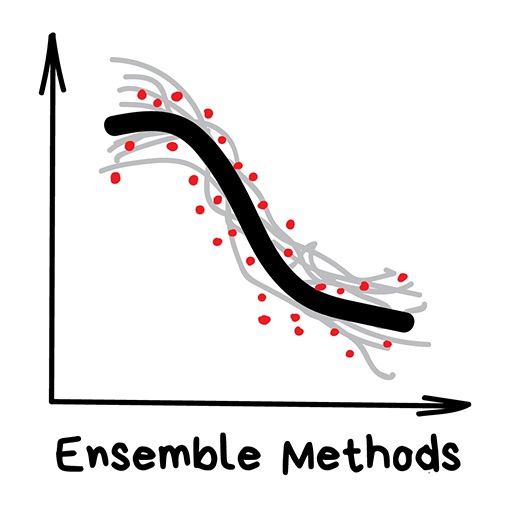

Part 3. Ensemble Methods 第3部分。集成方法

“Bunch of stupid trees learning to correct errors of each other”

“一群愚蠢的树学会互相纠正错误”

Nowadays used for:

- Everything that fits classical algorithm approaches (but works better) 所有符合经典算法方法的东西(但效果更好)

- Search systems (★) 搜索系统

- Computer vision 计算机视觉

- Object detection 物体检测

Popular algorithms: Random Forest, Gradient Boosting

流行算法:随机森林,梯度增强

It’s time for modern, grown-up methods. Ensembles and neural networks are two main fighters paving our path to a singularity. Today they are producing the most accurate results and are widely used in production.

是时候采用现代、成熟的方法了。集合和神经网络是为我们通往奇点铺平道路的两个主要斗士。如今,它们正在产生最准确的结果,并在生产中广泛使用。

However, the neural networks got all the hype today, while the words like “boosting” or “bagging” are scarce hipsters on TechCrunch.

然而,神经网络今天得到了所有的炒作,而像“助推”或“装袋”这样的词在TechCrunch上很少出现。

Despite all their effectiveness, the idea behind them is almost too simple. If you take a bunch of inefficient algorithms and force them to correct each other’s mistakes, the overall quality of the system will be higher than that of even the best individual algorithm.

尽管如此,这些背后的想法还是过于简单。如果你采用一堆低效的算法,强迫它们互相纠正错误,那么系统的整体质量甚至会高于最好的单个算法。

You’ll get even better results if you take the most unstable algorithms, the ones that predict completely different results on small noise in the input data. Like Regression and Decision Trees. These algorithms are so sensitive to even a single outlier in the input data that the models go mad.

如果你采用最不稳定的算法,在输入数据中的小噪声上预测完全不同的结果,你会得到更好的结果。比如回归树和决策树。这些算法对输入数据中的单个异常值都非常敏感,以至于模型都会发疯。

In fact, this is what we need.

事实上,这正是我们所需要的。

We can use any algorithm we know to create an ensemble. Just throw in a bunch of classifiers, spice it up with regression and don’t forget to measure accuracy. From my experience: don’t even try a Bayes or kNN here. Although “dumb”, they are really stable. That’s boring and predictable. Like your ex.

我们可以使用我们所知道的任何算法来创建集合。只需抛出一堆分类器,用回归来增加趣味性,别忘了测量准确性。根据我的经验:甚至不要在这里尝试贝叶斯或kNN。虽然“笨”,但他们确实很稳定。这很无聊,而且是可以预测的。就像你的前任。

Instead, there are three battle-tested methods to create ensembles.

相反,有三种经过战斗考验的方法来创建乐团。

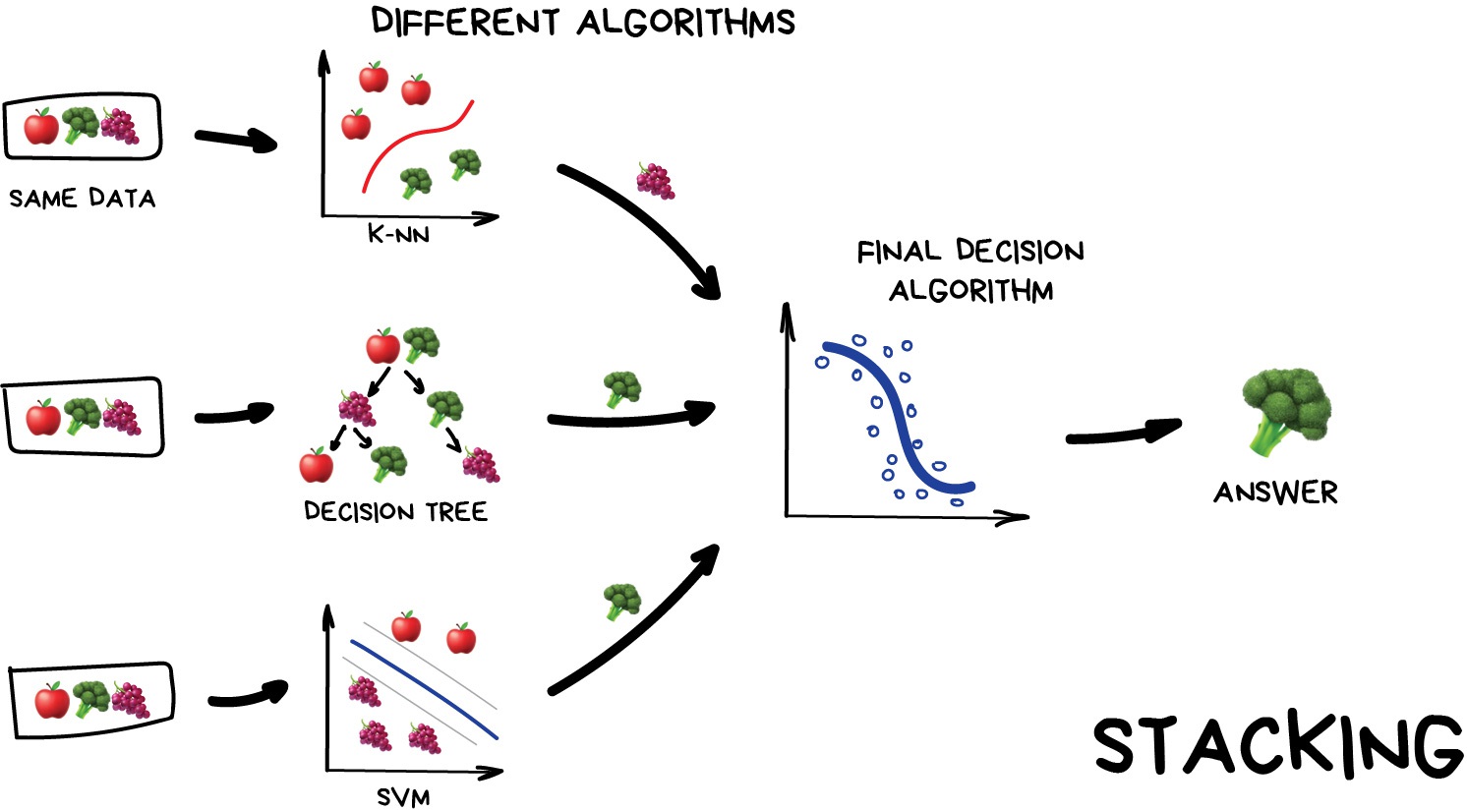

Stacking. The output of several different parallel models is passed as input to the last one, which makes a final decision. Like that girl who asks her girlfriends whether to meet with you in order to make the final decision herself.

几个并行模型的叠加输出作为输入传递给最后一个模型,后者做出最终决定。就像那个女孩问她的女朋友是否和你见面,以便自己做出最终决定。

Emphasis here on the word “different”. Mixing the same algorithms on the same data would make no sense. The choice of algorithms is completely up to you. However, for final decision-making model, regression is usually a good choice.

这里强调“不同”这个词。在相同的数据上混合使用相同的算法是没有意义的。算法的选择完全取决于你。然而,对于最终的决策模型,回归通常是一个不错的选择。
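
A minimal sketch of stacking, under loud assumptions: the data is made up, the two base models are one-feature threshold rules that differ in which feature they look at, and the final model is a simple lookup table over the base answers rather than the regression mentioned above. Real stacking would also train the final model on held-out predictions instead of reusing the training set.

```python
from collections import Counter, defaultdict

# Toy data, made up for illustration: the label is 1 only when both features are high.
X = [(0.1, 0.2), (0.2, 0.9), (0.9, 0.1), (0.8, 0.8),
     (0.3, 0.4), (0.7, 0.9), (0.9, 0.3), (0.1, 0.7)]
y = [0, 0, 0, 1, 0, 1, 0, 0]

def train_stump(X, y, feature):
    """Base model: the cut-off on one feature that misclassifies the fewest points."""
    errors, threshold = min(
        (sum((x[feature] > t) != label for x, label in zip(X, y)), t)
        for t in sorted({x[feature] for x in X})
    )
    return lambda x: int(x[feature] > threshold)

def train_meta(base_outputs, y):
    """Final model: for each combination of base answers, remember the majority label."""
    votes = defaultdict(Counter)
    for outputs, label in zip(base_outputs, y):
        votes[outputs][label] += 1
    table = {outputs: counts.most_common(1)[0][0] for outputs, counts in votes.items()}
    return lambda outputs: table.get(outputs, 0)

base_models = [train_stump(X, y, feature=0), train_stump(X, y, feature=1)]
base_outputs = [tuple(model(x) for model in base_models) for x in X]
meta = train_meta(base_outputs, y)

def stacked_predict(x):
    return meta(tuple(model(x) for model in base_models))

def accuracy(predict):
    return sum(predict(x) == label for x, label in zip(X, y)) / len(y)

print([accuracy(model) for model in base_models], accuracy(stacked_predict))
```

Each base model alone gets some points wrong; the final model learns that the answer is 1 only when both of them say so.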

In my experience, stacking is less popular in practice, because the two other methods give better accuracy.

根据我的经验,堆叠在实践中不太受欢迎,因为另外两种方法提供了更好的准确性。

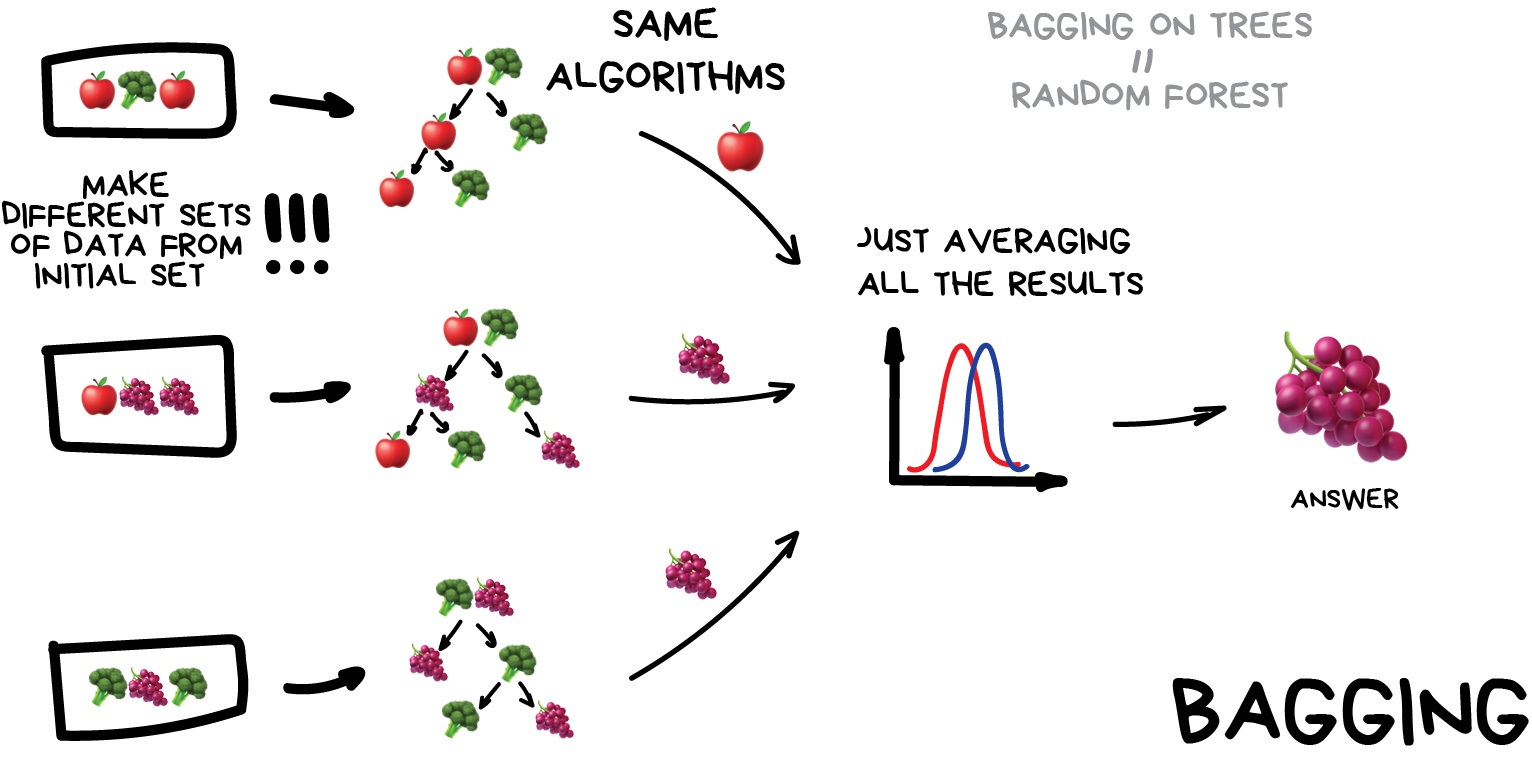

Bagging aka Bootstrap AGGregatING. Use the same algorithm but train it on different subsets of original data. In the end — just average answers.

装袋又称Bootstrap聚集。使用相同的算法,但在原始数据的不同子集上进行训练。最后——只是一般的答案。

Data in random subsets may repeat. For example, from a set like “1-2-3” we can get subsets like “2-2-3”, “1-2-2”, “3-1-2” and so on. We use these new datasets to teach the same algorithm several times and then predict the final answer via simple majority voting.

随机子集中的数据可能重复。例如,从“1-2-3”这样的集合中,我们可以得到“2-2-3”、“1-2-2”、“3-1-2”等子集。我们使用这些新的数据集多次教授相同的算法,然后通过简单多数投票预测最终答案。
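
The resample-and-vote idea can be sketched like this. The weak learner is a one-threshold rule standing in for a decision tree, and the data, the number of models and the seeds are assumptions for illustration only.

```python
import random
from collections import Counter

def bootstrap(data, rng):
    """Draw len(data) items with replacement, so some repeat and some are left out."""
    return rng.choices(data, k=len(data))

def train_stump(sample):
    """Weak learner: the cut-off on x that misclassifies the fewest points of the sample."""
    errors, threshold = min(
        (sum((x > t) != label for x, label in sample), t) for t, _ in sample
    )
    return lambda x: int(x > threshold)

def bagging(data, n_models=25, seed=0):
    rng = random.Random(seed)
    # Same algorithm every time, but each copy sees its own resampled dataset.
    models = [train_stump(bootstrap(data, rng)) for _ in range(n_models)]
    def predict(x):
        votes = Counter(model(x) for model in models)
        return votes.most_common(1)[0][0]   # simple majority vote
    return predict

print(bootstrap([1, 2, 3], random.Random(1)))   # three draws, repeats allowed

# Toy 1-D data as (x, label) pairs, made up for illustration.
data = [(0.1, 0), (0.2, 0), (0.3, 0), (0.4, 0), (0.45, 0),
        (0.55, 1), (0.6, 1), (0.7, 1), (0.8, 1), (0.9, 1)]
predict = bagging(data)
print(predict(0.05), predict(0.95))
```

Since every model trains independently of the others, the list comprehension inside `bagging` is exactly the part that can run in parallel.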

The most famous example of bagging is the Random Forest algorithm, which is simply bagging on decision trees (which were illustrated above). When you open your phone’s camera app and see it drawing boxes around people’s faces, it’s probably the result of Random Forest at work. Neural networks would be too slow to run in real time, yet bagging is ideal given it can calculate trees on all the shaders of a video card or on these new fancy ML processors.

装袋最著名的例子是随机森林算法,它只是在决策树上装袋(如上所述)。当你打开手机的相机应用程序,看到它在人们的脸上画方框时,这可能是随机森林工作的结果。神经网络运行速度太慢,无法实时运行,但考虑到它可以在视频卡的所有着色器或这些新的高级ML处理器上计算树,因此装袋是理想的。

In some tasks, the ability of the Random Forest to run in parallel is more important than a small loss in accuracy compared to, for example, boosting. Especially in real-time processing. There is always a trade-off.

例如,在某些任务中,随机森林并行运行的能力比助推精度的小损失更重要。尤其是在实时处理中。总有一种权衡。

Boosting. Algorithms are trained one by one, sequentially. Each subsequent one pays most of its attention to data points that were mispredicted by the previous one. Repeat until you are happy.

Boosting算法是按顺序逐个训练的。随后的每一个都将大部分注意力放在前一个预测错误的数据点上。重复,直到你感到高兴。

Same as in bagging, we use subsets of our data but this time they are not randomly generated. Now, in each subsample we take a part of the data the previous algorithm failed to process. Thus, we make a new algorithm learn to fix the errors of the previous one.

与装袋一样,我们使用数据的子集,但这次它们不是随机生成的。现在,在每个子样本中,我们获取先前算法未能处理的部分数据。因此,我们使一个新的算法学习修复前一个算法的错误。
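
One well-known way to do this is AdaBoost, sketched below. Note the swap: instead of literally cutting out a subsample, AdaBoost keeps all the points and makes the mispredicted ones heavier, which has the same "focus on past mistakes" effect. The toy data and function names are assumptions for illustration.

```python
import math

# Toy 1-D data that no single cut-off can separate; labels are +1 / -1.
xs = list(range(10))
ys = [1, 1, 1, -1, -1, -1, 1, 1, 1, -1]

def stump_predict(x, threshold, polarity):
    """Weak learner: one cut-off; `polarity` is the answer on the left side."""
    return polarity if x < threshold else -polarity

def best_stump(weights):
    """Pick the cut-off with the lowest weighted error."""
    best = None
    for threshold in [x + 0.5 for x in xs[:-1]]:
        for polarity in (1, -1):
            error = sum(w for x, y, w in zip(xs, ys, weights)
                        if stump_predict(x, threshold, polarity) != y)
            if best is None or error < best[0]:
                best = (error, threshold, polarity)
    return best

def adaboost(rounds):
    weights = [1 / len(xs)] * len(xs)
    ensemble = []                                     # (alpha, threshold, polarity)
    for _ in range(rounds):
        error, threshold, polarity = best_stump(weights)
        error = max(error, 1e-10)                     # avoid division by zero
        alpha = 0.5 * math.log((1 - error) / error)   # this stump's say in the final vote
        ensemble.append((alpha, threshold, polarity))
        # Mispredicted points get heavier, correctly predicted ones lighter.
        weights = [w * math.exp(-alpha * y * stump_predict(x, threshold, polarity))
                   for x, y, w in zip(xs, ys, weights)]
        total = sum(weights)
        weights = [w / total for w in weights]
    return ensemble

def predict(ensemble, x):
    score = sum(alpha * stump_predict(x, t, p) for alpha, t, p in ensemble)
    return 1 if score > 0 else -1

def training_errors(ensemble):
    return sum(predict(ensemble, x) != y for x, y in zip(xs, ys))

print(training_errors(adaboost(1)), training_errors(adaboost(3)))
```

Each round depends on the weights left by the previous one, which is why this loop cannot be parallelized the way bagging can.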

The main advantage here — a very high, even illegal in some countries precision of classification that all cool kids can envy. The cons were already called out — it doesn’t parallelize. But it’s still faster than neural networks. It’s like a race between a dump truck and a racecar. The truck can do more, but if you want to go fast — take a car.

这里的主要优势是——分类精度非常高,在一些国家甚至是非法的,所有酷孩子都会羡慕。缺点已经被调用了——它没有并行化。但它仍然比神经网络更快。这就像一场自卸车和赛车之间的比赛。卡车可以做得更多,但如果你想走得快,那就开车吧。

If you want a real example of boosting — open Facebook or Google and start typing in a search query. Can you hear an army of trees roaring and smashing together to sort results by relevancy? That’s because they are using boosting.

如果你想要一个真正的提升示例——打开Facebook或谷歌,开始输入搜索查询。你能听到一大群树咆哮着砸在一起,根据相关性对结果进行排序吗?这是因为他们在使用助推。

Nowadays there are three popular tools for boosting; you can read a comparative report in CatBoost vs. LightGBM vs. XGBoost.

现在有三种流行的助推工具,你可以阅读CatBoost与LightGBM与XGBoost的比较报告

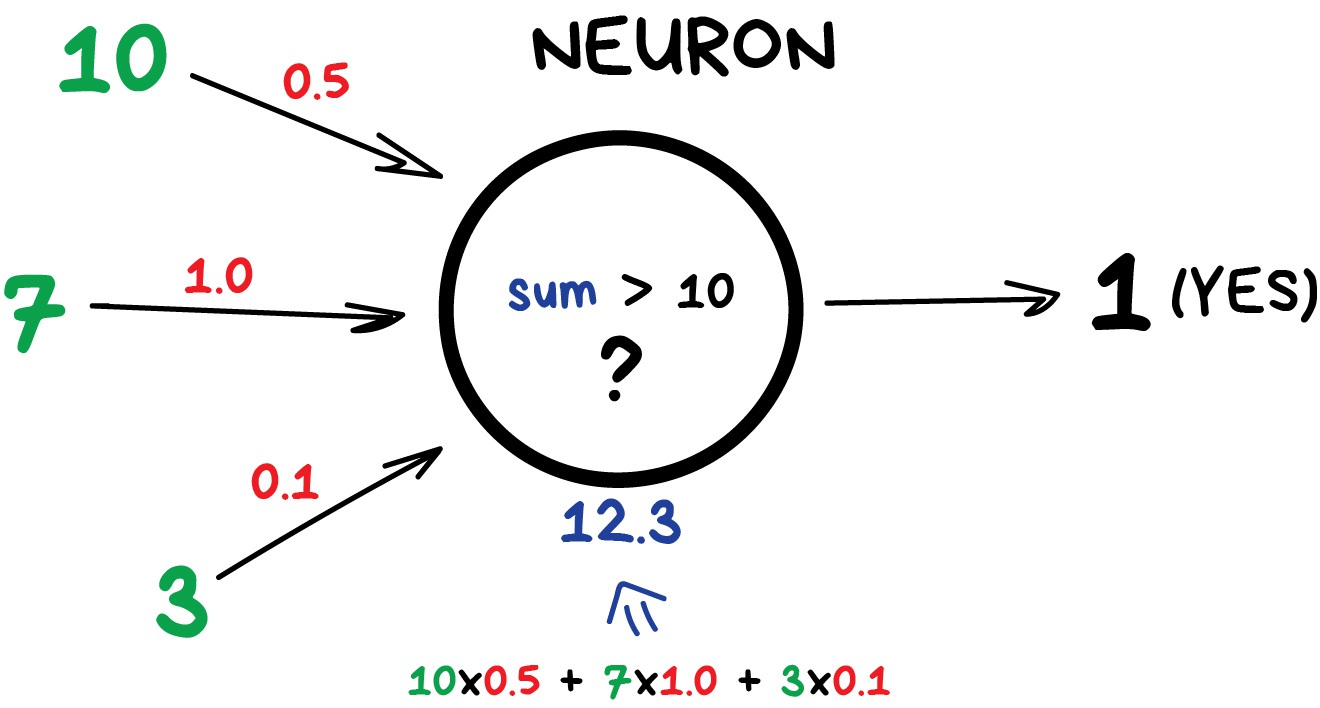

Part 4. Neural Networks and Deep Learning 第4部分。神经网络与深度学习

“We have a thousand-layer network, dozens of video cards, but still no idea where to use it. Let’s generate cat pics!”

“我们有一个千层网络,几十个视频卡,但仍然不知道在哪里使用。让我们生成猫的照片!”

Used today for:

- Replacement of all algorithms above 替换上述所有算法

- Object identification on photos and videos 照片和视频上的对象识别

- Speech recognition and synthesis 语音识别与合成

- Image processing, style transfer 图像处理、风格转换

- Machine translation 机器翻译

Popular architectures: Perceptron, Convolutional Network (CNN), Recurrent Networks (RNN), Autoencoders

流行架构:感知器、卷积网络(CNN)、递归网络(RNN)、自动编码器

If no one has ever tried to explain neural networks to you using “human brain” analogies, you’re happy. Tell me your secret. But first, let me explain it the way I like.

如果从来没有人试图用“人脑”的类比来向你解释神经网络,你会很高兴。告诉我你的秘密。但首先,让我用我喜欢的方式解释一下。

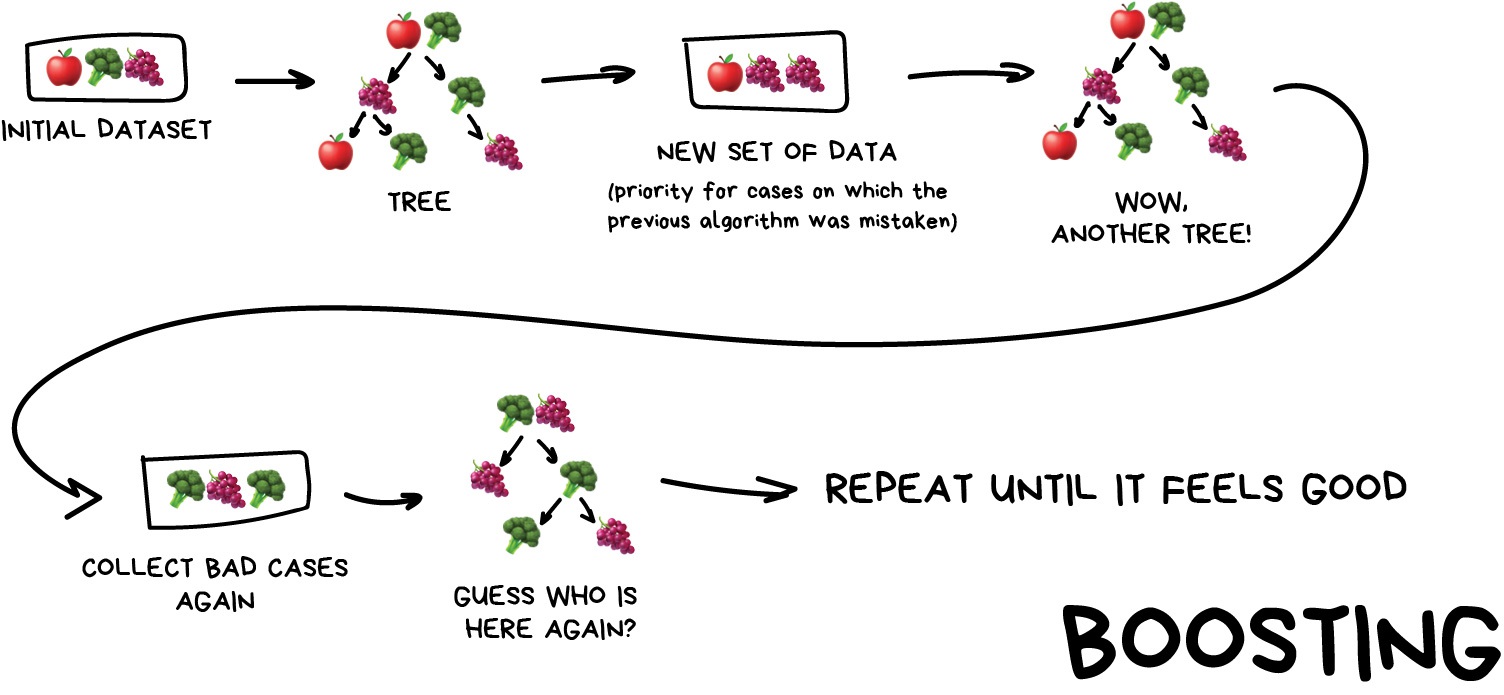

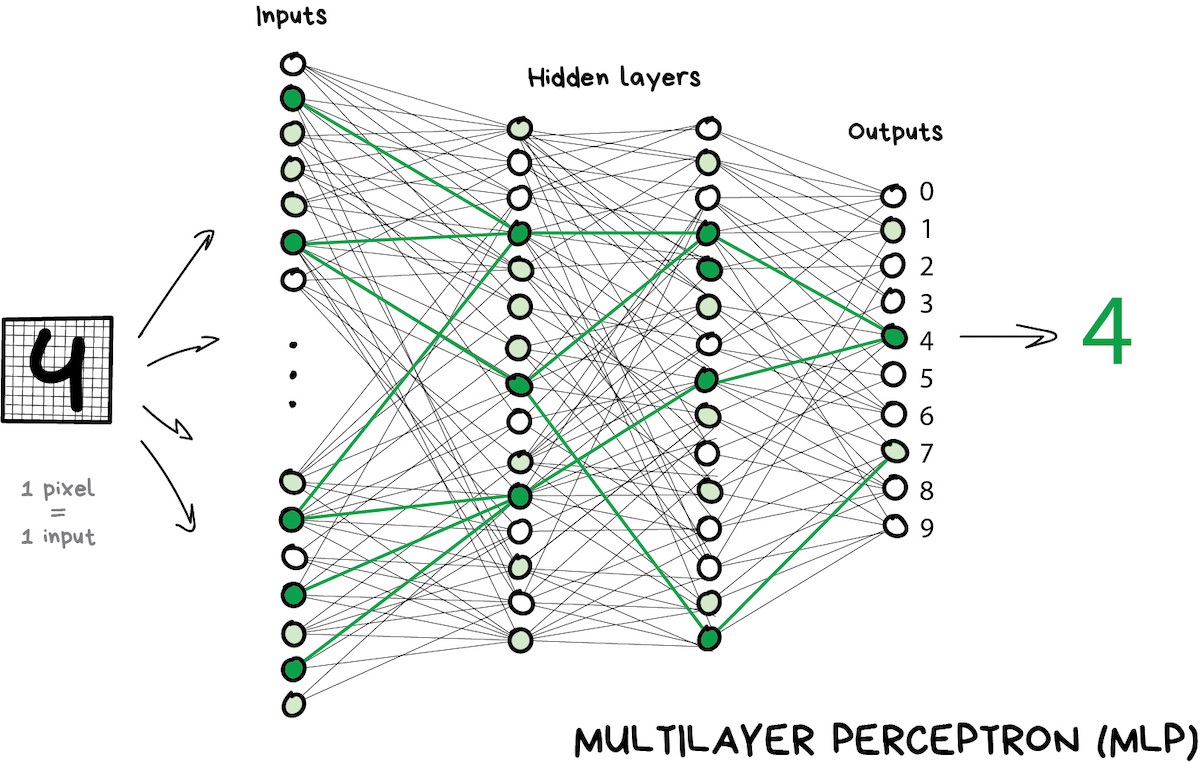

Any neural network is basically a collection of neurons and connections between them. A neuron is a function with a bunch of inputs and one output. Its task is to take all the numbers from its inputs, perform a function on them and send the result to the output.

任何神经网络基本上都是神经元及其之间连接的集合。神经元是一个具有一组输入和一个输出的函数。它的任务是从输入中获取所有数字,对它们执行函数,并将结果发送到输出。

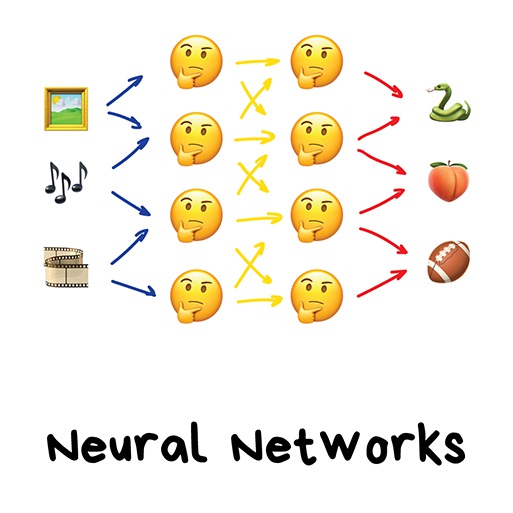

Here is an example of a simple but useful in real life neuron: sum up all numbers from the inputs and if that sum is bigger than N — give 1 as a result. Otherwise — zero.

这里有一个简单但在现实生活中有用的神经元的例子:将输入的所有数字相加,如果总和大于N,则给出1。否则——零。

Connections are like channels between neurons. They connect the outputs of one neuron with the inputs of another so they can send numbers to each other. Each connection has only one parameter: its weight. It’s like a connection strength for a signal. When the number 10 passes through a connection with a weight of 0.5 it turns into 5.

连接就像神经元之间的通道。它们将一个神经元的输出与另一个神经元输入连接起来,以便相互发送数字。每个连接只有一个参数——权重。这就像信号的连接强度。当数字10通过一个具有0.5重量的连接时,它变成了5。

These weights tell the neuron to respond more to one input and less to another. Weights are adjusted when training — that’s how the network learns. Basically, that’s all there is to it.

这些权重告诉神经元对一个输入的反应更多,对另一个输入反应更少。训练时会调整权重——这就是网络学习的方式。基本上,这就是它的全部。
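
Put together, the neuron and its weighted connections look something like this. A minimal sketch: the function name `neuron`, the weights and the threshold are made up for illustration.

```python
def neuron(inputs, weights, threshold):
    """Weigh each input, sum them up, and fire 1 if the sum is bigger than the threshold."""
    total = sum(x * w for x, w in zip(inputs, weights))
    return 1 if total > threshold else 0

# A signal of 10 passing through a connection of weight 0.5 arrives as 5.
print(10 * 0.5)

# With these hypothetical weights the neuron responds more to its first input
# than to its second, so the same numbers in a different order give a different answer.
print(neuron([10, 2], weights=[0.5, 0.1], threshold=5))
print(neuron([2, 10], weights=[0.5, 0.1], threshold=5))
```

Training never touches the function itself; it only changes the numbers in `weights`.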

To prevent the network from falling into anarchy, the neurons are linked by layers, not randomly. Within a layer neurons are not connected, but they are connected to neurons of the next and previous layers. Data in the network goes strictly in one direction — from the inputs of the first layer to the outputs of the last.

为了防止网络陷入无政府状态,神经元是分层连接的,而不是随机连接的。在一层内,神经元不相连,但它们与下一层和前一层的神经元相连。网络中的数据严格地朝着一个方向发展——从第一层的输入到最后一层的输出。

If you throw in a sufficient number of layers and set the weights correctly, you will get the following: when you feed the input with, say, an image of the handwritten digit 4, black pixels activate the associated neurons, they activate the next layers, and so on and on, until it finally lights up the output in charge of the four. The result is achieved.

如果你放入足够多的层并正确放置权重,你会得到以下结果:通过应用于输入,比如手写数字4的图像,黑色像素激活相关的神经元,它们激活下一层,以此类推,直到它最终照亮负责这四层的出口。取得了效果。

When doing real-life programming nobody is writing neurons and connections. Instead, everything is represented as matrices and calculated based on matrix multiplication for better performance. My favourite video on this and its sequel below describe the whole process in an easily digestible way using the example of recognizing hand-written digits. Watch them if you want to figure this out.

在进行真实编程时,没有人在编写神经元和连接。相反,所有内容都表示为矩阵,并基于矩阵乘法进行计算,以获得更好的性能。我最喜欢的这段视频及其后续视频以识别手写数字为例,以易于理解的方式描述了整个过程。如果你想弄清楚这一点,请注意他们。
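
To show what "everything is a matrix" means, here is a sketch of a two-layer network where each layer is one matrix and running the network is just repeated matrix-vector multiplication. The weights are hand-picked (an assumption, nothing was trained) so that the network computes XOR. Plain lists are used to keep it dependency-free; real code would hand the same matrices to a numeric library.

```python
def matvec(matrix, vector):
    """One row per neuron: multiply each row of weights by the input vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]

def fire(values, threshold=0.5):
    """Every neuron outputs 1 if its weighted sum is bigger than the threshold."""
    return [1 if v > threshold else 0 for v in values]

def forward(layers, inputs):
    """Data flows strictly one way: each layer's outputs feed the next layer."""
    activations = inputs
    for weights in layers:
        activations = fire(matvec(weights, activations))
    return activations

# Hand-picked weights, not trained.
layers = [
    [[1.0, 1.0],     # hidden neuron 1 fires if at least one input is on (OR)
     [0.4, 0.4]],    # hidden neuron 2 fires only if both inputs are on (AND)
    [[1.0, -1.0]],   # output neuron: OR but not AND, which is XOR
]

for pair in ([0, 0], [0, 1], [1, 0], [1, 1]):
    print(pair, forward(layers, pair))
```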

A network that has multiple layers with connections between every neuron of adjacent layers is called a multilayer perceptron (MLP) and is considered the simplest architecture for a novice. I haven’t seen it used for solving tasks in production.

一个具有多层且每个神经元之间都有连接的网络被称为感知器(MLP),被认为是新手最简单的架构。我没有看到它被用于解决生产中的任务。

After we have constructed a network, our task is to assign proper weights so neurons will react correctly to incoming signals. Now is the time to remember that we have data: samples of ‘inputs’ and proper ‘outputs’. We will show our network a drawing of the same digit 4 and tell it ‘adapt your weights so that whenever you see this input your output emits 4’.

在我们构建了一个网络后,我们的任务是分配正确的权重,使神经元对传入的信号做出正确的反应。现在是时候记住,我们拥有的数据是“输入”和正确“输出”的样本了。我们将向我们的网络展示同一数字4的图形,并告诉它“调整你的权重,这样每当你看到这个输入时,你的输出就会发出4”。

To start with, all weights are assigned randomly. After we show it a digit it emits a random answer because the weights are not correct yet, and we compare how much this result differs from the right one. Then we start traversing the network backward from outputs to inputs and tell every neuron ‘hey, you did activate here but you did a terrible job and everything went south from here downwards, let’s pay less attention to this connection and more to that one, mkay?’.

首先,所有权重都是随机分配的。在我们给它显示一个数字后,它会发出一个随机答案,因为权重还不正确,我们比较这个结果与正确的结果有多大差异。然后,我们开始从输出到输入反向遍历网络,并告诉每个神经元“嘿,你确实在这里激活了,但你做得很糟糕,一切都从这里向下发展,让我们少关注这个连接,多关注那个连接,mkay?”。

After hundreds of thousands of such cycles of ‘infer-check-punish’, there is a hope that the weights are corrected and act as intended. The scientific name for this approach is Backpropagation, or a ‘method of backpropagating an error’. Funny thing: it took twenty years to come up with this method. Before this we still taught neural networks somehow.

经过数十万次这样的“推断-检查-惩罚”循环,有希望纠正权重并按预期行事。这种方法的科学名称是反向传播,或“反向传播错误的方法”。有趣的是,花了二十年的时间才想出这个方法。在此之前,我们仍然以某种方式教授神经网络。
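
The ‘infer-check-punish’ cycle can be sketched on a network small enough to read. Assumptions, stated loudly: the task is XOR instead of digits, the network has one hidden layer of three sigmoid neurons, and the layer size, learning rate, seed and number of cycles are arbitrary choices for illustration.

```python
import math
import random

def sigmoid(z):
    return 1 / (1 + math.exp(-z))

# XOR: a tiny stand-in for samples of "inputs" paired with the proper "outputs".
SAMPLES = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 0)]
HIDDEN, RATE = 3, 0.5   # hypothetical layer size and learning rate

def init(seed=0):
    """To start with, all weights are assigned randomly."""
    rng = random.Random(seed)
    # Each row is one hidden neuron: two input weights plus a trailing bias.
    w_hidden = [[rng.uniform(-1, 1) for _ in range(3)] for _ in range(HIDDEN)]
    w_out = [rng.uniform(-1, 1) for _ in range(HIDDEN + 1)]   # last entry is the bias
    return w_hidden, w_out

def forward(net, x):
    w_hidden, w_out = net
    hidden = [sigmoid(w[0] * x[0] + w[1] * x[1] + w[2]) for w in w_hidden]
    output = sigmoid(sum(w * h for w, h in zip(w_out, hidden)) + w_out[-1])
    return hidden, output

def loss(net):
    return sum((forward(net, x)[1] - t) ** 2 for x, t in SAMPLES) / len(SAMPLES)

def train_step(net):
    w_hidden, w_out = net
    for x, target in SAMPLES:
        hidden, output = forward(net, x)                         # infer
        delta_out = (output - target) * output * (1 - output)    # check the error
        # Punish: pass the blame backward to each hidden neuron
        # in proportion to the weight that connected it to the output.
        delta_hidden = [delta_out * w_out[j] * hidden[j] * (1 - hidden[j])
                        for j in range(HIDDEN)]
        for j in range(HIDDEN):
            w_out[j] -= RATE * delta_out * hidden[j]
            w_hidden[j][0] -= RATE * delta_hidden[j] * x[0]
            w_hidden[j][1] -= RATE * delta_hidden[j] * x[1]
            w_hidden[j][2] -= RATE * delta_hidden[j]
        w_out[-1] -= RATE * delta_out

net = init()
loss_before = loss(net)
for _ in range(2000):
    train_step(net)
loss_after = loss(net)
print(loss_before, loss_after)
```

There is no guarantee the error reaches zero, only the hope that it shrinks, which is exactly the ‘there is a hope’ in the paragraph above.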

My second favorite vid is describing this process in depth, but it’s still very accessible.

我第二喜欢的视频是深入描述这个过程,但它仍然很容易理解。

A well-trained neural network can fake the work of any of the algorithms described in this chapter (and frequently works more precisely). This universality is what made them widely popular. “Finally we have an architecture of the human brain,” they said. “We just need to assemble lots of layers and teach them on any possible data,” they hoped. Then the first AI winter started, then it thawed, and then another wave of disappointment hit.

经过良好训练的神经网络可以伪造本章中描述的任何算法的工作(而且通常工作得更精确)。这种普遍性使它们广受欢迎。最后,我们有了人类大脑的架构,他们说我们只需要组装很多层,并根据他们希望的任何可能的数据教授他们。然后第一个人工智能的冬天开始了,然后它解冻了,然后又一波失望袭来。

It turned out networks with a large number of layers required computation power unimaginable at that time. Nowadays any gaming PC with a GeForce card outperforms the datacenters of that time. So people didn’t have any hope then of acquiring computation power like that, and neural networks were a huge bummer.

事实证明,具有大量层的网络需要当时难以想象的计算能力。如今,任何一台拥有geforces的游戏PC都胜过当时的数据中心。因此,当时人们对获得这样的计算能力没有任何希望,而神经网络是一个巨大的障碍。

And then ten years ago deep learning rose.

十年前,深度学习兴起了。

There’s a nice Timeline of machine learning describing the rollercoaster of hopes & waves of pessimism.

有一个很好的机器学习时间表,描述了希望和悲观情绪的过山车。

In 2012 convolutional neural networks won an overwhelming victory in the ImageNet competition, which made the world suddenly remember the methods of deep learning described in the ancient 90s. Now we have video cards!

2012年,卷积神经网络在ImageNet竞争中取得了压倒性的胜利,这让世界突然想起了90年代描述的深度学习方法。现在我们有了视频卡!

Deep learning differed from classical neural networks in its new methods of training that could handle bigger networks. Nowadays only theorists would try to draw a line between learning that counts as deep and learning that is not so deep. And we, as practitioners, use popular ‘deep’ libraries like Keras, TensorFlow & PyTorch even when we build a mini-network with five layers. Just because they are better suited than all the tools that came before. And we just call them neural networks.

深度学习与经典神经网络的不同之处在于可以处理更大网络的新训练方法。如今,只有理论家才会试图划分哪门学问应该考虑得很深和不那么深。作为从业者,我们正在使用流行的“深度”库,如Keras、TensorFlow和PyTorch,即使我们构建了一个有五层的迷你网络。只是因为它比以前的所有工具都更适合。我们称之为神经网络。

I’ll talk about the two main kinds used today.

我现在要讲两种主要的。

Convolutional Neural Networks (CNN) 卷积神经网络(CNN)

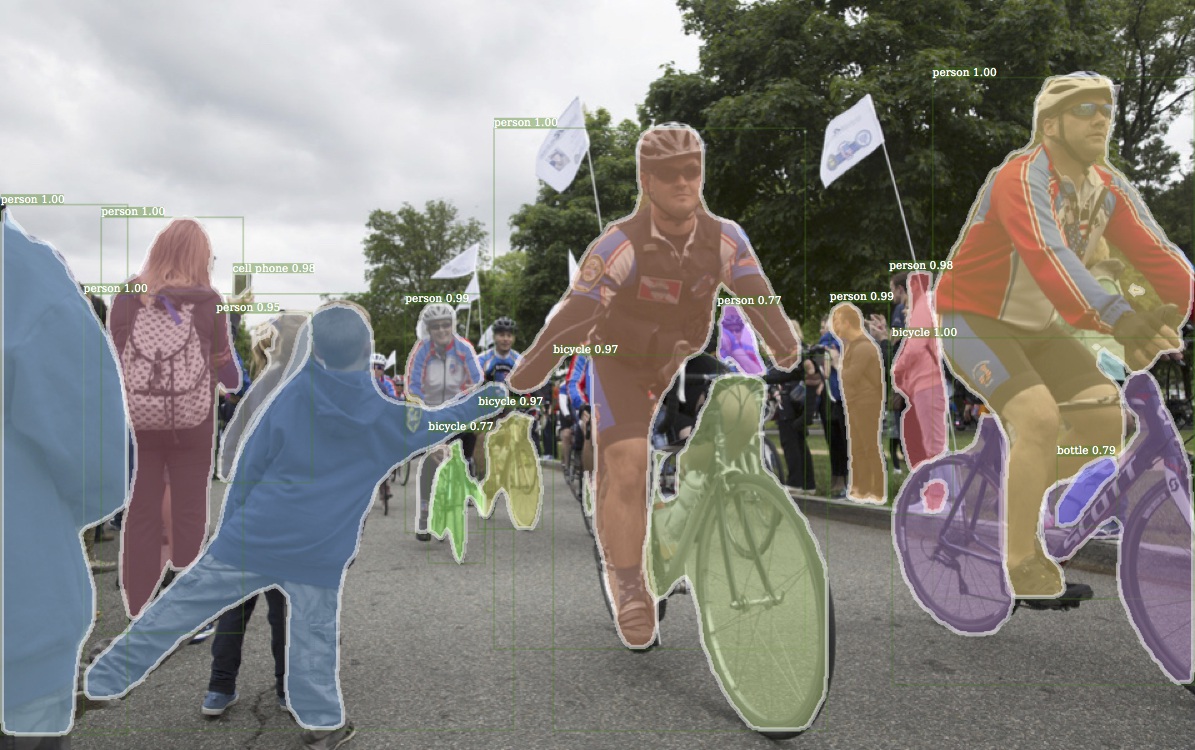

Convolutional neural networks are all the rage right now. They are used to search for objects in photos and videos, face recognition, style transfer, generating and enhancing images, creating effects like slow-mo and improving image quality. Nowadays CNNs are used in all the cases that involve pictures and videos. Even in your iPhone several of these networks are going through your nudes to detect objects in those. If there is something to detect, heh.

卷积神经网络现在风靡一时。它们用于搜索照片和视频中的对象、人脸识别、风格转换、生成和增强图像、创建慢动作等效果并提高图像质量。如今,所有涉及图片和视频的案例都使用了CNN。即使在你的iPhone中,其中几个网络也会通过你的裸体来检测其中的物体。如果有什么要探测的,呵呵。

Image above is a result produced by Detectron that was recently open-sourced by Facebook

上图是Detectron最近由Facebook开源的结果

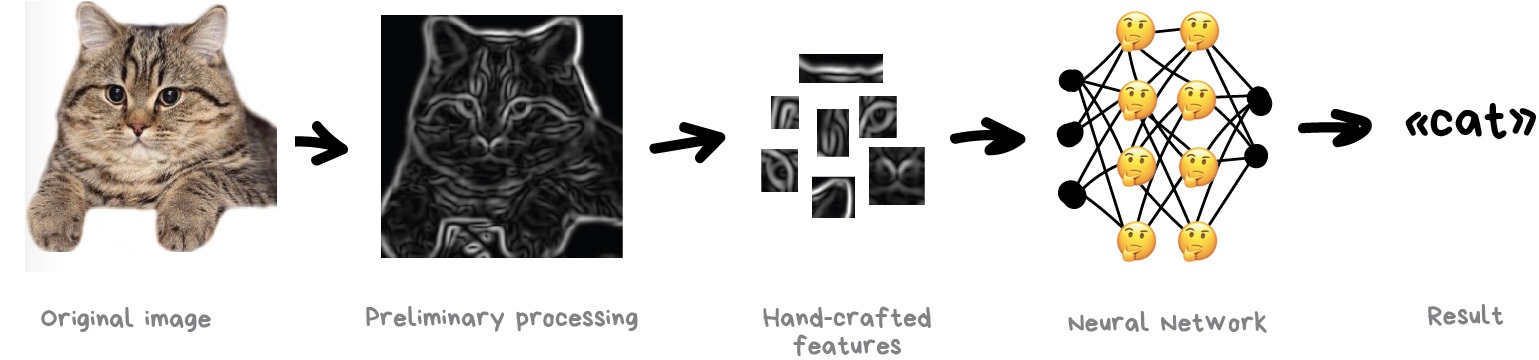

A problem with images was always the difficulty of extracting features out of them. You can split text by sentences, look up words’ attributes in specialized vocabularies, etc. But images had to be labeled manually to teach the machine where cat ears or tails were in this specific image. This approach got the name ‘handcrafting features’ and used to be used by almost everyone.

图像的一个问题总是难以从中提取特征。你可以按句子分割文本,在专业词汇表中查找单词的属性等。但图像必须手动标记,才能告诉机器猫耳朵或尾巴在这个特定图像中的位置。这种方法被称为“手工制作功能”,过去几乎每个人都在使用。

There are lots of issues with the handcrafting.

手工制作有很多问题。

First of all, if a cat had its ears down or turned away from the camera: you are in trouble, the neural network won’t see a thing.

首先,如果一只猫低着耳朵或转身离开相机:你有麻烦了,神经网络什么都看不见。

Secondly, try naming on the spot 10 different features that distinguish cats from other animals. I for one couldn’t do it, but when I see a black blob rushing past me at night, even if I only see it out of the corner of my eye, I would definitely tell a cat from a rat. Because people don’t look only at ear form or leg count; they take into account lots of different features they don’t even think about. And thus cannot explain them to the machine.

其次,试着当场命名10种不同的特征,将猫与其他动物区分开来。就我而言,我做不到,但当我在晚上看到一个黑色的斑点从我身边掠过时——即使我只是从眼角看到它——我肯定会分辨猫和老鼠。因为人们不仅仅看耳朵的形状或腿的数量,还考虑了很多他们甚至没有想过的不同特征。因此无法向机器解释。

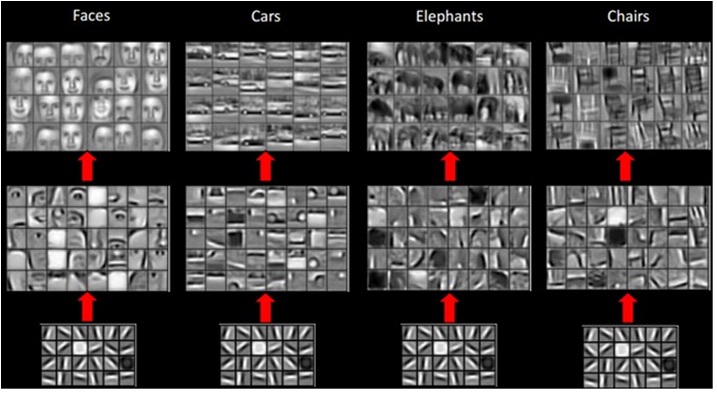

So it means the machine needs to learn such features on its own, building on top of basic lines. We’ll do the following: first, we divide the whole image into 8x8 pixel blocks and assign to each a type of dominant line – either horizontal [-], vertical [|] or one of the diagonals [/]. It can also be that several would be highly visible — this happens and we are not always absolutely confident.

因此,这意味着机器需要在基本线的基础上自行学习这些功能。我们将执行以下操作:首先,我们将整个图像划分为8x8像素的块,并为每个块分配一种类型的主导线——水平[-]、垂直[|]或其中一条对角线[/]。也可能有几个会非常明显——这种情况发生了,我们并不总是绝对有信心。

The output would be several tables of sticks that are in fact the simplest features representing object edges in the image. They are images on their own but built out of sticks. So we can once again take a block of 8x8 and see how they match together. And again and again…

输出将是几张棍子表,这些棍子实际上是表示图像上对象边缘的最简单特征。它们是自己的图像,但却是用棍子做成的。所以我们可以再次取一个8x8的块,看看它们是如何匹配在一起的。一次又一次…

This operation is called convolution, which gave the name for the method. Convolution can be represented as a layer of a neural network, because each neuron can act as any function.

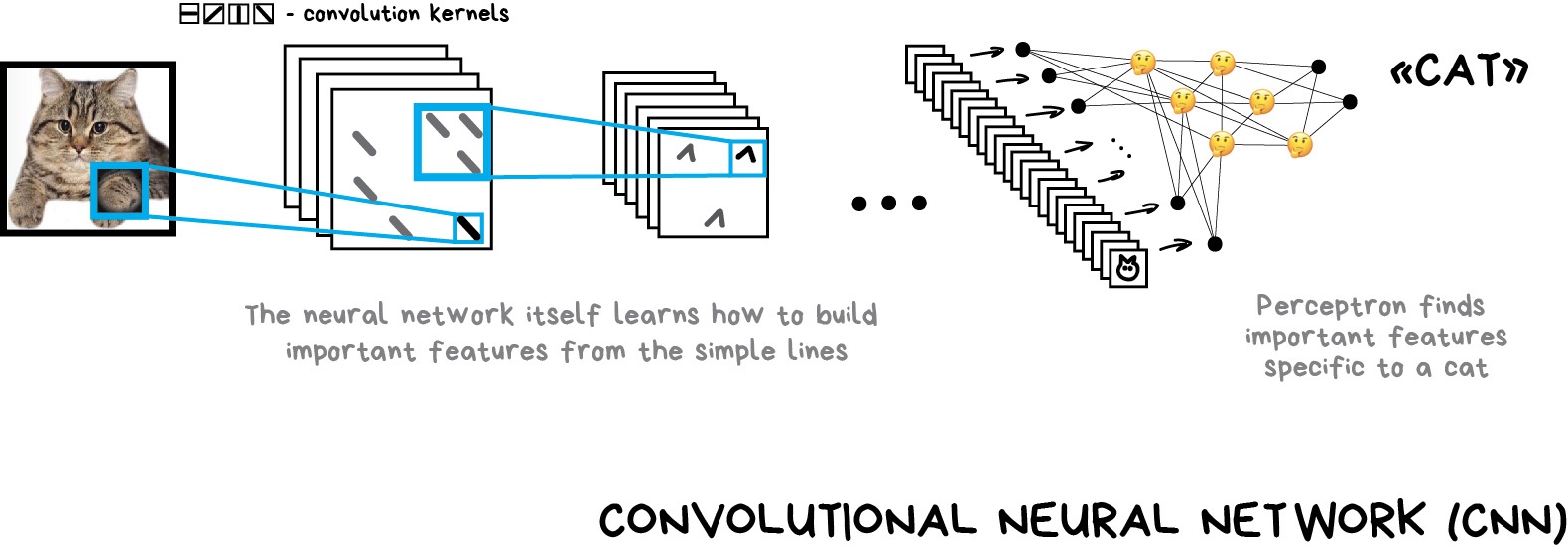

这个操作被称为卷积,它为该方法命名。卷积可以表示为神经网络的一层,因为每个神经元都可以作为任何函数。
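
Here is a sketch of that operation on the smallest example I could think of. Assumptions: a 5x5 image instead of real photos, 3x3 blocks instead of 8x8, and two hand-picked kernels that react to vertical and horizontal "sticks" (a real CNN learns its kernels as weights). Like most deep learning libraries, the code slides the kernel without flipping it.

```python
def convolve2d(image, kernel):
    """Slide the kernel over the image; each output cell is the weighted sum of one patch."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j] for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]

# A 5x5 image: dark (0) on the left, bright (1) on the right, so it holds one vertical edge.
image = [[0, 0, 1, 1, 1] for _ in range(5)]

# Hand-picked kernels, not learned.
vertical = [[-1, 0, 1],
            [-1, 0, 1],
            [-1, 0, 1]]
horizontal = [[-1, -1, -1],
              [0, 0, 0],
              [1, 1, 1]]

print(convolve2d(image, vertical))     # strong response where the vertical edge is
print(convolve2d(image, horizontal))   # no horizontal edges, so no response
```

The output of each kernel is itself a small image, a table of "how much of this stick is here", which is what the next layer gets to look at.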

When we feed our neural network with lots of photos of cats it automatically assigns bigger weights to those combinations of sticks it saw the most frequently. It doesn’t care whether it was a straight line of a cat’s back or a geometrically complicated object like a cat’s face, something will be highly activated.

当我们给神经网络提供大量猫的照片时,它会自动为它最频繁看到的棍子组合分配更大的权重。它不在乎它是猫背部的一条直线,还是像猫脸这样几何复杂的物体,有些东西会非常活跃。

As the output, we would put a simple perceptron which will look at the most activated combinations and based on that differentiate cats from dogs.

作为输出,我们将放置一个简单的感知器,它将观察最活跃的组合,并以此为基础区分猫和狗。

The beauty of this idea is that we have a neural net that searches for the most distinctive features of the objects on its own. We don’t need to pick them manually. We can feed it any amount of images of any object just by googling billions of images with it and our net will create feature maps from sticks and learn to differentiate any object on its own.

这个想法的美妙之处在于,我们有一个神经网络,可以自己搜索物体最独特的特征。我们不需要手动挑选它们。我们只需在谷歌上搜索数十亿张图像,就可以向它提供任何物体的任何数量的图像,我们的网络将从棒中创建特征图,并学会独立区分任何物体。

For this I even have a handy unfunny joke:

对此,我甚至有一个不好笑的笑话:

Give your neural net a fish and it will be able to detect fish for the rest of its life. Give your neural net a fishing rod and it will be able to detect fishing rods for the rest of its life…

给你的神经网络一条鱼,它将能够在余生中检测到鱼。给你的神经网络一根鱼竿,它将能够在余生中检测鱼竿…

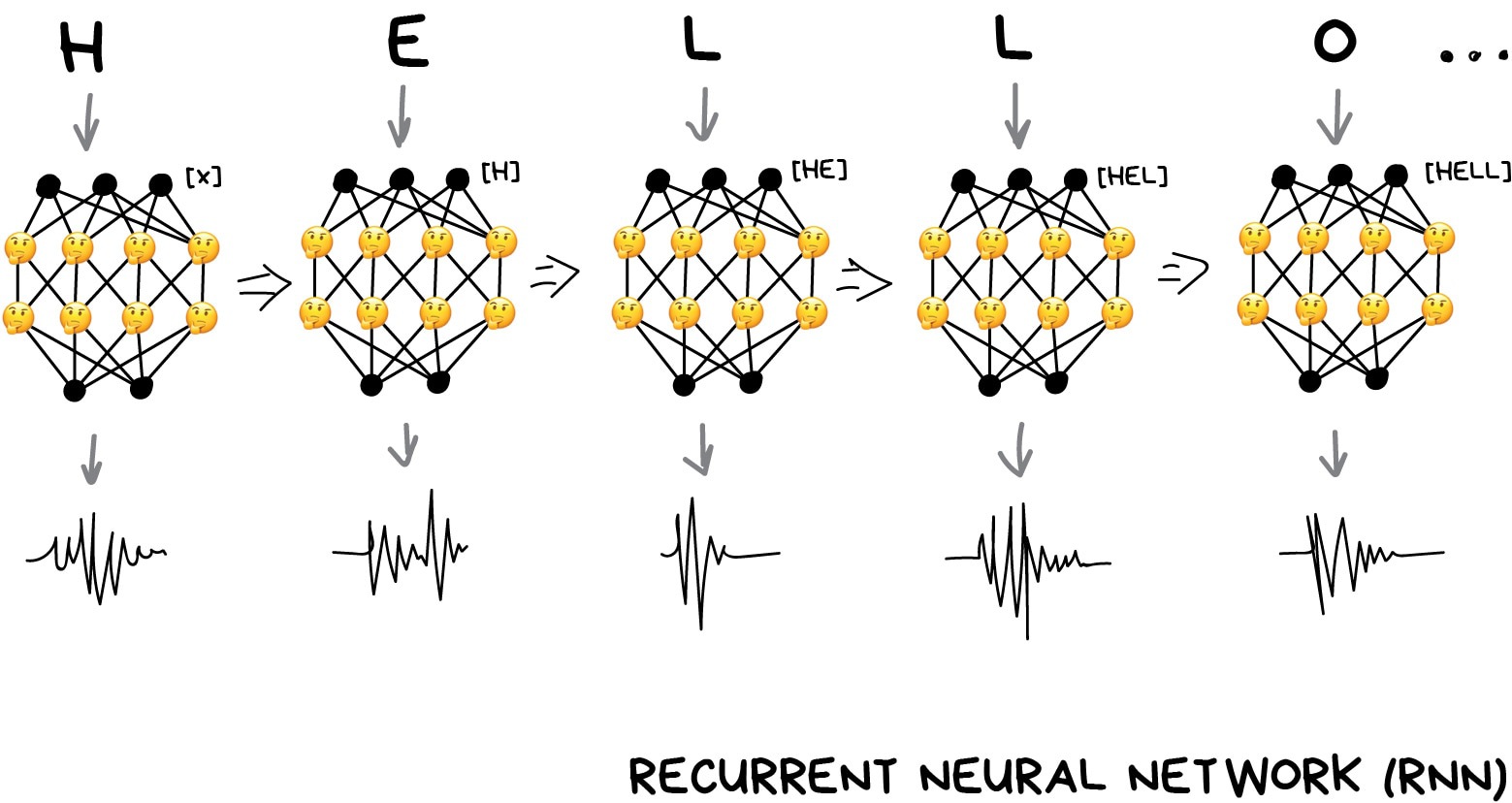

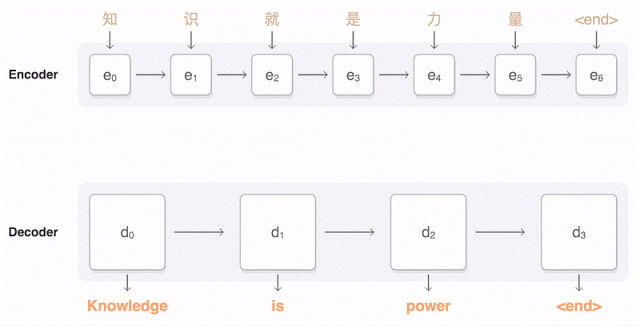

Recurrent Neural Networks (RNN) 递归神经网络

The second most popular architecture today. Recurrent networks gave us useful things like neural machine translation (here is my post about it), speech recognition and voice synthesis in smart assistants. RNNs are the best for sequential data like voice, text or music.

当今第二流行的建筑。递归网络为我们提供了一些有用的东西,比如神经机器翻译(这是我的帖子)、语音识别和智能助手中的语音合成。RNN最适用于语音、文本或音乐等顺序数据。

Remember Microsoft Sam, the old-school speech synthesizer from Windows XP? That funny guy builds words letter by letter, trying to glue them together. Now, look at Amazon Alexa or Assistant from Google. They don’t only say the words clearly, they even place the right accents!

还记得微软的Sam吗?那是Windows XP的老式语音合成器?那个有趣的家伙一个字母一个字母地造单词,试图把它们粘在一起。现在,看看亚马逊Alexa或谷歌助手。他们不仅把单词说得很清楚,他们甚至用正确的口音!

All because modern voice assistants are trained to speak not letter by letter, but on whole phrases at once. We can take a bunch of voiced texts and train a neural network to generate an audio-sequence closest to the original speech.

这一切都是因为现代语音助理被训练成不是一个字母一个字母地说话,而是同时说出整个短语。我们可以取一堆有声文本,训练神经网络生成最接近原始语音的音频序列。

In other words, we use text as input and its audio as the desired output. We ask a neural network to generate some audio for the given text, then compare it with the original, correct errors and try to get as close as possible to ideal.

换句话说,我们使用文本作为输入,使用它的音频作为所需的输出。我们要求神经网络为给定的文本生成一些音频,然后将其与原始文本进行比较,纠正错误,并尽可能接近理想。

Sounds like a classical learning process. Even a perceptron is suitable for this. But how should we define its outputs? Firing one particular output for each possible phrase is not an option — obviously.

听起来像是一个经典的学习过程。即使是感知器也适用于此。但我们应该如何定义其产出?显然,为每个可能的短语触发一个特定的输出不是一种选择。

Here we’ll be helped by the fact that text, speech or music are sequences. They consist of consecutive units like syllables. They all sound unique but depend on previous ones. Lose this connection and you get dubstep.

在这里,文本、语音或音乐都是序列这一事实将对我们有所帮助。它们像音节一样由连续的单元组成。它们听起来都很独特,但依赖于以前的版本。失去此连接,您将获得dubstep。

We can train the perceptron to generate these unique sounds, but how will it remember previous answers? So the idea is to add memory to each neuron and use it as an additional input on the next run. A neuron could make a note for itself - hey, we had a vowel here, the next sound should sound higher (it’s a very simplified example).

我们可以训练感知器来产生这些独特的声音,但它将如何记住以前的答案?因此,我们的想法是为每个神经元添加内存,并在下一次运行时将其用作额外的输入。神经元可以为自己做一个音符——嘿,我们这里有一个元音,下一个声音应该听起来更高(这是一个非常简单的例子)。

That’s how recurrent networks appeared. 循环网络就是这样出现的。
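
A single neuron with memory can be sketched in a few lines. Everything here is a simplifying assumption: one neuron, scalar inputs, fixed weights `w_in` and `w_mem` that would normally be learned, and tanh as the function.

```python
import math

def rnn_step(x, memory, w_in=1.0, w_mem=0.5):
    """One neuron with memory: its previous output comes back as an extra input."""
    return math.tanh(w_in * x + w_mem * memory)

def run(sequence):
    memory = 0.0
    outputs = []
    for x in sequence:
        memory = rnn_step(x, memory)   # the new output becomes the note for the next run
        outputs.append(memory)
    return outputs

early = run([1.0, 0.0, 0.0])   # the signal arrives first, then silence
late = run([0.0, 0.0, 1.0])    # silence first, then the same signal
print(early)
print(late)
```

In `early` the second and third inputs are zero, yet the outputs are not: the neuron still "remembers" the first sound, and that memory fades a little with every step.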

This approach had one huge problem - when all neurons remembered their past results, the number of connections in the network became so huge that it was technically impossible to adjust all the weights.

这种方法有一个巨大的问题——当所有神经元都记得他们过去的结果时,网络中的连接数量变得如此巨大,以至于从技术上讲不可能调整所有的权重。

When a neural network can’t forget, it can’t learn new things (people have the same flaw).

当神经网络无法忘记时,它就无法学习新事物(人们也有同样的缺陷)。

The first solution was simple: limit the neuron memory. Let’s say, to memorize no more than 5 recent results. But it broke the whole idea.

第一个决定很简单:限制神经元的记忆。比方说,记住不超过5个最近的结果。但它打破了整个想法。

A much better approach came later: to use special cells, similar to computer memory. Each cell can record a number, read it or reset it. They were called long short-term memory (LSTM) cells.

后来出现了一种更好的方法:使用类似于计算机内存的特殊单元。每个细胞都可以记录、读取或重置一个数字。它们被称为长短期记忆(LSTM)细胞。

Now, when a neuron needs to set a reminder, it puts a flag in that cell. Like “it was a consonant in a word, next time use different pronunciation rules”. When the flag is no longer needed, the cells are reset, leaving only the “long-term” connections of the classical perceptron. In other words, the network is trained not only to learn weights but also to set these reminders.

现在,当神经元需要设置提醒时,它会在该细胞中设置一个标志。就像“它是一个单词中的一个辅音,下次使用不同的发音规则”。当不再需要标志时,单元被重置,只留下经典感知器的“长期”连接。换句话说,网络不仅被训练来学习权重,还被训练来设置这些提醒。
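
The record, read and reset operations map onto the gates of an LSTM cell. Below is a scalar sketch of one step using the standard gate equations; the parameter dictionaries `keep` and `reset` are hand-set assumptions chosen to force the gates open or shut, whereas a real network learns these numbers during training.

```python
import math

def sigmoid(z):
    return 1 / (1 + math.exp(-z))

def lstm_step(x, h_prev, c_prev, p):
    """One scalar LSTM step; `p` maps each gate to (input weight, recurrent weight, bias)."""
    def gate(name, squash):
        w_x, w_h, b = p[name]
        return squash(w_x * x + w_h * h_prev + b)

    forget = gate("forget", sigmoid)           # near 0: reset the cell, near 1: keep it
    write = gate("input", sigmoid)             # how much of the new value to record
    candidate = gate("candidate", math.tanh)   # the new value itself
    read = gate("output", sigmoid)             # how much of the cell to expose
    c = forget * c_prev + write * candidate
    h = read * math.tanh(c)
    return h, c

# Hand-set parameters (biases only) that pin the gates nearly open or nearly shut.
keep = {"forget": (0, 0, 10), "input": (0, 0, -10),
        "candidate": (1, 0, 0), "output": (0, 0, 10)}
reset = dict(keep, forget=(0, 0, -10))

_, kept = lstm_step(x=1.0, h_prev=0.0, c_prev=0.8, p=keep)
_, wiped = lstm_step(x=1.0, h_prev=0.0, c_prev=0.8, p=reset)
print(kept, wiped)
```

With the forget gate open the stored 0.8 survives the step almost untouched; with it shut the cell is wiped, which is the "flag is no longer needed" case.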

Simple, but it works!

很简单,但它有效!

You can take speech samples from anywhere. BuzzFeed, for example, took Obama’s speeches and trained a neural network to imitate his voice. As you see, audio synthesis is already a simple task. Video still has issues, but it’s a question of time.

你可以从任何地方采集语音样本。例如,BuzzFeed采用了奥巴马的演讲,并训练了一个神经网络来模仿他的声音。正如您所看到的,音频合成已经是一项简单的任务。视频仍然有问题,但这是时间问题。

There are many more network architectures in the wild. I recommend a good article called Neural Network Zoo, where almost all types of neural networks are collected and briefly explained.

现在有更多的网络体系结构。我推荐一篇名为《神经网络动物园》的好文章,其中收集并简要解释了几乎所有类型的神经网络。

The End: when is the war with the machines? 结局:什么时候和机器开战?

The main problem here is that the question “when will the machines become smarter than us and enslave everyone?” is wrong from the start. There are too many hidden conditions in it.

这里的主要问题是,“机器什么时候会变得比我们更聪明并奴役所有人?”这个问题最初是错误的。其中有太多的隐藏条件。

We say “become smarter than us” like we mean that there is a certain unified scale of intelligence. The top of which is a human, dogs are a bit lower, and stupid pigeons are hanging around at the very bottom.

我们说“变得比我们更聪明”,就像我们的意思是智力有一定的统一尺度一样。它的顶部是一个人,狗有点低,愚蠢的鸽子在最底部徘徊。

That’s wrong.

这是错误的。

If this were the case, every human would beat animals at everything, but that’s not true. The average squirrel can remember a thousand hidden places with nuts; I can’t even remember where my keys are.

如果是这样的话,每个人都必须在任何事情上打败动物,但事实并非如此。一般松鼠都能记住一千个藏着坚果的地方——我甚至记不起我的钥匙在哪里了。

So intelligence is a set of different skills, not a single measurable value? Or is remembering where nuts are stashed not included in intelligence?

所以智力是一套不同的技能,而不是一个单一的可测量的价值?还是情报中没有包括记住坚果的藏匿地点?

An even more interesting question for me: why do we believe that the possibilities of the human brain are limited? There are many popular graphs on the Internet where technological progress is drawn as an exponential curve and human possibilities are constant. But are they?

对我来说,还有一个更有趣的问题——为什么我们认为人脑的可能性是有限的?互联网上有许多流行的图表,其中技术进步被画成指数,人类的可能性是恒定的。但是是吗?

Ok, multiply 1680 by 950 right now in your mind. I know you won’t even try, lazy bastards. But give you a calculator — you’ll do it in two seconds. Does this mean that the calculator just expanded the capabilities of your brain?

好的,现在在你的脑海中用1680乘以950。我知道你甚至不会尝试,懒惰的混蛋。但给你一个计算器,你两秒钟就能算出。这是否意味着计算器只是扩展了你大脑的功能?

If yes, can I continue to expand them with other machines? Like, use notes in my phone to avoid remembering a shitload of data? Oh, seems like I’m doing it right now. I’m expanding the capabilities of my brain with the machines.

如果是,我可以继续用其他机器扩展它们吗?比如,用我手机里的笔记来不记住大量数据?哦,看来我现在正在做。我正在用机器扩展我大脑的能力。

Think about it. Thanks for reading.

想想看。谢谢你的阅读。