PyTorch-26H-1

PyTorch-26H-1 homepage: https://www.freecodecamp.org/news/learn-pytorch-for-deep-learning-in-day/

YouTube: https://youtu.be/V_xro1bcAuA

GitHub: https://github.com/mrdbourke/pytorch-deep-learning

Learn PyTorch for Deep Learning: Zero to Mastery book: https://www.learnpytorch.io/

PyTorch documentation: https://pytorch.org/docs/stable/index.html

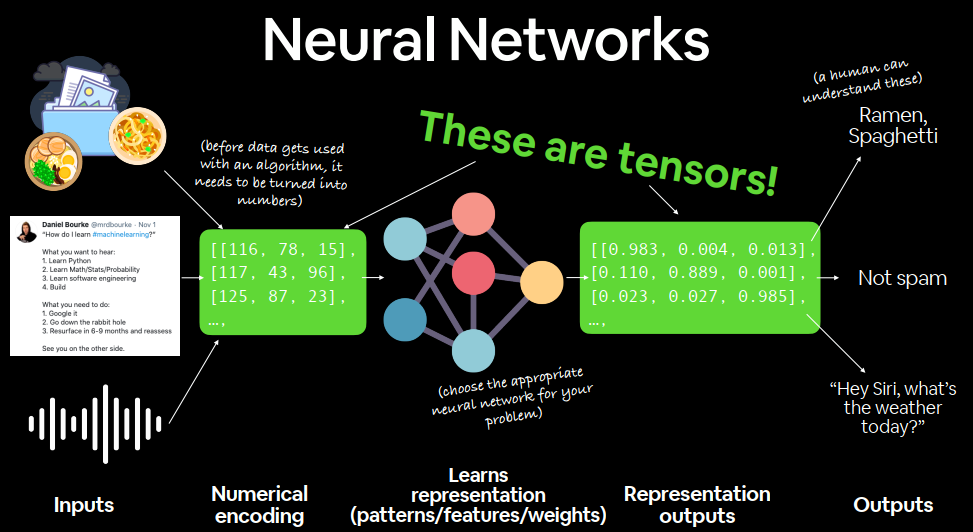

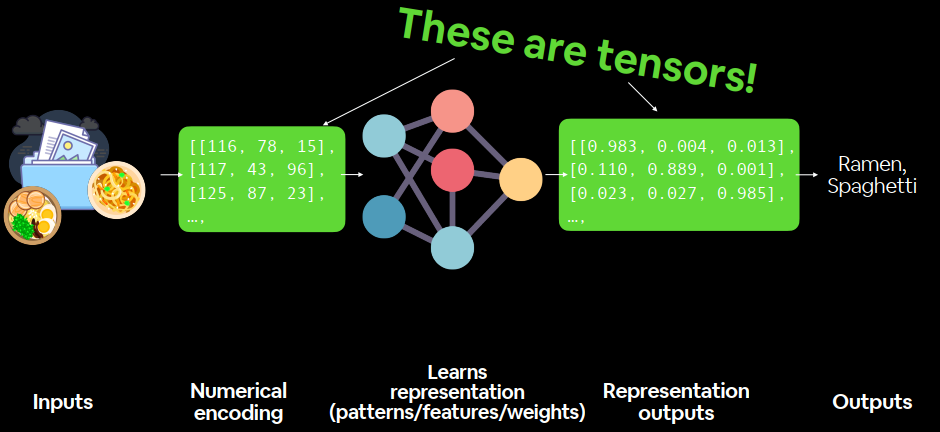

Chapter 0 – PyTorch Fundamentals

What is deep learning?

Machine learning is turning things (data) into numbers and finding patterns in those numbers.

Deep Learning ⊂ Machine Learning ⊂ Artificial Intelligence

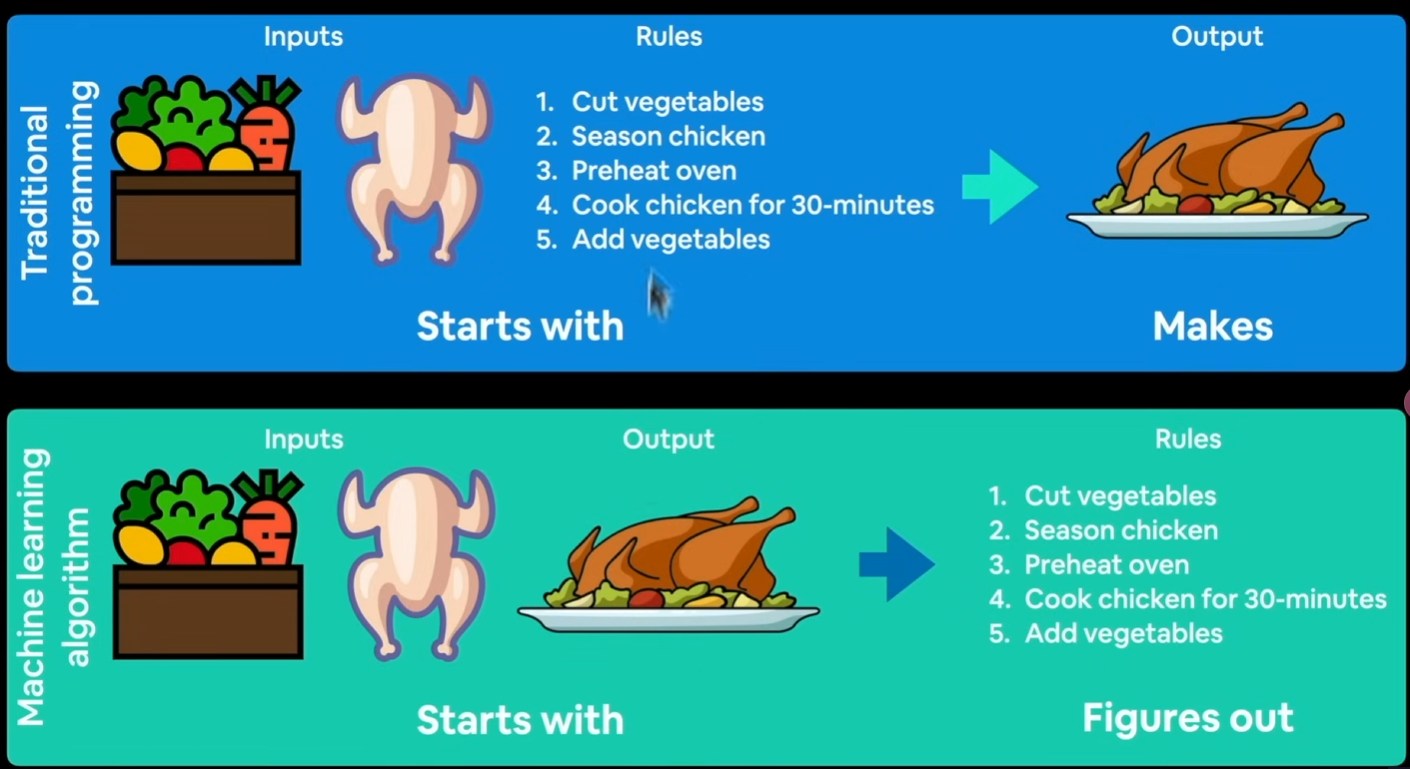

Traditional programming: inputs + rules → output

Why use machine/deep learning?

For complex problems, you can't find (or write down) all of the rules.

The number one rule of ML: “If you can build a simple rule-based system that doesn’t require machine learning, do that.”

Problems with long lists of rules: when the traditional approach fails, machine learning/deep learning may help.

Continually changing environments: deep learning can adapt (‘learn’) to new scenarios.

Discovering insights within large collections of data: can you imagine trying to hand-craft rules for what 101 different kinds of food look like?

What deep learning is not good for

When you need explainability: the patterns learned by a deep learning model are typically uninterpretable by a human.

When the traditional approach is a better option: if you can accomplish what you need with a simple rule-based system.

When errors are unacceptable: the outputs of a deep learning model aren’t always predictable.

When you don’t have much data: deep learning models usually require a fairly large amount of data to produce great results.

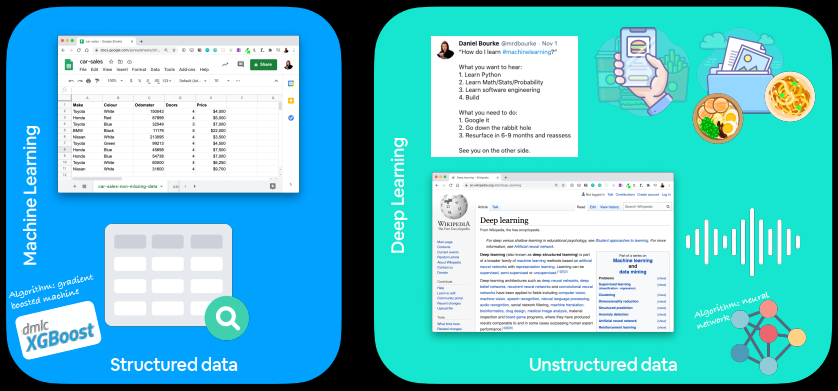

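Machine learning vs deep learning

Traditional machine learning: well suited to structured data.

Common algorithms:

Deep learning: well suited to unstructured data.

Common algorithms: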

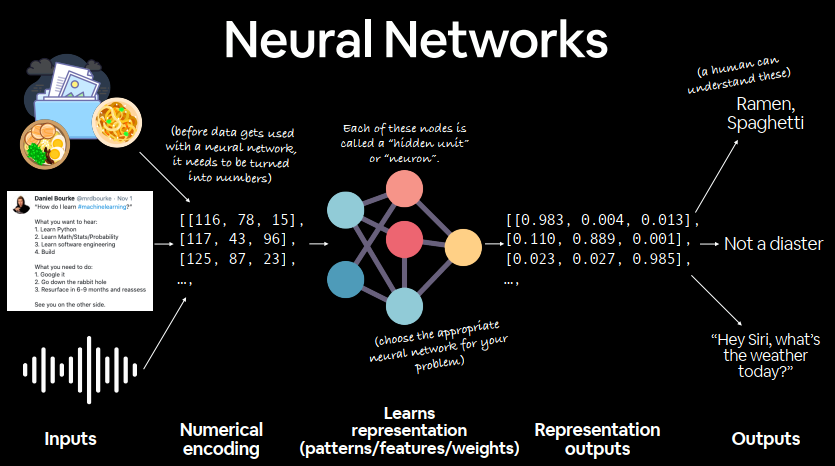

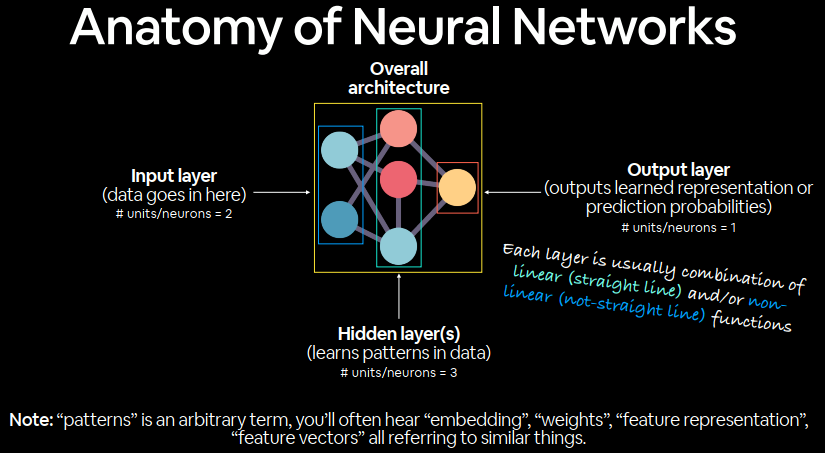

Anatomy of neural networks

Data → numbers → neural network → weights → output

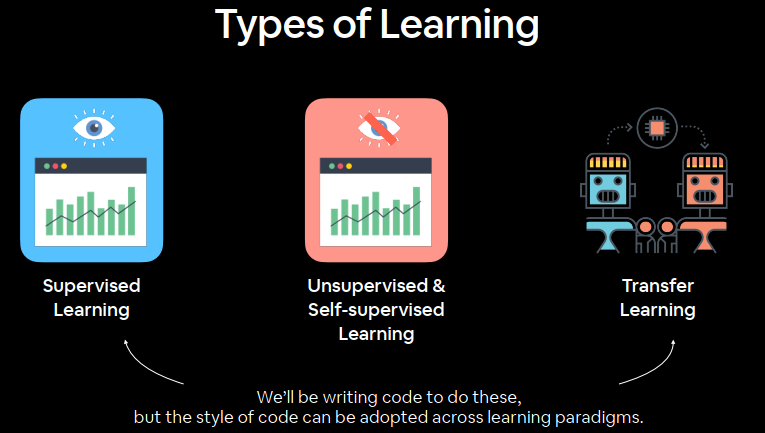

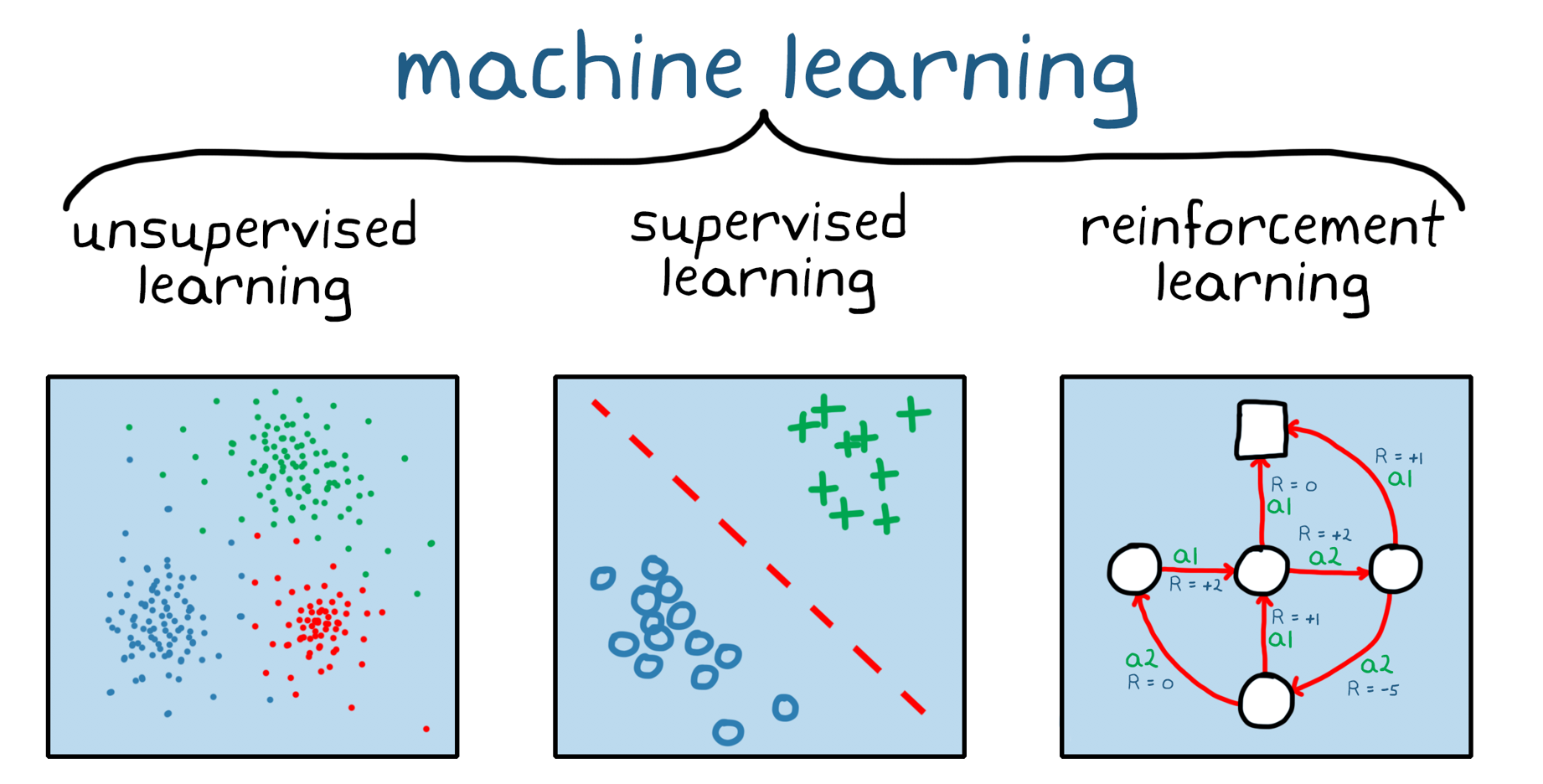

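Different learning paradigms

Supervised learning: lots of data that already has known labels.

Unsupervised learning: the model analyses the data by itself, without labels.

Transfer learning: take the patterns one model has learned and use them in a new model.

Reinforcement learning: reward the outcomes you want.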

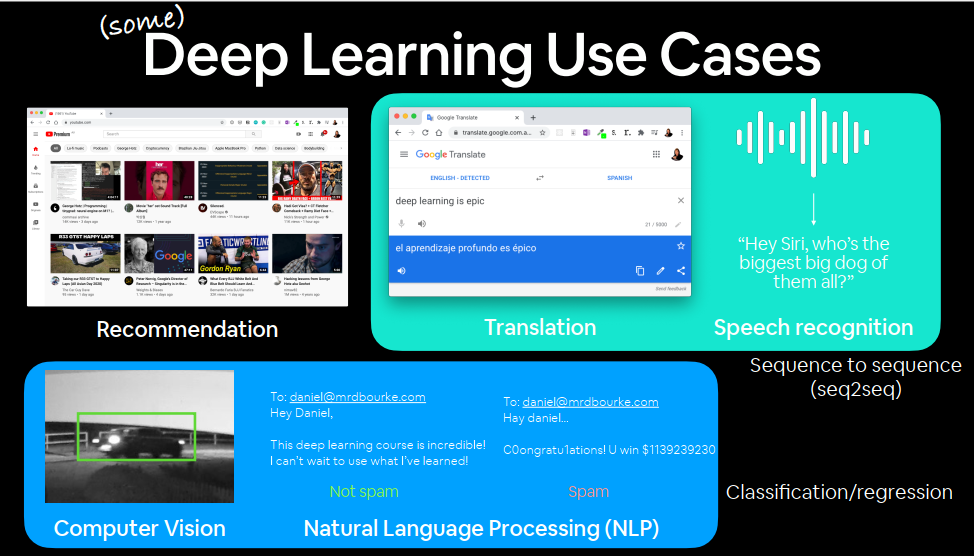

What can deep learning be used for?

Computer vision (CV)

Natural language processing (NLP)

Sequence in and sequence out (sequence-to-sequence)

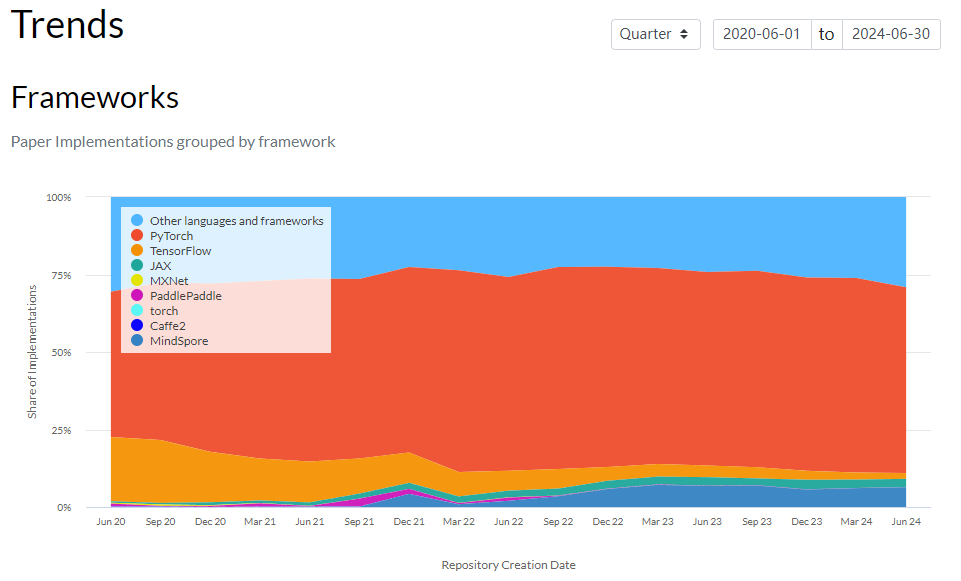

What is/why PyTorch?

Most popular research deep learning framework

Write fast deep learning code in Python (able to run on a GPU/many GPUs)

Able to access many pre-built deep learning models (Torch Hub/torchvision.models)

Whole stack: preprocess data, model data, deploy model in your application/cloud

Originally designed and used in-house by Facebook/Meta (now open source and used by companies such as Tesla, Microsoft, OpenAI)

Papers with Code

GPU (Graphics Processing Unit)

TPU (Tensor Processing Unit)

What are tensors?

Outline

Now:

PyTorch basics & fundamentals (dealing with tensors and tensor operations)


Later:

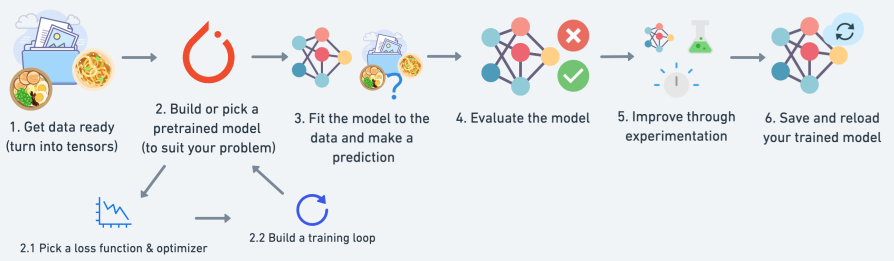

Preprocessing data (getting it into tensors)

Building and using pretrained deep learning models

Fitting a model to the data (learning patterns)

Making predictions with a model (using patterns)

Evaluating model predictions

Saving and loading models

Using a trained model to make predictions on custom data

A little bit of science, a little bit of art.

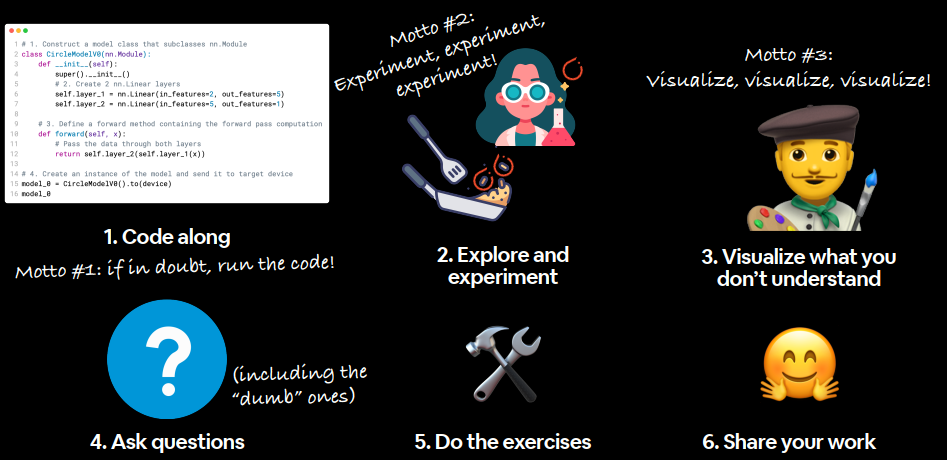

How to (and how not to) approach this course

1. Code along

2. Explore and experiment

3. Visualize what you don’t understand

4. Ask questions

5. Do the exercises

6. Share your work

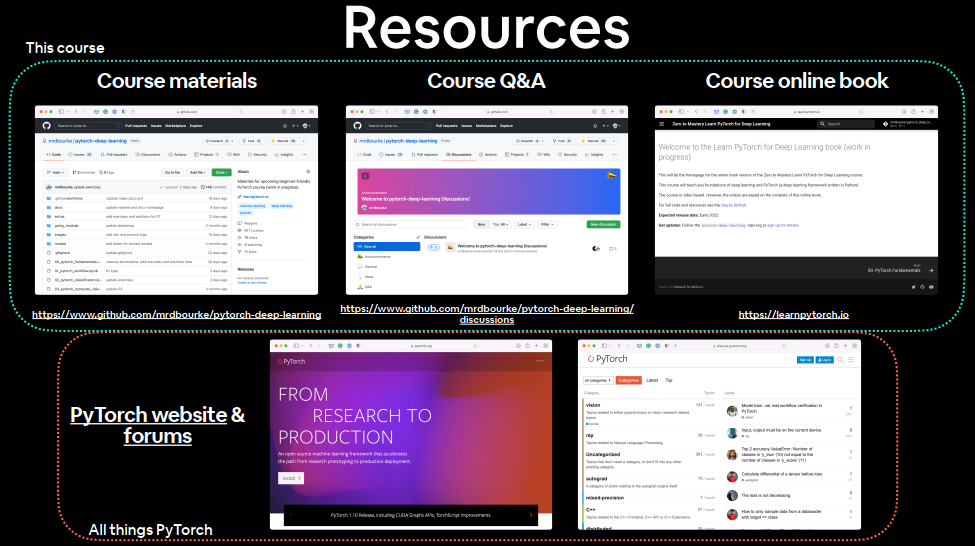

Important resources 课程资料:Course materials

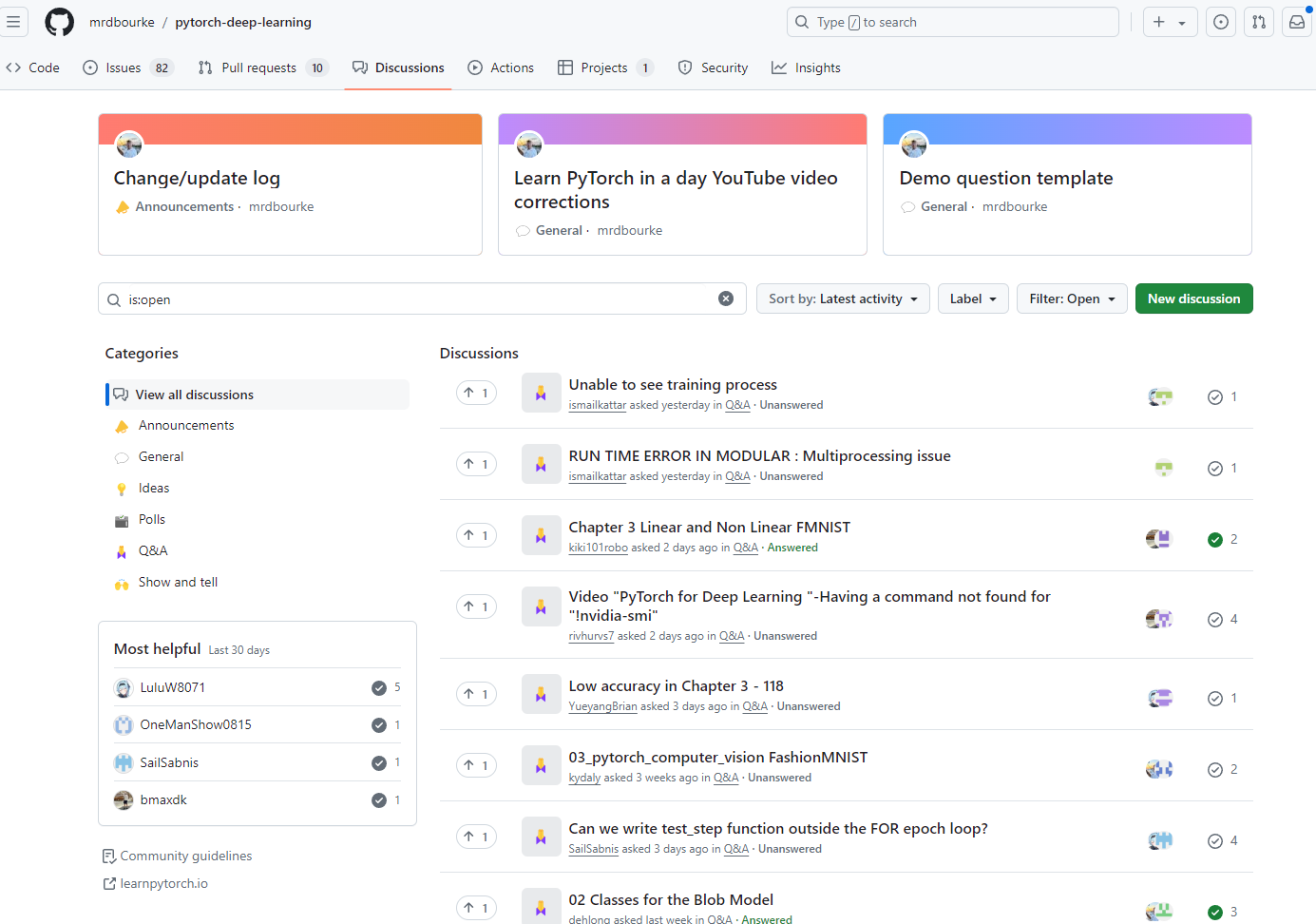

问答:Course Q&A

书籍:Course online book

pytorch_org

PyTorch documentation

出问题去讨论区

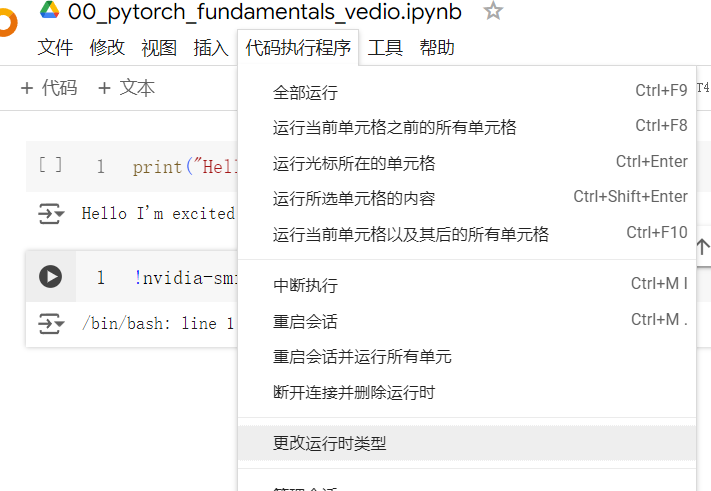

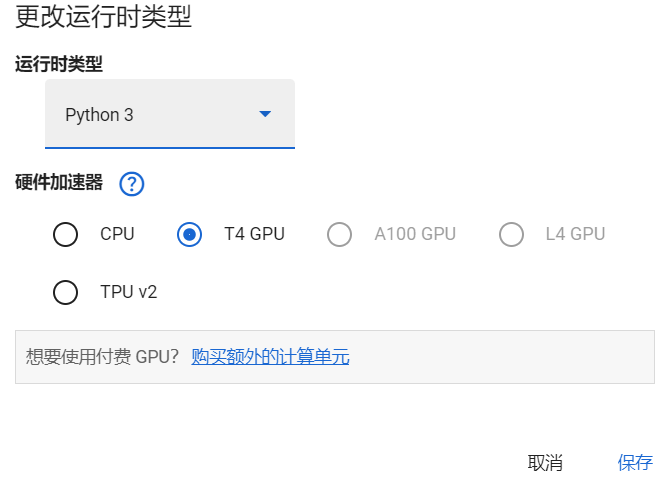

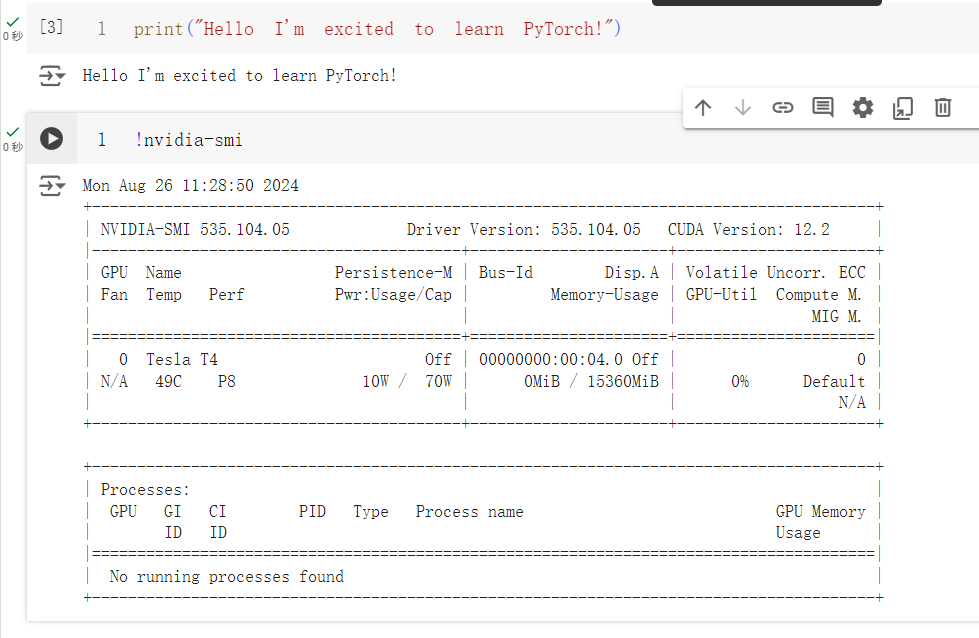

Getting set up: Google Colab

00_pytorch_fundamentals_vedio.ipynb

```python
print("Hello I'm excited to learn PyTorch!")
```

```
Hello I'm excited to learn PyTorch!
```

```python
!nvidia-smi
```

```
Mon Aug 26 11:28:50 2024
+---------------------------------------------------------------------------------------+
| NVIDIA-SMI 535.104.05             Driver Version: 535.104.05   CUDA Version: 12.2     |
|-----------------------------------------+----------------------+----------------------+
| GPU  Name                 Persistence-M | Bus-Id        Disp.A | Volatile Uncorr. ECC |
| Fan  Temp   Perf          Pwr:Usage/Cap |         Memory-Usage | GPU-Util  Compute M. |
|                                         |                      |               MIG M. |
|=========================================+======================+======================|
|   0  Tesla T4                       Off | 00000000:00:04.0 Off |                    0 |
| N/A   49C    P8              10W /  70W |      0MiB / 15360MiB |      0%      Default |
|                                         |                      |                  N/A |
+-----------------------------------------+----------------------+----------------------+

+---------------------------------------------------------------------------------------+
| Processes:                                                                            |
|  GPU   GI   CI        PID   Type   Process name                            GPU Memory |
|        ID   ID                                                             Usage      |
|=======================================================================================|
|  No running processes found                                                           |
+---------------------------------------------------------------------------------------+
```

```python
import torch
import pandas as pd
import numpy as np
import matplotlib.pyplot as plt

print(torch.__version__)
```

```
2.3.1+cu121
```

Introduction to tensors

torch.Tensor

A torch.Tensor is a multi-dimensional matrix containing elements of a single data type.

Scalar

```python
import torch

scalar = torch.tensor(7)
scalar
scalar.ndim    # 0
scalar.item()  # 7, as a plain Python int
```

Vector: has magnitude and direction

```python
vector = torch.tensor([7, 7])
vector
vector.ndim   # 1
vector.shape  # torch.Size([2])
```

Matrix

```python
MATRIX = torch.tensor([[7, 8],
                       [9, 10]])
MATRIX
MATRIX.ndim   # 2
MATRIX[0]     # tensor([7, 8])
MATRIX[1]     # tensor([ 9, 10])
MATRIX.shape  # torch.Size([2, 2])
```

Tensor

```python
TENSOR = torch.tensor([[[1, 2, 3],
                        [3, 6, 9],
                        [2, 4, 6]]])
TENSOR
TENSOR.ndim   # 3
TENSOR.shape  # torch.Size([1, 3, 3])
TENSOR[0]
```

```python
TENSOR = torch.tensor([[[1, 2],
                        [3, 6],
                        [2, 4]]])
TENSOR.ndim   # 3
TENSOR.shape  # torch.Size([1, 3, 2])
TENSOR[0]
```

```python
TENSOR = torch.tensor([[[1, 2], [3, 6], [2, 4]],
                       [[1, 2], [3, 6], [2, 4]]])
TENSOR.ndim   # 3
TENSOR.shape  # torch.Size([2, 3, 2])
TENSOR[0]
TENSOR[1]
```

```python
# This raises a ValueError: every list at the same nesting level must have the
# same length, but the first block has 3 rows and the second block has 4
TENSOR = torch.tensor([[[1, 2], [3, 6], [2, 4]],
                       [[1, 2], [3, 6], [2, 4], [2, 4]]])
```

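| Name | What is it? | Number of dimensions | Lower or upper (usually/example) |
|---|---|---|---|
| scalar | a single number | 0 | Lower (a) |
| vector | a number with direction (e.g. wind speed with direction), but can also have many other numbers | 1 | Lower (y) |
| matrix | a 2-dimensional array of numbers | 2 | Upper (Q) |
| tensor | an n-dimensional array of numbers | can be any number; a 0-dimensional tensor is a scalar, a 1-dimensional tensor is a vector | Upper (X) |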
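As a quick check of the table, here is a minimal sketch that reuses the example values from the code above and prints ndim and shape for one tensor of each kind. In every case ndim equals the number of entries in shape (and the number of nested square brackets).

```python
import torch

# One example for each row of the table
scalar = torch.tensor(7)
vector = torch.tensor([7, 7])
matrix = torch.tensor([[7, 8], [9, 10]])
tensor = torch.tensor([[[1, 2, 3], [3, 6, 9], [2, 4, 6]]])

for name, t in [("scalar", scalar), ("vector", vector), ("matrix", matrix), ("tensor", tensor)]:
    # ndim is the length of shape, i.e. the number of dimensions
    print(f"{name}: ndim={t.ndim}, shape={tuple(t.shape)}")
```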

Creating tensors

Why random tensors?

Neural networks start with random numbers and adjust them to better represent the data: start with random numbers → look at data → update random numbers → look at data → update random numbers

```python
random_tensor = torch.rand(size=(3, 4))
random_tensor, random_tensor.dtype
```

```
(tensor([[0.6541, 0.4807, 0.2162, 0.6168],
         [0.4428, 0.6608, 0.6194, 0.8620],
         [0.2795, 0.6055, 0.4958, 0.5483]]),
 torch.float32)
```

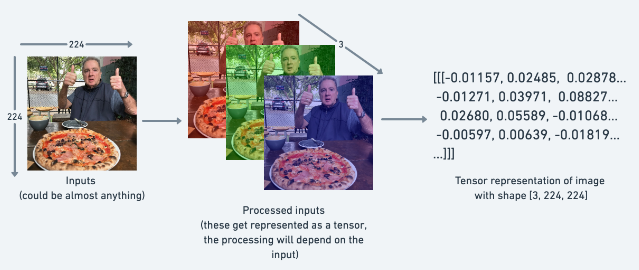

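```python
random_image_size_tensor = torch.rand(size=(224, 224, 3))
random_image_size_tensor.shape, random_image_size_tensor.ndim
```

```
(torch.Size([224, 224, 3]), 3)
```

Images are often represented as tensors with shape [3, 224, 224], meaning [colour_channels, height, width]: the image has 3 colour channels (red, green, blue), a height of 224 pixels and a width of 224 pixels. The tensor above uses the other common order, [height, width, colour_channels].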

Zeros and ones

```python
zeros = torch.zeros(size=(3, 4))
zeros
```

```
tensor([[0., 0., 0., 0.],
        [0., 0., 0., 0.],
        [0., 0., 0., 0.]])
```

```python
ones = torch.ones(size=(3, 4))
ones
ones.dtype
```

```
tensor([[1., 1., 1., 1.],
        [1., 1., 1., 1.],
        [1., 1., 1., 1.]])
torch.float32
```

Creating a range of tensors and tensors-like

```python
one_to_ten = torch.arange(start=1, end=11, step=1)
one_to_ten
```

```
tensor([ 1,  2,  3,  4,  5,  6,  7,  8,  9, 10])
```

```python
# A tensor of zeros with the same shape as the input
ten_zeros = torch.zeros_like(input=one_to_ten)
ten_zeros
```

```
tensor([0, 0, 0, 0, 0, 0, 0, 0, 0, 0])
```

```python
# A tensor of ones with the same shape as the input
ten_ones = torch.ones_like(input=one_to_ten)
ten_ones
```

```
tensor([1, 1, 1, 1, 1, 1, 1, 1, 1, 1])
```

Tensor datatypes

torch.Tensor data types

Precision in computing

Tensor datatypes are one of the 3 big errors you'll run into with PyTorch and deep learning:

Tensors not the right datatype (dtype)

Tensors not the right shape (shape)

Tensors not on the right device (device)

```python
float_32_tensor = torch.tensor([3.0, 6.0, 9.0], dtype=None)  # None -> default float32
float_32_tensor
float_32_tensor.dtype
```

```
tensor([3., 6., 9.])
torch.float32
```

```python
float_16_tensor = torch.tensor([3.0, 6.0, 9.0], dtype=torch.float16)
float_16_tensor
float_16_tensor.dtype
```

```
tensor([3., 6., 9.], dtype=torch.float16)
torch.float16
```

```python
float_32_tensor = torch.tensor([3.0, 6.0, 9.0],
                               dtype=None,          # datatype of the tensor
                               device=None,         # device the tensor lives on
                               requires_grad=False) # whether to track gradients
float_32_tensor
float_32_tensor.dtype
```

```
tensor([3., 6., 9.])
torch.float32
```

```python
float_16_tensor = float_32_tensor.type(torch.float16)
float_16_tensor
```

```
tensor([3., 6., 9.], dtype=torch.float16)
```

When datatypes are mixed, PyTorch automatically converts (upcasts) to the wider type:

float16 × float32 → float32

```python
test = float_16_tensor * float_32_tensor
test, test.dtype
```

```
(tensor([ 9., 36., 81.]), torch.float32)
```

int64 × float32 → float32

```python
int_64_tensor = torch.tensor([3, 6, 9], dtype=torch.int64)
int_64_tensor, int_64_tensor.dtype
```

```
(tensor([3, 6, 9]), torch.int64)
```

```python
test = float_32_tensor * int_64_tensor
test, test.dtype
```

```
(tensor([ 9., 36., 81.]), torch.float32)
```

long × float32 → float32 (torch.long is an alias for torch.int64)

```python
long_tensor = torch.tensor([3, 6, 9], dtype=torch.long)
long_tensor, long_tensor.dtype
```

```
(tensor([3, 6, 9]), torch.int64)
```

```python
test = float_32_tensor * long_tensor
test, test.dtype
```

```
(tensor([ 9., 36., 81.]), torch.float32)
```
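Element-wise operations promote mixed datatypes as shown above, but not every operation does. A small sketch, assuming a recent PyTorch version: torch.promote_types() reports the dtype that promotion would pick, while torch.matmul() refuses a float/integer mix, which is one way the "wrong datatype" error shows up in practice.

```python
import torch

float_32_tensor = torch.tensor([3.0, 6.0, 9.0])  # float32 by default
int_64_tensor = torch.tensor([3, 6, 9])          # int64 by default

# The dtype PyTorch promotes to when two dtypes are combined
print(torch.promote_types(torch.float16, torch.float32))  # torch.float32
print(torch.promote_types(torch.int64, torch.float32))    # torch.float32

# Element-wise multiplication promotes automatically
print((float_32_tensor * int_64_tensor).dtype)            # torch.float32

# Matrix multiplication does not promote: mixed dtypes raise an error
try:
    torch.matmul(float_32_tensor, int_64_tensor)
except RuntimeError as err:
    print("RuntimeError:", err)

# Casting one operand first fixes it: 3*3 + 6*6 + 9*9 = 126
fixed = torch.matmul(float_32_tensor, int_64_tensor.type(torch.float32))
print(fixed)  # tensor(126.)
```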

shape / size(): the shape of the tensor

dtype: the datatype of its elements

device: the device the tensor is stored on

```python
some_tensor = torch.rand(3, 4)
print(some_tensor)
print(f"Shape of tensor: {some_tensor.shape}")
print(f"Datatype of tensor: {some_tensor.dtype}")
print(f"Device tensor is stored on: {some_tensor.device}")
```

```
tensor([[0.4688, 0.0055, 0.8551, 0.0646],
        [0.6538, 0.5157, 0.4071, 0.2109],
        [0.9960, 0.3061, 0.9369, 0.7008]])
Shape of tensor: torch.Size([3, 4])
Datatype of tensor: torch.float32
Device tensor is stored on: cpu
```

Manipulating tensors

Addition

Subtraction

Multiplication (element-wise)

Division

Matrix multiplication

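Basic operations

```python
tensor = torch.tensor([1, 2, 3])
tensor + 10  # tensor([11, 12, 13])
```

```python
# Tensors don't change unless reassigned
tensor  # tensor([1, 2, 3])
```

```python
tensor = tensor - 10
tensor  # tensor([-9, -8, -7])
```

PyTorch also has built-in functions such as torch.mul() (short for multiplication) and torch.add() to perform basic operations.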
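A minimal sketch of the built-in functions next to the operators they mirror; torch.sub() and torch.div() are the subtraction and division counterparts of torch.add() and torch.mul(). Note that division of an integer tensor returns floats.

```python
import torch

tensor = torch.tensor([1, 2, 3])

# Each built-in function gives the same result as the matching Python operator
added = torch.add(tensor, 10)       # same as tensor + 10 -> tensor([11, 12, 13])
subtracted = torch.sub(tensor, 10)  # same as tensor - 10 -> tensor([-9, -8, -7])
multiplied = torch.mul(tensor, 10)  # same as tensor * 10 -> tensor([10, 20, 30])
divided = torch.div(tensor, 10)     # same as tensor / 10 -> tensor([0.1000, 0.2000, 0.3000])

print(added, subtracted, multiplied, divided)

# The original tensor is unchanged because no result was assigned back to it
print(tensor)  # tensor([1, 2, 3])
```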

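Matrix multiplication

How to Multiply Matrices

The two main rules to remember for matrix multiplication are (@ is Python's matrix multiplication operator):

1. The inner dimensions must match: (3, 2) @ (3, 2) won't work, while (2, 3) @ (3, 2) will work.

2. The resulting matrix has the shape of the outer dimensions: (2, 3) @ (3, 2) → (2, 2).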
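Both rules can be checked with a short sketch using random matrices; the names a and b are just for illustration, and @ is shorthand for torch.matmul().

```python
import torch

a = torch.rand(2, 3)
b = torch.rand(3, 2)

# Rule 1: inner dimensions match (3 and 3), so this works
# Rule 2: the result takes the outer dimensions -> (2, 2)
print((a @ b).shape)  # torch.Size([2, 2])

# (3, 2) @ (2, 3): inner dimensions 2 and 2 match, result is (3, 3)
print((b @ a).shape)  # torch.Size([3, 3])

# (3, 2) @ (3, 2): inner dimensions 2 and 3 don't match
try:
    b @ b
except RuntimeError as err:
    print("RuntimeError:", err)
```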

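```python
import torch

tensor = torch.tensor([1, 2, 3])
tensor.shape  # torch.Size([3])
```

```python
torch.matmul(tensor, tensor)  # tensor(14): 1*1 + 2*2 + 3*3
```

```python
# torch.mm() only accepts 2-D matrices, so the 1-D vectors are reshaped first:
# (1, 3) @ (3, 1) -> (1, 1)
torch.mm(tensor.unsqueeze(0), tensor.unsqueeze(1))  # tensor([[14]])
```

```python
%%time
# Matrix multiplication by hand with a Python loop
value = 0
for i in range(len(tensor)):
    value += tensor[i] * tensor[i]
value
```

```
CPU times: user 773 µs, sys: 0 ns, total: 773 µs
Wall time: 499 µs
tensor(14)
```

```python
%%time
torch.matmul(tensor, tensor)
```

```
CPU times: user 146 µs, sys: 83 µs, total: 229 µs
Wall time: 171 µs
tensor(14)
```

The built-in torch.matmul() is noticeably faster than the hand-written loop (171 µs vs 499 µs wall time here).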

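One of the most common errors in deep learning: shape errors

matrixmultiplication (a matrix multiplication visualizer)

Because much of deep learning is multiplying matrices and performing operations on them, and matrices have strict rules about which shapes and sizes can be combined, one of the most common errors you'll run into in deep learning is a shape mismatch.

```python
tensor_A = torch.tensor([[1, 2],
                         [3, 4],
                         [5, 6]], dtype=torch.float32)

tensor_B = torch.tensor([[7, 10],
                         [8, 11],
                         [9, 12]], dtype=torch.float32)

torch.matmul(tensor_A, tensor_B)  # (3, 2) @ (3, 2): inner dimensions don't match
```

```
...
RuntimeError: mat1 and mat2 shapes cannot be multiplied (3x2 and 3x2)
```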

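To make matrix multiplication work between tensor_A and tensor_B, their inner dimensions have to match.

One way to do this is with a transpose (switching the dimensions of a given tensor).

You can perform a transpose in PyTorch using either:

torch.transpose(input, dim0, dim1), where input is the tensor to transpose and dim0 and dim1 are the dimensions to be swapped.

tensor.T, where tensor is the tensor to transpose.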
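A quick sketch showing that, for a 2-D tensor, both options produce the same result (tensor_B uses the values from the example above):

```python
import torch

tensor_B = torch.tensor([[7, 10], [8, 11], [9, 12]], dtype=torch.float32)

# Both swap dimension 0 and dimension 1 of a 2-D tensor
transposed_1 = torch.transpose(tensor_B, 0, 1)
transposed_2 = tensor_B.T

print(tensor_B.shape, transposed_1.shape)       # torch.Size([3, 2]) torch.Size([2, 3])
print(torch.equal(transposed_1, transposed_2))  # True
```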

```python
print(tensor_A)
print(tensor_B.T)
```

```
tensor([[1., 2.],
        [3., 4.],
        [5., 6.]])
tensor([[ 7.,  8.,  9.],
        [10., 11., 12.]])
```

```python
# The operation works when tensor_B is transposed
print(f"Original shapes: tensor_A = {tensor_A.shape}, tensor_B = {tensor_B.shape}\n")
print(f"New shapes: tensor_A = {tensor_A.shape} (same as above), tensor_B.T = {tensor_B.T.shape}\n")
print(f"Multiplying: {tensor_A.shape} * {tensor_B.T.shape} <- inner dimensions match\n")
print("Output:\n")
output = torch.matmul(tensor_A, tensor_B.T)
print(output)
print(f"\nOutput shape: {output.shape}")
```

```
Original shapes: tensor_A = torch.Size([3, 2]), tensor_B = torch.Size([3, 2])

New shapes: tensor_A = torch.Size([3, 2]) (same as above), tensor_B.T = torch.Size([2, 3])

Multiplying: torch.Size([3, 2]) * torch.Size([2, 3]) <- inner dimensions match

Output:

tensor([[ 27.,  30.,  33.],
        [ 61.,  68.,  75.],
        [ 95., 106., 117.]])

Output shape: torch.Size([3, 3])
```

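Matrix multiplication like this is also referred to as the dot product of two matrices. Neural networks are full of matrix multiplications and dot products.

The torch.nn.Linear() module (we'll see it in action later), also known as a feed-forward layer or fully connected layer, implements a matrix multiplication between an input x and a weights matrix A:

$$y = x \cdot A^T + b$$

x is the input to the layer (deep learning is a stack of layers like torch.nn.Linear() and others on top of each other).

A is the weights matrix created by the layer. It starts out as random numbers that get adjusted as the neural network learns to better represent patterns in the data (notice the "T": the weights matrix gets transposed).

Note: You might also often see W or another letter like X used to denote the weights matrix.

b is the bias term used to slightly offset the weights and inputs.

y is the output (a manipulation of the input in the hope of discovering patterns in it).

This is a linear function (you may have seen something like $y = mx+b$ in high school or elsewhere), and it can be used to draw a straight line!

Let's try out a linear layer.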

```python
# The linear layer starts with a random weights matrix, so seed it for reproducibility
torch.manual_seed(42)
# in_features must match the inner (last) dimension of the input, which is 2 here
linear = torch.nn.Linear(in_features=2, out_features=6)
x = tensor_A
output = linear(x)
print(f"Input shape: {x.shape}\n")
print(f"Output:\n{output}\n\nOutput shape: {output.shape}")
```

```
Input shape: torch.Size([3, 2])

Output:
tensor([[2.2368, 1.2292, 0.4714, 0.3864, 0.1309, 0.9838],
        [4.4919, 2.1970, 0.4469, 0.5285, 0.3401, 2.4777],
        [6.7469, 3.1648, 0.4224, 0.6705, 0.5493, 3.9716]],
       grad_fn=<AddmmBackward0>)

Output shape: torch.Size([3, 6])
```
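To connect the layer back to $y = x \cdot A^T + b$: torch.nn.Linear stores A as linear.weight (shape [out_features, in_features]) and b as linear.bias. A minimal sketch that recomputes the layer's output by hand and compares the two:

```python
import torch

torch.manual_seed(42)
linear = torch.nn.Linear(in_features=2, out_features=6)
x = torch.tensor([[1, 2], [3, 4], [5, 6]], dtype=torch.float32)

# A is linear.weight with shape [6, 2]; b is linear.bias with shape [6]
print(linear.weight.shape, linear.bias.shape)  # torch.Size([6, 2]) torch.Size([6])

# y = x · A^T + b computed by hand: (3, 2) @ (2, 6) -> (3, 6), plus the bias
manual = torch.matmul(x, linear.weight.T) + linear.bias
print(torch.allclose(linear(x), manual))       # True
```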

Finding the min, max, mean & sum

```python
x = torch.arange(0, 100, 10)
x
```

```
tensor([ 0, 10, 20, 30, 40, 50, 60, 70, 80, 90])
```

```python
print(f"Minimum: {x.min()}")
print(f"Maximum: {x.max()}")
# mean() needs a floating-point tensor, so convert the int64 tensor first
print(f"Mean: {x.type(torch.float32).mean()}")
print(f"Sum: {x.sum()}")
```

```
Minimum: 0
Maximum: 90
Mean: 45.0
Sum: 450
```

Finding the positions (indices) of the max and min values: torch.argmax(), torch.argmin().

```python
tensor = torch.arange(10, 100, 10)
print(f"Tensor: {tensor}")

# Return the index of the max and min values
print(f"Index where max value occurs: {tensor.argmax()}")
print(f"Index where min value occurs: {tensor.argmin()}")
```

```
Tensor: tensor([10, 20, 30, 40, 50, 60, 70, 80, 90])
Index where max value occurs: 8
Index where min value occurs: 0
```
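On a multi-dimensional tensor, argmax() and argmin() return an index into the flattened tensor unless you pass dim. A sketch with a made-up 2-D tensor of scores (the values are illustrative only):

```python
import torch

# Hypothetical example: 2 rows of 3 scores each
scores = torch.tensor([[0.1, 0.7, 0.2],
                       [0.6, 0.3, 0.1]])

print(scores.argmax())       # tensor(1): index into the flattened tensor
print(scores.argmax(dim=1))  # tensor([1, 0]): index of the max within each row
print(scores.max(dim=1))     # the max values of each row and their indices
```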

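Changing the datatype: torch.Tensor.type(dtype=None)

```python
tensor = torch.arange(10., 100., 10.)
tensor.dtype  # torch.float32
```

```python
tensor_float16 = tensor.type(torch.float16)
tensor_float16
```

```
tensor([10., 20., 30., 40., 50., 60., 70., 80., 90.], dtype=torch.float16)
```

```python
tensor_int8 = tensor.type(torch.int8)
tensor_int8
```

```
tensor([10, 20, 30, 40, 50, 60, 70, 80, 90], dtype=torch.int8)
```

The lower the number of bits (e.g. 32, 16, 8), the less precisely a computer stores the value. Less storage usually means faster computation and a smaller overall model. Mobile-based neural networks often run on 8-bit integers, which are smaller and faster than float32 but less accurate.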
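The storage side of this trade-off is easy to measure: Tensor.element_size() returns the number of bytes per element. A minimal sketch using the same 9-element tensor as above:

```python
import torch

tensor = torch.arange(10., 100., 10.)  # 9 elements, float32 by default

for dtype in [torch.float32, torch.float16, torch.int8]:
    converted = tensor.type(dtype)
    # element_size() is the number of bytes used to store one element
    total_bytes = converted.element_size() * converted.numel()
    print(f"{dtype}: {converted.element_size()} bytes per element, {total_bytes} bytes in total")
```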

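torch.Tensor documentation

Reshaping, viewing, stacking, squeezing, unsqueezing and permuting

| Method | Description |
|---|---|
| torch.reshape(input, shape) | Reshapes input to shape (if compatible); torch.Tensor.reshape() can also be used. |
| Tensor.view(shape) | Returns a view of the original tensor in a different shape, but sharing the same data as the original tensor. |
| torch.stack(tensors, dim=0) | Concatenates a sequence of tensors along a new dimension (dim); all tensors must be the same size. |
| torch.squeeze(input) | Squeezes input to remove all the dimensions with value 1. |
| torch.unsqueeze(input, dim) | Returns input with a dimension value of 1 added at dim. |
| torch.permute(input, dims) | Returns a view of the original input with its dimensions permuted (rearranged) to dims. |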

Reshaping

```python
import torch

x = torch.arange(1., 8.)
x, x.shape
```

```
(tensor([1., 2., 3., 4., 5., 6., 7.]), torch.Size([7]))
```

```python
# Add an extra dimension
x_reshaped = x.reshape(1, 7)
x_reshaped, x_reshaped.shape
```

```
(tensor([[1., 2., 3., 4., 5., 6., 7.]]), torch.Size([1, 7]))
```

x.reshape(1, 8) → error, because 1 × 8 = 8 elements doesn't match the original 7.

x.reshape(2, 7) → error, because 2 × 7 = 14 would double the number of elements without adding any new ones.

x.reshape(7, 1) → works: the row becomes a column.

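View

```python
# z keeps the same data as x but views it with a different shape
z = x.view(1, 7)
z, z.shape
```

```
(tensor([[1., 2., 3., 4., 5., 6., 7.]]), torch.Size([1, 7]))
```

Changing the view of a tensor with x.view() really only creates a new view of the same tensor.

So changing the view also changes the original tensor.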
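The output below comes from modifying z in place, but the code that produced it isn't shown in these notes. A self-contained reconstruction, assuming the first element was set with z[:, 0] = 5:

```python
import torch

x = torch.arange(1., 8.)
z = x.view(1, 7)

# Assumed step: change the first element through the view z
z[:, 0] = 5

# x changes too, because z and x share the same underlying data
print(z, x)
```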

```
(tensor([[5., 2., 3., 4., 5., 6., 7.]]), tensor([5., 2., 3., 4., 5., 6., 7.]))
```

Stacking

Stack copies of the tensor on top of each other with torch.stack() (four copies here).

```python
x_stacked = torch.stack([x, x, x, x], dim=0)
x_stacked
```

```
tensor([[5., 2., 3., 4., 5., 6., 7.],
        [5., 2., 3., 4., 5., 6., 7.],
        [5., 2., 3., 4., 5., 6., 7.],
        [5., 2., 3., 4., 5., 6., 7.]])
```

```python
x_stacked = torch.stack([x, x, x, x], dim=1)
x_stacked
```

```
tensor([[5., 5., 5., 5.],
        [2., 2., 2., 2.],
        [3., 3., 3., 3.],
        [4., 4., 4., 4.],
        [5., 5., 5., 5.],
        [6., 6., 6., 6.],
        [7., 7., 7., 7.]])
```

torch.stack([x, x, x, x], dim=2) does not work: stacking 1-D tensors gives a 2-D result, so the new dimension can only go at dim 0 or 1 (or -2 or -1), and dim=2 is out of range.

torch.stack vs torch.vstack vs torch.hstack

torch.stack

```
torch.stack(tensors, dim=0, *, out=None) → Tensor
```

Concatenates a sequence of tensors along a new dimension.

All tensors need to be of the same size.

```python
x = torch.randn(2, 3)
x, x.size()

x1 = torch.stack((x, x))          # default dim=0
x1, x1.size()

x2 = torch.stack((x, x), dim=1)
x2, x2.size()

x3 = torch.stack((x, x), dim=2)
x3, x3.size()

x4 = torch.stack((x, x), dim=-1)  # -1 is the last dimension of the result, the same as dim=2 here
x4, x4.size()
```

```
(tensor([[ 0.1801,  0.1566, -2.0349],
        [ 0.0183, -0.0088, -0.6409]]), torch.Size([2, 3]))

(tensor([[[ 1.3646, -0.4994, -0.6540],
         [-0.3804, -0.2373,  1.8952]],

        [[ 1.3646, -0.4994, -0.6540],
         [-0.3804, -0.2373,  1.8952]]]), torch.Size([2, 2, 3]))

(tensor([[[ 1.3646, -0.4994, -0.6540],
         [ 1.3646, -0.4994, -0.6540]],

        [[-0.3804, -0.2373,  1.8952],
         [-0.3804, -0.2373,  1.8952]]]), torch.Size([2, 2, 3]))

(tensor([[[ 1.3646,  1.3646],
         [-0.4994, -0.4994],
         [-0.6540, -0.6540]],

        [[-0.3804, -0.3804],
         [-0.2373, -0.2373],
         [ 1.8952,  1.8952]]]), torch.Size([2, 3, 2]))

(tensor([[[ 1.3646,  1.3646],
         [-0.4994, -0.4994],
         [-0.6540, -0.6540]],

        [[-0.3804, -0.3804],
         [-0.2373, -0.2373],
         [ 1.8952,  1.8952]]]), torch.Size([2, 3, 2]))
```

The first output comes from a different run of torch.randn(), so its values don't match the stacked copies, which all use x = [[1.3646, -0.4994, -0.6540], [-0.3804, -0.2373, 1.8952]].

torch.vstack

```
torch.vstack(tensors, *, out=None) → Tensor
```

Stacks tensors in sequence vertically (row-wise).

This is equivalent to concatenation along the first axis after all 1-D tensors have been reshaped by torch.atleast_2d().

```python
a = torch.tensor([1, 2, 3])
b = torch.tensor([4, 5, 6])
torch.vstack((a, b))

a = torch.tensor([[1], [2], [3]])
b = torch.tensor([[4], [5], [6]])
torch.vstack((a, b))
```

```
tensor([[1, 2, 3],
        [4, 5, 6]])
tensor([[1],
        [2],
        [3],
        [4],
        [5],
        [6]])
```

torch.hstack

```
torch.hstack(tensors, *, out=None) → Tensor
```

Stacks tensors in sequence horizontally (column-wise).

This is equivalent to concatenation along the first axis for 1-D tensors, and along the second axis for all other tensors.

```python
a = torch.tensor([1, 2, 3])
b = torch.tensor([4, 5, 6])
torch.hstack((a, b))

a = torch.tensor([[1], [2], [3]])
b = torch.tensor([[4], [5], [6]])
torch.hstack((a, b))
```

```
tensor([1, 2, 3, 4, 5, 6])
tensor([[1, 4],
        [2, 5],
        [3, 6]])
```
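The key difference between the three is whether a new dimension is created. A sketch comparing result shapes for two 1-D tensors, plus torch.cat() (not covered above) as the concatenation that hstack reduces to for 1-D inputs:

```python
import torch

a = torch.tensor([1, 2, 3])
b = torch.tensor([4, 5, 6])

# torch.stack adds a NEW dimension, so two 1-D tensors give a 2-D result
print(torch.stack((a, b), dim=0).shape)  # torch.Size([2, 3])
print(torch.stack((a, b), dim=1).shape)  # torch.Size([3, 2])

# For 1-D inputs the new dimension must be in [-2, 1], so dim=2 is out of range
try:
    torch.stack((a, b), dim=2)
except IndexError as err:
    print("IndexError:", err)

# vstack and hstack join along EXISTING dimensions instead
print(torch.vstack((a, b)).shape)        # torch.Size([2, 3]): 1-D inputs are treated as rows
print(torch.hstack((a, b)).shape)        # torch.Size([6])

# For 1-D tensors, hstack is the same as torch.cat along dim 0
print(torch.equal(torch.hstack((a, b)), torch.cat((a, b), dim=0)))  # True
```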

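Squeeze

Removes all single dimensions from a tensor (squeezes the tensor down to only the dimensions with size greater than 1).

torch.squeeze

```
torch.squeeze(input: Tensor, dim: Optional[Union[int, List[int]]]) → Tensor
```

Returns a tensor with all specified dimensions of input of size 1 removed.

For example, if input is of shape (A × 1 × B × C × 1 × D), then input.squeeze() will be of shape (A × B × C × D).

When dim is given, the squeeze is only applied to the given dimension(s): if input is of shape (A × 1 × B), squeeze(input, 0) leaves the tensor unchanged, but squeeze(input, 1) squeezes it to shape (A × B).

The returned tensor shares the storage with the input tensor, so changing the contents of one will change the contents of the other.

```python
print(f"Previous tensor: {x_reshaped}")
print(f"Previous shape: {x_reshaped.shape}")

# Remove the extra dimension from x_reshaped
x_squeezed = x_reshaped.squeeze()
print(f"\nNew tensor: {x_squeezed}")
print(f"New shape: {x_squeezed.shape}")
```

```
Previous tensor: tensor([[5., 2., 3., 4., 5., 6., 7.]])
Previous shape: torch.Size([1, 7])

New tensor: tensor([5., 2., 3., 4., 5., 6., 7.])
New shape: torch.Size([7])
```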

Unsqueeze

Adds a dimension of size 1 at a specific index.

torch.unsqueeze

```
torch.unsqueeze(input, dim) → Tensor
```

Returns a new tensor with a dimension of size one inserted at the specified position.

The returned tensor shares the same underlying data with this tensor.

A dim value within the range [-input.dim() - 1, input.dim() + 1) can be used. A negative dim corresponds to unsqueeze() applied at dim = dim + input.dim() + 1.

```python
print(f"Previous tensor: {x_squeezed}")
print(f"Previous shape: {x_squeezed.shape}")

# Add an extra dimension at dim 0
x_unsqueezed = x_squeezed.unsqueeze(dim=0)
print(f"\nNew tensor: {x_unsqueezed}")
print(f"New shape: {x_unsqueezed.shape}")

# Add an extra dimension at dim 1
x_unsqueezed = x_squeezed.unsqueeze(dim=1)
print(f"\nNew tensor: {x_unsqueezed}")
print(f"New shape: {x_unsqueezed.shape}")
```

```
Previous tensor: tensor([5., 2., 3., 4., 5., 6., 7.])
Previous shape: torch.Size([7])

New tensor: tensor([[5., 2., 3., 4., 5., 6., 7.]])
New shape: torch.Size([1, 7])

New tensor: tensor([[5.],
        [2.],
        [3.],
        [4.],
        [5.],
        [6.],
        [7.]])
New shape: torch.Size([7, 1])
```
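The shape rules quoted from the documentation above can be checked directly. A sketch with concrete sizes (A=2, B=3, C=4, D=5 are arbitrary choices for illustration):

```python
import torch

# squeeze(): (A × 1 × B × C × 1 × D) -> (A × B × C × D)
t = torch.zeros(2, 1, 3, 4, 1, 5)
print(t.squeeze().shape)          # torch.Size([2, 3, 4, 5])

# squeeze with dim on (A × 1 × B): only the given dimension is considered
u = torch.zeros(2, 1, 3)
print(torch.squeeze(u, 0).shape)  # torch.Size([2, 1, 3]): dim 0 has size 2, so nothing changes
print(torch.squeeze(u, 1).shape)  # torch.Size([2, 3])

# unsqueeze with a negative dim: applied at dim + input.dim() + 1
v = torch.arange(7.)              # input.dim() == 1
print(v.unsqueeze(-1).shape)      # -1 + 1 + 1 = 1 -> torch.Size([7, 1])
print(v.unsqueeze(-2).shape)      # -2 + 1 + 1 = 0 -> torch.Size([1, 7])
```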

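Permute

Rearranges the order of the axes.

torch.permute

```
torch.permute(input, dims) → Tensor
```

Returns a view of the original tensor input with its dimensions permuted.

```python
>>> x = torch.randn(2, 3, 5)
>>> x.size()
torch.Size([2, 3, 5])
>>> torch.permute(x, (2, 0, 1)).size()
torch.Size([5, 2, 3])
```

```python
# A common image layout: [height, width, colour_channels]
x_original = torch.rand(size=(224, 224, 3))

# Permute to [colour_channels, height, width]: axis 2 -> 0, axis 0 -> 1, axis 1 -> 2
x_permuted = x_original.permute(2, 0, 1)

print(f"Previous shape: {x_original.shape}")
print(f"New shape: {x_permuted.shape}")
```

```
Previous shape: torch.Size([224, 224, 3])
New shape: torch.Size([3, 224, 224])
```

Because permuting returns a view (it shares the same data as the original), the values in the permuted tensor are the same as in the original, and changing a value in the view changes the original too.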
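A minimal sketch of that shared data: after permute(2, 0, 1), element [c, h, w] of the permuted tensor is element [h, w, c] of the original, and a write through either one is visible in the other. The value 728218 and the indices are arbitrary.

```python
import torch

x_original = torch.rand(size=(224, 224, 3))  # [height, width, colour_channels]
x_permuted = x_original.permute(2, 0, 1)     # [colour_channels, height, width]

# permuted[c, h, w] refers to the same element as original[h, w, c]
print(x_permuted[2, 10, 20] == x_original[10, 20, 2])  # tensor(True)

# Writing through the permuted view changes the original tensor as well
x_permuted[2, 10, 20] = 728218
print(x_original[10, 20, 2])                           # tensor(728218.)

# Both tensors point at the same underlying memory
print(x_permuted.data_ptr() == x_original.data_ptr())  # True
```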

Selecting data (indexing)

To select specific data from a tensor (for example, only the first column or the second row), you can use indexing.

```python
import torch

x = torch.arange(1, 10).reshape(1, 3, 3)
x, x.shape
```

```
(tensor([[[1, 2, 3],
          [4, 5, 6],
          [7, 8, 9]]]),
 torch.Size([1, 3, 3]))
```

Index values go from the outer dimension → inner dimension (check the square brackets).

```python
print(f"First square bracket:\n{x[0]}")
print(f"Second square bracket: {x[0][0]}")
print(f"Third square bracket: {x[0][0][0]}")
```

```
First square bracket:
tensor([[1, 2, 3],
        [4, 5, 6],
        [7, 8, 9]])
Second square bracket: tensor([1, 2, 3])
Third square bracket: 1
```

Use : to specify "all values in this dimension", then a comma (,) to add another dimension.

```python
print(x[0][2][2])  # tensor(9)
print(x[:, :, 2])  # all of dims 0 and 1, index 2 of dim 2 -> tensor([[3, 6, 9]])
```
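A few more combinations of : and integer indices on the same tensor, as a sketch of how each index picks from one dimension (outer to inner):

```python
import torch

x = torch.arange(1, 10).reshape(1, 3, 3)

print(x[:, 0])     # all of dim 0, index 0 of dim 1 -> tensor([[1, 2, 3]])
print(x[:, :, 1])  # all of dims 0 and 1, index 1 of dim 2 -> tensor([[2, 5, 8]])
print(x[:, 1, 1])  # all of dim 0, index 1 of dims 1 and 2 -> tensor([5])
print(x[0, 0, :])  # same as x[0][0] -> tensor([1, 2, 3])
```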

PyTorch tensors and NumPy

NumPy is a popular Python numerical computing library, so PyTorch has functionality to interact with it nicely.

The two main methods you'll want to use for NumPy to PyTorch (and back again) are:

torch.from_numpy(ndarray): NumPy array → PyTorch tensor.

torch.Tensor.numpy(): PyTorch tensor → NumPy array.

NumPy array to tensor

```python
import torch
import numpy as np

array = np.arange(1.0, 8.0)
tensor = torch.from_numpy(array)
array, tensor
```

```
(array([1., 2., 3., 4., 5., 6., 7.]),
 tensor([1., 2., 3., 4., 5., 6., 7.], dtype=torch.float64))
```

By default, NumPy arrays are created with the datatype float64, and if you convert one to a PyTorch tensor, it keeps the same datatype (as above).

However, many PyTorch calculations default to using float32.

So to convert a NumPy array (float64) → PyTorch tensor (float64) → PyTorch tensor (float32), use tensor = torch.from_numpy(array).type(torch.float32).

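Changing the array here doesn't change the tensor, because array = array + 1 creates a new array instead of modifying the original one in place.

```python
array = array + 1
array, tensor
```

```
(array([2., 3., 4., 5., 6., 7., 8.]),
 tensor([1., 2., 3., 4., 5., 6., 7.], dtype=torch.float64))
```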
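The picture is different for in-place changes: torch.from_numpy() shares memory with the array it was built from, so modifying the array in place (e.g. array += 1) does show up in the tensor. A sketch contrasting it with torch.tensor(), which copies the data:

```python
import numpy as np
import torch

array = np.arange(1.0, 8.0)
tensor = torch.from_numpy(array)  # shares memory with array

# An in-place update writes into the shared memory, so the tensor sees it
array += 1
print(tensor)  # tensor([2., 3., 4., 5., 6., 7., 8.], dtype=torch.float64)

# torch.tensor() copies the data, so later changes to array don't reach the copy
copied = torch.tensor(array)
array += 1
print(copied)  # tensor([2., 3., 4., 5., 6., 7., 8.], dtype=torch.float64)
print(tensor)  # tensor([3., 4., 5., 6., 7., 8., 9.], dtype=torch.float64)
```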

Tensor to NumPy array

```python
tensor = torch.ones(7)
numpy_tensor = tensor.numpy()  # the array keeps the tensor's float32 dtype
tensor, numpy_tensor
```

```
(tensor([1., 1., 1., 1., 1., 1., 1.]),
 array([1., 1., 1., 1., 1., 1., 1.], dtype=float32))
```

Changing the tensor here doesn't change the array, because tensor = tensor + 1 creates a new tensor.

```python
tensor = tensor + 1
tensor, numpy_tensor
```

```
(tensor([2., 2., 2., 2., 2., 2., 2.]),
 array([1., 1., 1., 1., 1., 1., 1.], dtype=float32))
```
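As with torch.from_numpy(), the array returned by Tensor.numpy() on a CPU tensor shares memory with it, so an in-place operation (PyTorch marks these with a trailing underscore, such as add_()) is visible in the array. A minimal sketch:

```python
import torch

tensor = torch.ones(7)
numpy_tensor = tensor.numpy()  # on the CPU, the array shares memory with the tensor

# add_() modifies the tensor in place instead of creating a new one
tensor.add_(1)
print(numpy_tensor)  # [2. 2. 2. 2. 2. 2. 2.]
```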

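Reproducibility

PyTorch Reproducibility

Random seed (Wikipedia)

Pseudorandomness

Computers are fundamentally designed to be deterministic (each step is predictable), so the randomness they create is simulated randomness.

Neural networks start with random numbers to describe patterns in data (these numbers are poor descriptions) and try to improve them using tensor operations (and a few other things we haven't discussed yet) so they better describe the patterns in the data.

Process: start with random numbers → tensor operations → try to make better (again and again and again)

Although randomness is nice and powerful, sometimes you'd like a little less of it so you can perform repeatable experiments.

For example: can you get the same (or very similar) results when running the same code on computer A and on computer B?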

```python
import torch

# Create two random tensors
random_tensor_A = torch.rand(3, 4)
random_tensor_B = torch.rand(3, 4)

print(f"Tensor A:\n{random_tensor_A}\n")
print(f"Tensor B:\n{random_tensor_B}\n")
print("Does Tensor A equal Tensor B? (anywhere)")
random_tensor_A == random_tensor_B
```

```
Tensor A:
tensor([[0.8016, 0.3649, 0.6286, 0.9663],
        [0.7687, 0.4566, 0.5745, 0.9200],
        [0.3230, 0.8613, 0.0919, 0.3102]])

Tensor B:
tensor([[0.9536, 0.6002, 0.0351, 0.6826],
        [0.3743, 0.5220, 0.1336, 0.9666],
        [0.9754, 0.8474, 0.8988, 0.1105]])

Does Tensor A equal Tensor B? (anywhere)
tensor([[False, False, False, False],
        [False, False, False, False],
        [False, False, False, False]])
```

Let's try creating two random tensors with the same values.

The tensors will still contain random values, but they'll have the same "flavour".

This is where torch.manual_seed(seed) comes in, where seed is an integer (like 42, but it could be anything) that flavours the randomness.

```python
import torch

# Set the random seed
RANDOM_SEED = 42
torch.manual_seed(seed=RANDOM_SEED)
random_tensor_C = torch.rand(3, 4)

# The seed has to be reset before each new rand() call for the values to repeat
torch.random.manual_seed(seed=RANDOM_SEED)
random_tensor_D = torch.rand(3, 4)

print(f"Tensor C:\n{random_tensor_C}\n")
print(f"Tensor D:\n{random_tensor_D}\n")
print("Does Tensor C equal Tensor D? (anywhere)")
random_tensor_C == random_tensor_D
```

```
Tensor C:
tensor([[0.8823, 0.9150, 0.3829, 0.9593],
        [0.3904, 0.6009, 0.2566, 0.7936],
        [0.9408, 0.1332, 0.9346, 0.5936]])

Tensor D:
tensor([[0.8823, 0.9150, 0.3829, 0.9593],
        [0.3904, 0.6009, 0.2566, 0.7936],
        [0.9408, 0.1332, 0.9346, 0.5936]])

Does Tensor C equal Tensor D? (anywhere)
tensor([[True, True, True, True],
        [True, True, True, True],
        [True, True, True, True]])
```
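An alternative to reseeding the global random number generator is a dedicated torch.Generator passed to the random function through its generator argument. A sketch; as with the global seed, the generator has to be reseeded before each draw for the values to repeat:

```python
import torch

RANDOM_SEED = 42

# A dedicated generator, seeded independently of the global one
generator = torch.Generator().manual_seed(RANDOM_SEED)
random_tensor_E = torch.rand(3, 4, generator=generator)

# Reset the generator before the next draw
generator.manual_seed(RANDOM_SEED)
random_tensor_F = torch.rand(3, 4, generator=generator)

print(torch.equal(random_tensor_E, random_tensor_F))  # True
```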

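Running tensors on GPUs (and making faster computations)

Deep learning algorithms require a lot of numerical operations.

By default, these operations are often done on a CPU (central processing unit).

However, there's another common piece of hardware called a GPU (graphics processing unit), which is often much faster than a CPU at performing the specific types of operations neural networks need (matrix multiplications).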

Getting a GPU

Which GPU(s) to Get for Deep Learning: My Experience and Advice for Using GPUs in Deep Learning

| Method | Difficulty to setup | Pros | Cons | How to setup |
|---|---|---|---|---|
| Google Colab | Easy | Free to use, almost zero setup required, can share work with others as easy as a link | Doesn't save your data outputs, limited compute, subject to timeouts | Follow the Google Colab Guide |
| Use your own | Medium | Run everything locally on your own machine | GPUs aren't free, require upfront cost | Follow the PyTorch installation guidelines |
| Cloud computing (AWS, GCP, Azure) | Medium-Hard | Small upfront cost, access to almost infinite compute | Can get expensive if running continually, takes some time to setup right | Follow the PyTorch installation guidelines |

Checking the local GPU

```
C:\Users\windows11>nvidia-smi
Fri Oct 11 20:12:11 2024
+-----------------------------------------------------------------------------------------+
| NVIDIA-SMI 551.68                 Driver Version: 551.68         CUDA Version: 12.4     |
|-----------------------------------------+------------------------+----------------------+
| GPU  Name                     TCC/WDDM  | Bus-Id          Disp.A | Volatile Uncorr. ECC |
| Fan  Temp   Perf          Pwr:Usage/Cap |           Memory-Usage | GPU-Util  Compute M. |
|                                         |                        |               MIG M. |
|=========================================+========================+======================|
|   0  NVIDIA GeForce RTX 3090      WDDM  |   00000000:73:00.0  On |                  N/A |
| 30%   44C    P8             30W /  350W |    2290MiB /  24576MiB |      3%      Default |
|                                         |                        |                  N/A |
+-----------------------------------------+------------------------+----------------------+
```

Getting PyTorch to run on the GPU

Checking the CUDA package

Once you've got a GPU ready to access, the next step is getting PyTorch to use it for storing data (tensors) and computing on data (performing operations on tensors).

To do so, you can use the torch.cuda package.

```python
import torch

# True if PyTorch can see a CUDA-capable GPU
torch.cuda.is_available()
```

Output of nvidia-smi in the Colab runtime used here:

```
Fri Oct 11 12:55:41 2024
+---------------------------------------------------------------------------------------+
| NVIDIA-SMI 535.104.05             Driver Version: 535.104.05   CUDA Version: 12.2     |
|-----------------------------------------+----------------------+----------------------+
| GPU  Name                 Persistence-M | Bus-Id        Disp.A | Volatile Uncorr. ECC |
| Fan  Temp   Perf          Pwr:Usage/Cap |         Memory-Usage | GPU-Util  Compute M. |
|                                         |                      |               MIG M. |
|=========================================+======================+======================|
|   0  Tesla T4                       Off | 00000000:00:04.0 Off |                    0 |
| N/A   37C    P8               9W /  70W |      3MiB / 15360MiB |      0%      Default |
|                                         |                      |                  N/A |
+-----------------------------------------+----------------------+----------------------+

+---------------------------------------------------------------------------------------+
| Processes:                                                                            |
|  GPU   GI   CI        PID   Type   Process name                            GPU Memory |
|        ID   ID                                                             Usage      |
|=======================================================================================|
|  No running processes found                                                           |
+---------------------------------------------------------------------------------------+
```

If torch.cuda.is_available() returns True, PyTorch can see and use the GPU; if it returns False, it can't see a GPU, and you'll have to go back through the installation steps.

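Setting up device

Let's create a device variable to store the type of device available.

```python
device = "cuda" if torch.cuda.is_available() else "cpu"
device
```

If the above outputs "cuda", we can set all of our PyTorch code to use the available CUDA device (a GPU); if it outputs "cpu", our PyTorch code will stick with the CPU.

In PyTorch, it's best practice to write device-agnostic code: code that runs on the CPU (always available) or on the GPU (if available).

Best practices: PyTorch CUDA semantics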

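Computing on multiple GPUs

If you want faster computation you can use a GPU, and if you want even faster computation you can use multiple GPUs.

You can count the number of GPUs PyTorch has access to with torch.cuda.device_count().

```python
torch.cuda.device_count()
```

Knowing how many GPUs PyTorch can access is helpful in case you want to run one process on one GPU and another process on another GPU (PyTorch also has features that let you run a process across all GPUs).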

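Putting tensors (and models) on the GPU

You can put tensors (and models, as we'll see later) on a specific device by calling to(device) on them, where device is the target device you'd like the tensor (or model) to go to.

Why do this?

GPUs offer far faster numerical computing than CPUs, and if a GPU isn't available, our device-agnostic code (see above) will run on the CPU instead.

Putting a tensor on the GPU with to(device) (e.g. some_tensor.to(device)) returns a copy of that tensor, so the same tensor will then exist on both the CPU and the GPU. To overwrite tensors, reassign them: some_tensor = some_tensor.to(device)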
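A device-agnostic sketch of both ways to get a tensor onto the target device: moving an existing tensor with to(device), or creating it there directly through the device argument, which avoids the extra copy. It runs unchanged on a machine without a GPU.

```python
import torch

device = "cuda" if torch.cuda.is_available() else "cpu"

tensor = torch.tensor([1, 2, 3])     # created on the CPU
tensor_on_device = tensor.to(device) # a copy on the GPU, or the same tensor if device is "cpu"

# Creating the tensor directly on the target device skips the CPU copy
created_on_device = torch.tensor([1, 2, 3], device=device)

print(tensor.device, tensor_on_device.device, created_on_device.device)
```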

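Creating a tensor and putting it on the GPU

```python
# Set the device type
device = "cuda" if torch.cuda.is_available() else "cpu"

# Create a tensor (defaults to the CPU)
tensor = torch.tensor([1, 2, 3])
print(tensor, tensor.device)

# Move the tensor to the GPU (if available)
tensor_on_gpu = tensor.to(device)
tensor_on_gpu
```

```
tensor([1, 2, 3]) cpu
tensor([1, 2, 3], device='cuda:0')
```

The second tensor has device='cuda:0', which means it's stored on the 0th available GPU (GPUs are zero-indexed; if two GPUs were available, they would be 'cuda:0' and 'cuda:1' respectively, up to 'cuda:n').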

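Moving tensors back to the CPU

You'll need to do this if you want to interact with your tensors using NumPy (NumPy does not leverage the GPU).

Calling the torch.Tensor.numpy() method on tensor_on_gpu (tensor_on_gpu.numpy()) raises an error:

```
TypeError: can't convert cuda:0 device type tensor to numpy. Use Tensor.cpu() to copy the tensor to host memory first.
```

Instead, to get a tensor back to the CPU and usable with NumPy, use Tensor.cpu().

```python
# Copy the tensor back to the CPU, then convert it to a NumPy array
tensor_back_on_cpu = tensor_on_gpu.cpu().numpy()
tensor_back_on_cpu
```

The above returns a copy of the GPU tensor in CPU memory, so the original tensor_on_gpu is still on the GPU:

```
tensor([1, 2, 3], device='cuda:0')
```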
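Because Tensor.cpu() returns the tensor itself when it is already in CPU memory, chaining .cpu().numpy() works whichever device a tensor is on. A sketch wrapping it in a small helper; the name to_numpy is hypothetical, not a PyTorch function:

```python
import torch

def to_numpy(t: torch.Tensor):
    """Hypothetical helper: return a NumPy array of t's values from any device."""
    # .cpu() copies a GPU tensor to host memory, or returns t unchanged if it is
    # already on the CPU (in that case the NumPy array shares memory with t)
    return t.cpu().numpy()

device = "cuda" if torch.cuda.is_available() else "cpu"
tensor_on_device = torch.tensor([1, 2, 3], device=device)

print(to_numpy(tensor_on_device))  # [1 2 3]
print(tensor_on_device.device)     # the original tensor stays on its device
```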

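Random seeds on the GPU

On the CPU, the random seed is set with torch.manual_seed(). For GPU code, the CUDA seeds and cuDNN behaviour can be set as well:

```python
import torch

torch.manual_seed(42)                      # seeds the CPU (recent PyTorch versions seed CUDA devices too)
torch.cuda.manual_seed(42)                 # seeds the current GPU
torch.cuda.manual_seed_all(42)             # seeds all GPUs
torch.backends.cudnn.deterministic = True  # make cuDNN use deterministic algorithms
torch.backends.cudnn.benchmark = False     # turn off cuDNN's auto-tuner, which may pick different algorithms per run
```

With the settings above, PyTorch code running on the CPU and GPU becomes much more reproducible, although PyTorch's reproducibility notes state that completely reproducible results are not guaranteed across PyTorch releases, individual commits or different platforms.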
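A sketch bundling these calls into one function so every experiment can start from the same state; set_seeds is a hypothetical name, not part of PyTorch. torch.cuda.manual_seed_all() is documented as safe to call when CUDA isn't available (it is silently ignored), so this also runs on a CPU-only machine.

```python
import torch

def set_seeds(seed: int = 42) -> None:
    """Hypothetical helper: apply the seeding settings shown above in one call."""
    torch.manual_seed(seed)            # CPU (and, in recent versions, CUDA devices)
    torch.cuda.manual_seed_all(seed)   # all GPUs; ignored if CUDA isn't available
    torch.backends.cudnn.deterministic = True
    torch.backends.cudnn.benchmark = False

set_seeds(42)
first = torch.rand(2, 2)
set_seeds(42)
second = torch.rand(2, 2)

print(torch.equal(first, second))  # True
```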

Exercises

pytorch-deep-learning/extras/exercises

…\pytorch-deep-learning\extras\exercises\00_pytorch_fundamentals_exercises.ipynb

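Setting up a PyTorch environment

PyTorch Get Started

PyTorch 2.4.1

```shell
# Create and activate a new environment named pytorch241 with Python 3.8
conda create -n pytorch241 python=3.8
conda activate pytorch241

# Install PyTorch, torchvision and torchaudio with CUDA 12.4 support
conda install pytorch torchvision torchaudio pytorch-cuda=12.4 -c pytorch -c nvidia

# Remove the environment when it's no longer needed
conda env remove -n pytorch241

# Clear the cached package index
conda clean -i
```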