Artificial Intelligence in Practice: TensorFlow Notes (MOOC), Lecture 3: Neural Network Boilerplate

[TOC]

Boilerplate for building a neural network

Building a network with the TensorFlow API tf.keras

The six-step method

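1-import

Step 1: import the required modules, for example import tensorflow as tf.

2-train, test

Step 2: specify the training set and test set that are fed to the network, i.e. the training inputs x_train and labels y_train, and the test inputs x_test and labels y_test.

3-model = tf.keras.models.Sequential

Step 3: build the network layer by layer with model = tf.keras.models.Sequential(). This amounts to describing one forward pass.

4-model.compile

Step 4: configure the training method in model.compile(): choose the optimizer, the loss function, and the evaluation metric used during training.

5-model.fit

Step 5: run the training in model.fit(), passing the inputs and labels of the training and test sets, the size of each batch (batch_size), and the number of passes over the dataset (epochs).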

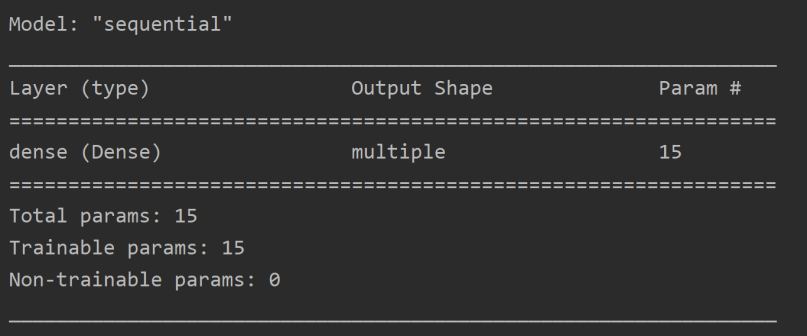

6-model.summary

Step 6: call model.summary() to print the network structure and the parameter counts.

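Function usage

tf.keras.models.Sequential()

```python
# Pass the layers as a list, ordered from input to output.
model = tf.keras.models.Sequential([
    # layer_1, layer_2, ...
])
```

Examples of layers (templates; the placeholders in the comments must be replaced with real values):

```python
# Flatten layer: reshapes each input sample into a 1-D array
tf.keras.layers.Flatten()

# Fully connected layer
tf.keras.layers.Dense(units,                    # number of neurons
                      activation=...,           # activation function, e.g. 'relu' or 'softmax'
                      kernel_regularizer=...)   # which regularizer, e.g. tf.keras.regularizers.l2()

# Convolutional layer
tf.keras.layers.Conv2D(filters=...,             # number of convolution kernels
                       kernel_size=...,         # kernel size
                       strides=...,             # convolution stride
                       padding='valid')         # 'valid' or 'same'

# LSTM layer
tf.keras.layers.LSTM(units)
```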

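model.compile()

compile configures how the network is trained: it specifies the optimizer, the loss function, and the accuracy metric used during training.

```python
model.compile(optimizer=optimizer,      # the optimizer
              loss=loss,                # the loss function
              metrics=['metric name'])  # the accuracy metric
```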

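optimizer

optimizer can be given either as a string naming the optimizer or as an optimizer object. The object form lets you set the learning rate, the momentum, and other hyperparameters.

Options:

```python
# string name  or  optimizer object (lr and momentum stand for values you choose)
# learning_rate is the current argument name; older TF 2.x releases also accept lr.
'sgd'      or tf.keras.optimizers.SGD(learning_rate=lr, momentum=momentum)
'adagrad'  or tf.keras.optimizers.Adagrad(learning_rate=lr)
'adadelta' or tf.keras.optimizers.Adadelta(learning_rate=lr)
'adam'     or tf.keras.optimizers.Adam(learning_rate=lr, beta_1=0.9, beta_2=0.999)
```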
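To make the roles of the learning rate and the momentum concrete, here is a minimal plain-Python sketch of a commonly documented form of the SGD-with-momentum update (velocity = momentum * velocity - learning_rate * gradient, then weight += velocity). The function name sgd_momentum_step and the toy loss w**2 are assumptions made for illustration; this is not the Keras implementation.

```python
def sgd_momentum_step(w, velocity, grad, learning_rate=0.1, momentum=0.9):
    """One SGD-with-momentum update for a single scalar weight."""
    # The velocity accumulates past gradients, scaled down by the momentum factor.
    velocity = momentum * velocity - learning_rate * grad
    w = w + velocity
    return w, velocity


# Toy problem (assumption): minimize loss(w) = w**2, whose gradient is 2*w.
w, v = 1.0, 0.0
for _ in range(200):
    w, v = sgd_momentum_step(w, v, grad=2.0 * w)

print(abs(w) < 1e-3)  # the weight has moved close to the minimum at 0
```

With momentum=0.0 the update reduces to plain gradient descent: each step subtracts learning_rate * grad from the weight.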

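loss

loss can be given either as a string naming the loss function or as a loss object.

Options:

```python
'mse' or tf.keras.losses.MeanSquaredError()
'sparse_categorical_crossentropy' or tf.keras.losses.SparseCategoricalCrossentropy(from_logits=False)
```

The network's output often has to pass through a function such as softmax, which turns it into a probability distribution, before the loss is computed.

from_logits tells the loss function whether the predictions it receives have already been converted to probabilities. False means the predictions are already a probability distribution (for example, the output layer uses softmax). True means the predictions are raw outputs that have not been converted, so the loss applies the conversion itself.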
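The effect of from_logits can be checked by hand. The sketch below is plain Python rather than the Keras implementation (Keras also clips probabilities for numerical stability, which is omitted here); the helper names softmax and sparse_categorical_crossentropy and the example logits are assumptions for illustration. It shows that feeding probabilities with from_logits=False gives the same loss as feeding raw outputs with from_logits=True.

```python
import math


def softmax(logits):
    """Turn raw outputs into a probability distribution."""
    m = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def sparse_categorical_crossentropy(label, prediction, from_logits=False):
    """Cross-entropy for one sample whose label is an integer class index."""
    probs = softmax(prediction) if from_logits else prediction
    return -math.log(probs[label])


logits = [1.0, 2.0, 0.5]   # raw network outputs (illustrative values)
probs = softmax(logits)    # what a softmax output layer would produce

loss_from_probs = sparse_categorical_crossentropy(1, probs, from_logits=False)
loss_from_logits = sparse_categorical_crossentropy(1, logits, from_logits=True)
print(abs(loss_from_probs - loss_from_logits) < 1e-12)
```

The mismatch to avoid is applying softmax twice (a softmax output layer together with from_logits=True) or not at all (raw outputs together with from_logits=False).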

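Metrics

metrics specifies how the network is evaluated.

Options (y_ is the label, y is the network's prediction):

```python
'accuracy'                     # y_ and y are both numeric values, e.g. y_=[1], y=[1]
'categorical_accuracy'         # y_ and y are both one-hot codes (probability distributions), e.g. y_=[0, 1, 0], y=[0.256, 0.695, 0.048]
'sparse_categorical_accuracy'  # y_ is a numeric value, y is a probability distribution, e.g. y_=[1], y=[0.256, 0.695, 0.048]
```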
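The three metrics differ only in the form of the labels and predictions they compare. The following plain-Python sketch spells that out; the function bodies are illustrative assumptions, not the Keras source, and the second sample in probs is made up for the example.

```python
def argmax(values):
    """Index of the largest entry."""
    return max(range(len(values)), key=lambda i: values[i])


def accuracy(y_true, y_pred):
    """Labels and predictions are both plain values."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def categorical_accuracy(y_true, y_pred):
    """Labels are one-hot vectors, predictions are probability distributions."""
    return sum(argmax(t) == argmax(p) for t, p in zip(y_true, y_pred)) / len(y_true)


def sparse_categorical_accuracy(y_true, y_pred):
    """Labels are integer class indices, predictions are probability distributions."""
    return sum(t == argmax(p) for t, p in zip(y_true, y_pred)) / len(y_true)


probs = [[0.256, 0.695, 0.048], [0.7, 0.2, 0.1]]   # predicted classes: 1 and 0

print(accuracy([1, 0], [1, 2]))
print(categorical_accuracy([[0, 1, 0], [0, 0, 1]], probs))
print(sparse_categorical_accuracy([1, 0], probs))
```

The iris and MNIST labels are integer class indices and the networks output probability distributions, which is why the scripts in these notes use 'sparse_categorical_accuracy'.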

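model.fit()

fit runs the training process.

```python
model.fit(x_train, y_train,                  # training inputs and training labels
          batch_size=batch_size,             # number of samples per batch
          epochs=epochs,                     # number of passes over the training set
          # give either validation_data or validation_split:
          validation_data=(x_test, y_test),  # test inputs and test labels
          validation_split=fraction,         # fraction of the training set held out for validation
          validation_freq=n)                 # run validation every n epochs
```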
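The bookkeeping behind validation_split, batch_size and validation_freq can be sketched in plain Python. Keras takes the validation samples from the end of the arrays passed to fit, before any shuffling; the helper names and the use of round() below are assumptions for illustration, and Keras's exact rounding may differ.

```python
import math


def split_for_validation(samples, validation_split):
    """Hold out the last fraction of the samples for validation."""
    n_val = round(len(samples) * validation_split)
    split_at = len(samples) - n_val
    return samples[:split_at], samples[split_at:]


def validation_epochs(epochs, validation_freq):
    """1-based epochs after which validation is run."""
    return [e for e in range(1, epochs + 1) if e % validation_freq == 0]


samples = list(range(150))   # stand-in for the 150 iris samples
train, val = split_for_validation(samples, validation_split=0.2)
batches_per_epoch = math.ceil(len(train) / 32)

print(len(train), len(val), batches_per_epoch)
print(validation_epochs(epochs=500, validation_freq=20)[:3])
```

With 150 samples and validation_split=0.2 this gives 120 training and 30 validation samples, and with validation_freq=20 the validation metrics appear only after epochs 20, 40, ..., 500.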

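model.summary()

summary prints the network structure and the parameter counts.

For example, the iris classification network is a single-layer network with four inputs and three outputs.

The figure above shows what model.summary() reports for the iris classification network. A fully connected layer with 4 inputs and 3 outputs has 15 parameters: 4 × 3 = 12 weights plus 3 biases.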
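The count reported by summary can be reproduced with a few lines of arithmetic. The helper dense_param_count is a hypothetical name used only for this sketch.

```python
def dense_param_count(n_inputs, n_units, use_bias=True):
    """Trainable parameters of a fully connected layer."""
    weights = n_inputs * n_units           # one weight per input-output pair
    biases = n_units if use_bias else 0    # one bias per output neuron
    return weights + biases


print(dense_param_count(4, 3))  # 4*3 weights + 3 biases = 15
```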

Reproducing the iris example

Building the network with Sequential

p8_iris_sequential.py

```python
import tensorflow as tf
from sklearn import datasets
import numpy as np

x_train = datasets.load_iris().data
y_train = datasets.load_iris().target

# Shuffle features and labels with the same seed so that they stay aligned
np.random.seed(116)
np.random.shuffle(x_train)
np.random.seed(116)
np.random.shuffle(y_train)
tf.random.set_seed(116)

# One fully connected layer: 4 input features -> 3 classes
model = tf.keras.models.Sequential([
    tf.keras.layers.Dense(3, activation='softmax', kernel_regularizer=tf.keras.regularizers.l2())
])

model.compile(optimizer=tf.keras.optimizers.SGD(learning_rate=0.1),
              loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=False),
              metrics=['sparse_categorical_accuracy'])

# Hold out 20% of the data for validation and validate every 20 epochs
model.fit(x_train, y_train, batch_size=32, epochs=500, validation_split=0.2, validation_freq=20)

model.summary()
```

```
Train on 120 samples, validate on 30 samples
Epoch 1/500
 32/120 [=======>......................] - ETA: 0s - loss: 3.8177 - sparse_categorical_accuracy: 0.3438
120/120 [==============================] - 0s 2ms/sample - loss: 2.1962 - sparse_categorical_accuracy: 0.3500
Epoch 2/500
...
 32/120 [=======>......................] - ETA: 0s - loss: 0.3353 - sparse_categorical_accuracy: 1.0000
120/120 [==============================] - 0s 25us/sample - loss: 0.3535 - sparse_categorical_accuracy: 0.9500
Epoch 150/500
...
 32/120 [=======>......................] - ETA: 0s - loss: 0.3871 - sparse_categorical_accuracy: 0.9688
120/120 [==============================] - 0s 25us/sample - loss: 0.3718 - sparse_categorical_accuracy: 0.9417
Epoch 300/500
...
 32/120 [=======>......................] - ETA: 0s - loss: 0.3613 - sparse_categorical_accuracy: 0.9375
120/120 [==============================] - 0s 25us/sample - loss: 0.3658 - sparse_categorical_accuracy: 0.9583
Epoch 450/500
...
 32/120 [=======>......................] - ETA: 0s - loss: 0.3630 - sparse_categorical_accuracy: 0.9688
120/120 [==============================] - 0s 25us/sample - loss: 0.3486 - sparse_categorical_accuracy: 0.9583
Epoch 500/500
 32/120 [=======>......................] - ETA: 0s - loss: 0.3122 - sparse_categorical_accuracy: 0.9688
120/120 [==============================] - 0s 63us/sample - loss: 0.3333 - sparse_categorical_accuracy: 0.9667 - val_loss: 0.4002 - val_sparse_categorical_accuracy: 1.0000
Model: "sequential"
_________________________________________________________________
Layer (type)                 Output Shape              Param #
=================================================================
dense (Dense)                multiple                  15
=================================================================
Total params: 15
Trainable params: 15
Non-trainable params: 0
_________________________________________________________________
```
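The iris script reseeds the random generator before each of its two shuffles so that features and labels are permuted identically. The sketch below shows the same idea with the standard library's random module instead of NumPy, on toy data invented for the illustration (row i is built so that it belongs to label i).

```python
import random

# Toy data (assumption): row i belongs to label i.
features = [[float(i), float(i) * 10.0] for i in range(10)]
labels = list(range(10))

# Reseeding before each shuffle makes both lists follow the same permutation.
random.seed(116)
random.shuffle(features)
random.seed(116)
random.shuffle(labels)

aligned = all(row[0] == float(label) for row, label in zip(features, labels))
print(aligned)
```

If the second seed call were dropped, the two lists would be shuffled with different permutations and each sample would generally end up paired with the wrong label.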

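Building the network with a class

Sequential makes it quick to build a network in which the output of each layer is the input of the next. A network that contains skip connections or other non-sequential structure cannot be expressed with Sequential; in that case the network structure is declared as a class.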

1-import

2-train, test

3-class MyModel(Model), model = MyModel()

4-model.compile

5-model.fit

6-model.summary

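A neural network structure can be wrapped in a class:

```python
class MyModel(Model):
    def __init__(self):
        super(MyModel, self).__init__()
        # Define the building blocks of the network here, e.g. self.d1 = Dense(...)

    def call(self, x):
        # Call the blocks defined in __init__ to implement the forward pass
        y = self.d1(x)
        return y

model = MyModel()
```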

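The code above is a template for wrapping a network structure in a class.

MyModel is the name of the network being declared. The Model in parentheses means that the new class inherits from the Model class of the tensorflow library.

The class needs two functions. __init__() is the constructor and initializes the parameters of the class; the line super(MyModel, self).__init__() initializes the parameters of the parent class.

After that line, the network structure is initialized: the building blocks that the network needs are created here.

call() invokes the blocks created in __init__() to implement the forward pass and returns the prediction. The iris network written in class style looks as follows.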

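```python
class IrisModel(Model):
    def __init__(self):
        super(IrisModel, self).__init__()
        # Single fully connected layer with 3 outputs (softmax, as in p11_iris_class.py)
        self.d1 = Dense(3, activation='softmax', kernel_regularizer=tf.keras.regularizers.l2())

    def call(self, x):
        y = self.d1(x)
        return y

model = IrisModel()  # instantiate the class; the parentheses are required
```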
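The same pattern, creating layers in __init__ and wiring them together in call, is what makes non-sequential structures such as skip connections possible. The sketch below imitates the pattern in plain Python so that it runs without TensorFlow; TinyDense and SkipModel are hypothetical stand-ins with hand-picked weights, not Keras classes.

```python
class TinyDense:
    """A bias-free linear layer on plain Python lists (stand-in for a Keras layer)."""

    def __init__(self, weights):
        self.weights = weights  # weights[i][j] connects input i to output j

    def __call__(self, x):
        n_out = len(self.weights[0])
        return [sum(x[i] * self.weights[i][j] for i in range(len(x)))
                for j in range(n_out)]


class SkipModel:
    """Layers are created in __init__ and wired together in call()."""

    def __init__(self):
        self.d1 = TinyDense([[1.0, 0.0], [0.0, 2.0]])
        self.d2 = TinyDense([[0.5, 0.5], [0.5, -0.5]])

    def call(self, x):
        h = self.d1(x)
        y = self.d2(h)
        # Skip connection: add the block's input to its output.
        return [a + b for a, b in zip(y, x)]


model = SkipModel()
print(model.call([1.0, 1.0]))  # [2.5, 0.5]
```

Because call is ordinary code, the input x can be reused after two layers have already been applied, which a strictly layer-after-layer list cannot express.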

p11_iris_class.py

```python
import tensorflow as tf
from tensorflow.keras.layers import Dense
from tensorflow.keras import Model
from sklearn import datasets
import numpy as np

x_train = datasets.load_iris().data
y_train = datasets.load_iris().target

# Shuffle features and labels with the same seed so that they stay aligned
np.random.seed(116)
np.random.shuffle(x_train)
np.random.seed(116)
np.random.shuffle(y_train)
tf.random.set_seed(116)


class IrisModel(Model):
    def __init__(self):
        super(IrisModel, self).__init__()
        self.d1 = Dense(3, activation='softmax', kernel_regularizer=tf.keras.regularizers.l2())

    def call(self, x):
        y = self.d1(x)
        return y


model = IrisModel()

model.compile(optimizer=tf.keras.optimizers.SGD(learning_rate=0.1),
              loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=False),
              metrics=['sparse_categorical_accuracy'])

model.fit(x_train, y_train, batch_size=32, epochs=500, validation_split=0.2, validation_freq=20)

model.summary()
```

```
Train on 120 samples, validate on 30 samples
Epoch 1/500
 32/120 [=======>......................] - ETA: 0s - loss: 3.8177 - sparse_categorical_accuracy: 0.3438
120/120 [==============================] - 0s 2ms/sample - loss: 2.1962 - sparse_categorical_accuracy: 0.3500
Epoch 2/500
 32/120 [=======>......................] - ETA: 0s - loss: 0.3353 - sparse_categorical_accuracy: 1.0000
120/120 [==============================] - 0s 23us/sample - loss: 0.3535 - sparse_categorical_accuracy: 0.9500
Epoch 150/500
 32/120 [=======>......................] - ETA: 0s - loss: 0.3871 - sparse_categorical_accuracy: 0.9688
120/120 [==============================] - 0s 25us/sample - loss: 0.3718 - sparse_categorical_accuracy: 0.9417
Epoch 300/500
 32/120 [=======>......................] - ETA: 0s - loss: 0.3613 - sparse_categorical_accuracy: 0.9375
120/120 [==============================] - 0s 21us/sample - loss: 0.3658 - sparse_categorical_accuracy: 0.9583
Epoch 450/500
 32/120 [=======>......................] - ETA: 0s - loss: 0.3630 - sparse_categorical_accuracy: 0.9688
120/120 [==============================] - 0s 25us/sample - loss: 0.3486 - sparse_categorical_accuracy: 0.9583
Epoch 500/500
 32/120 [=======>......................] - ETA: 0s - loss: 0.3122 - sparse_categorical_accuracy: 0.9688
120/120 [==============================] - 0s 66us/sample - loss: 0.3333 - sparse_categorical_accuracy: 0.9667 - val_loss: 0.4002 - val_sparse_categorical_accuracy: 1.0000
Model: "iris_model"
_________________________________________________________________
Layer (type)                 Output Shape              Param #
=================================================================
dense (Dense)                multiple                  15
=================================================================
Total params: 15
Trainable params: 15
Non-trainable params: 0
_________________________________________________________________
```

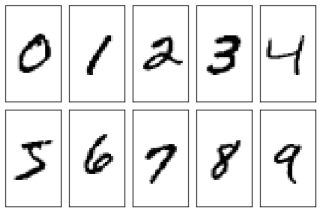

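The MNIST dataset

The MNIST dataset contains 70,000 images of handwritten digits from 0 to 9, each 28×28 pixels; 60,000 images are used for training and 10,000 for testing. Each image has 784 (28×28) pixels. For a fully connected network, the 784 pixels can be arranged into a one-dimensional array of length 784 and used as the input features. Sample images from the dataset are shown below.

The keras library provides an interface to the mnist dataset. As the code below shows, load_data() reads the training set and the test set directly from mnist.

```python
mnist = tf.keras.datasets.mnist
(x_train, y_train), (x_test, y_test) = mnist.load_data()
```

Before the data enters a fully connected network it has to be flattened into a one-dimensional array, so that the grayscale values of the 784 pixels become the input features of the network.

```python
# Flatten layer: reshapes each 28x28 image into a vector of length 784
tf.keras.layers.Flatten()
```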
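What flattening does to a single image can be shown in plain Python. The function flatten below is an illustrative stand-in, not the Keras layer, and the image is a synthetic 28×28 grid in which each pixel stores its own row-major position.

```python
def flatten(image):
    """Concatenate the rows of a 2-D image into one 1-D list (row-major order)."""
    return [pixel for row in image for pixel in row]


# Synthetic 28x28 "image": the pixel at (r, c) holds its row-major index.
image = [[r * 28 + c for c in range(28)] for r in range(28)]
flat = flatten(image)

print(len(flat))  # 28 * 28 = 784 input features
```

The first row of the image fills positions 0 to 27 of the flattened array, the second row positions 28 to 55, and so on.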

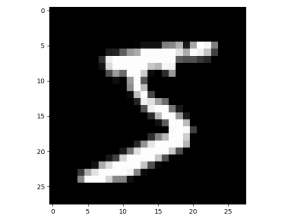

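Two functions from matplotlib's pyplot module (imported as plt) display an image from the training set.

```python
plt.imshow(x_train[0], cmap='gray')  # draw the first training image in grayscale
plt.show()
```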

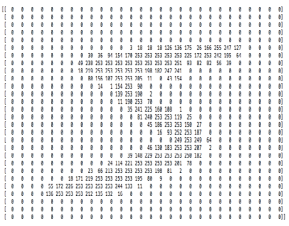

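Use print to output the first sample of the training set as a two-dimensional array, as shown below.

```python
print("x_train[0]:", x_train[0])
```

```python
# Label of the first training sample
print("y_train[0]:", y_train[0])
```

Print the shape of the test set inputs: 10000 samples of 28 rows by 28 columns, i.e. a three-dimensional array.

```python
print("x_test.shape:", x_test.shape)
```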

p13_mnist_datasets.py

```python
import tensorflow as tf
from matplotlib import pyplot as plt

mnist = tf.keras.datasets.mnist
(x_train, y_train), (x_test, y_test) = mnist.load_data()

# Display the first image of the training set
plt.imshow(x_train[0], cmap='gray')
plt.show()

# Print the first training sample and its label
print("x_train[0]:\n", x_train[0])
print("y_train[0]:\n", y_train[0])

# Print the shapes of the training set
print("x_train.shape:\n", x_train.shape)
print("y_train.shape:\n", y_train.shape)

# Print the shapes of the test set
print("x_test.shape:\n", x_test.shape)
print("y_test.shape:\n", y_test.shape)
```

Training on the MNIST dataset

Handwritten digit recognition with Sequential

p14_mnist_sequential.py

```python
import tensorflow as tf

mnist = tf.keras.datasets.mnist
(x_train, y_train), (x_test, y_test) = mnist.load_data()
# Scale the pixel values from [0, 255] to [0, 1]
x_train, x_test = x_train / 255.0, x_test / 255.0

model = tf.keras.models.Sequential([
    tf.keras.layers.Flatten(),
    tf.keras.layers.Dense(128, activation='relu'),
    tf.keras.layers.Dense(10, activation='softmax')
])

model.compile(optimizer='adam',
              loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=False),
              metrics=['sparse_categorical_accuracy'])

# Validate on the test set after every epoch
model.fit(x_train, y_train, batch_size=32, epochs=5, validation_data=(x_test, y_test), validation_freq=1)

model.summary()
```

In the output below, val_sparse_categorical_accuracy is the accuracy measured on the test set.

```
60000/60000 [==============================] - 3s 48us/sample - loss: 0.0455 - sparse_categorical_accuracy: 0.9852 - val_loss: 0.0700 - val_sparse_categorical_accuracy: 0.9789
Model: "sequential"
_________________________________________________________________
Layer (type)                 Output Shape              Param #
=================================================================
flatten (Flatten)            multiple                  0
_________________________________________________________________
dense (Dense)                multiple                  100480
_________________________________________________________________
dense_1 (Dense)              multiple                  1290
=================================================================
Total params: 101,770
Trainable params: 101,770
Non-trainable params: 0
_________________________________________________________________
```

Handwritten digit recognition with a class

p15_mnist_class.py

```python
import tensorflow as tf
from tensorflow.keras.layers import Dense, Flatten
from tensorflow.keras import Model

mnist = tf.keras.datasets.mnist
(x_train, y_train), (x_test, y_test) = mnist.load_data()
# Scale the pixel values from [0, 255] to [0, 1]
x_train, x_test = x_train / 255.0, x_test / 255.0


class MnistModel(Model):
    def __init__(self):
        super(MnistModel, self).__init__()
        self.flatten = Flatten()
        self.d1 = Dense(128, activation='relu')
        self.d2 = Dense(10, activation='softmax')

    def call(self, x):
        x = self.flatten(x)
        x = self.d1(x)
        y = self.d2(x)
        return y


model = MnistModel()

model.compile(optimizer='adam',
              loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=False),
              metrics=['sparse_categorical_accuracy'])

model.fit(x_train, y_train, batch_size=32, epochs=5, validation_data=(x_test, y_test), validation_freq=1)

model.summary()
```

The accuracy reported at each step during training is measured on the training set, so it says little about how well the network generalizes. The figure that matters is the test set accuracy, which is reported every validation_freq epochs.

```
60000/60000 [==============================] - 3s 48us/sample - loss: 0.0440 - sparse_categorical_accuracy: 0.9869 - val_loss: 0.0836 - val_sparse_categorical_accuracy: 0.9741
Model: "mnist_model"
_________________________________________________________________
Layer (type)                 Output Shape              Param #
=================================================================
flatten (Flatten)            multiple                  0
_________________________________________________________________
dense (Dense)                multiple                  100480
_________________________________________________________________
dense_1 (Dense)              multiple                  1290
=================================================================
Total params: 101,770
Trainable params: 101,770
Non-trainable params: 0
_________________________________________________________________
```

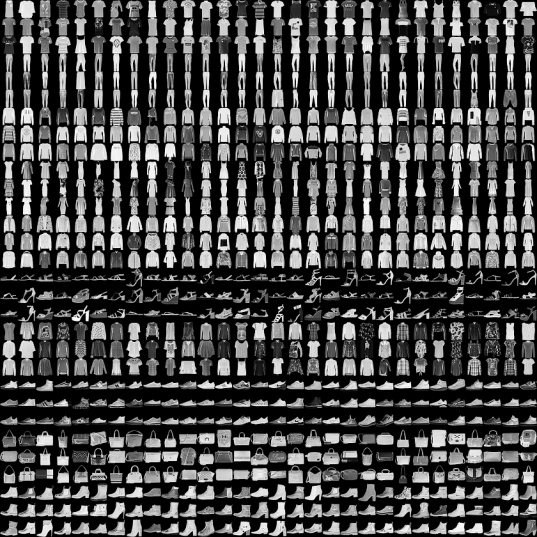

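The Fashion dataset

The Fashion_mnist dataset shares almost all the properties of mnist: it contains 60000 training images and 10000 test images, the images fall into ten classes, and each image has a resolution of 28×28.

The classes are as follows:

Sample images are as follows:

Because the Fashion_mnist dataset has the same properties as the mnist dataset, the mnist training code only needs its data loading switched to Fashion_mnist in order to train on the Fashion dataset.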
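For reference when reading predictions, the ten integer labels of Fashion-MNIST correspond to the class names below, in the order documented for the dataset. The names are not part of the arrays returned by load_data(); the constant and the helper label_to_name are hypothetical names used only for this sketch.

```python
# Class names in label order (index = integer label in y_train / y_test).
FASHION_MNIST_CLASS_NAMES = [
    "T-shirt/top", "Trouser", "Pullover", "Dress", "Coat",
    "Sandal", "Shirt", "Sneaker", "Bag", "Ankle boot",
]


def label_to_name(label):
    """Translate an integer label (0 to 9) into its class name."""
    return FASHION_MNIST_CLASS_NAMES[label]


print(label_to_name(9))  # Ankle boot
```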

p16_fashion_sequential.py

```python
import tensorflow as tf

fashion = tf.keras.datasets.fashion_mnist
(x_train, y_train), (x_test, y_test) = fashion.load_data()
# Scale the pixel values from [0, 255] to [0, 1]
x_train, x_test = x_train / 255.0, x_test / 255.0

model = tf.keras.models.Sequential([
    tf.keras.layers.Flatten(),
    tf.keras.layers.Dense(128, activation='relu'),
    tf.keras.layers.Dense(10, activation='softmax')
])

model.compile(optimizer='adam',
              loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=False),
              metrics=['sparse_categorical_accuracy'])

model.fit(x_train, y_train, batch_size=32, epochs=5, validation_data=(x_test, y_test), validation_freq=1)

model.summary()
```

```
60000/60000 [==============================] - 3s 48us/sample - loss: 0.2981 - sparse_categorical_accuracy: 0.8900 - val_loss: 0.3429 - val_sparse_categorical_accuracy: 0.8777
Model: "sequential"
_________________________________________________________________
Layer (type)                 Output Shape              Param #
=================================================================
flatten (Flatten)            multiple                  0
_________________________________________________________________
dense (Dense)                multiple                  100480
_________________________________________________________________
dense_1 (Dense)              multiple                  1290
=================================================================
Total params: 101,770
Trainable params: 101,770
Non-trainable params: 0
_________________________________________________________________
```

p16_fashion_class.py

```python
import tensorflow as tf
from tensorflow.keras.layers import Dense, Flatten
from tensorflow.keras import Model

fashion = tf.keras.datasets.fashion_mnist
(x_train, y_train), (x_test, y_test) = fashion.load_data()
# Scale the pixel values from [0, 255] to [0, 1]
x_train, x_test = x_train / 255.0, x_test / 255.0


class MnistModel(Model):
    def __init__(self):
        super(MnistModel, self).__init__()
        self.flatten = Flatten()
        self.d1 = Dense(128, activation='relu')
        self.d2 = Dense(10, activation='softmax')

    def call(self, x):
        x = self.flatten(x)
        x = self.d1(x)
        y = self.d2(x)
        return y


model = MnistModel()

model.compile(optimizer='adam',
              loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=False),
              metrics=['sparse_categorical_accuracy'])

model.fit(x_train, y_train, batch_size=32, epochs=5, validation_data=(x_test, y_test), validation_freq=1)

model.summary()
```

```
60000/60000 [==============================] - 3s 48us/sample - loss: 0.2965 - sparse_categorical_accuracy: 0.8910 - val_loss: 0.3379 - val_sparse_categorical_accuracy: 0.8780
Model: "mnist_model"
_________________________________________________________________
Layer (type)                 Output Shape              Param #
=================================================================
flatten (Flatten)            multiple                  0
_________________________________________________________________
dense (Dense)                multiple                  100480
_________________________________________________________________
dense_1 (Dense)              multiple                  1290
=================================================================
Total params: 101,770
Trainable params: 101,770
Non-trainable params: 0
_________________________________________________________________
```